Copyright ©

This work is licensed by a Creative Commons license .

First printing, February 2022

First, this thesis is dedicated to my family. We are a small family: my parents, my grandparents and my uncles and aunt. Specially, to my mother, who has never failed to encourage me to follow what I like to study, also for her constancy in response to my chaos. Also, specially to my father, from whom I got the passion for photography and computer science. Knowing this one could understand better this thesis. To my paternal grandparents who are no longer with us. To my maternal grandmother who always has good advice. Recently, she said to my mother: "the kid has studied enough; since you send it to kindergarten with 3 years, he hasn’t stopped", referring to this thesis!

To my friends, beginning with Anna, who is my flatmate and my partner; and who has supported me during these final thesis months. To other friends from the high school, both neighborhoods I grew up and those friends from the faculty, also. To other colleagues with whom I have shared the fight for a better university model, and now we share other fights, to my comrades!

To the Department of Electronics and Biomedical Engineering of Universitat de Barcelona, to all its members. Specially, to Dr. A. Cornet for being a nice host at the department. Specially, to Dr. A. Herms, to encourage me to pursue the thesis in this department and to contact Dr. J. D. Prades, director of this thesis. Also, to other colleagues from the MIND research group i from the Laboratory from ’the \(\mathrm{0}\) floor’: to Dr. C. Fàbrega, to Dr. O. Casals, and many others!

To Dr. J. D. Prades himself, for the opportunity by accepting this thesis proposal, and embrace the idea I presented, leading to the creation of ColorSensing. To the ColorSensing team, begining with María Eugenia Martín-Hidalgo, co-funder and CEO of ColorSensing. Without forgetting, all the other teammates: to Josep Maria, to Hanna, to Dani, to María, to Ferran and to Míriam (Dr. M. Marchena). But also, to the former teammates: to Peter, to Oriol (Dr. O. Cusola), to Arnau, to Carles, to Pablo, to Gerard, to Hamid and to David. Thank you very much all for this journey.

This thesis has been funded in part by the European Research Council under the FP7 and the H2020 programs, no. \(\mathrm{727297}\) and no. \(\mathrm{957527}\). Also, by the Eurostarts program with the Agreement no. \(\mathrm{11453}\). Additional funding sources have been: AGAUR - PRODUCTE (\(\mathrm{2016-PROD-00036}\)), BBVA Leonardo, and the ICREA Academia program.

Aquesta tesi va dedicada en primera instància a la meva família. Som una família petita: a mons pares, als meus avis i als meus tiets. Especialment, a ma mare, perquè mai a fallat en animar-me per perseguir el que m’agrada estudiar, també per la seva constància davant del meu desordre. Especialment també, al meu pare, per la seva passió amb la fotografia i la informàtica que des de petit m’ha inculcat, així hom pot entendre aquesta tesi molt millor. Als meus avis paterns que ja no estan. A la meva àvia materna que sempre té bons consells, i fa poc li va dir a ma mare: "si el nen ja ha estudiat prou, d’ençà que el vas portar amb tres anys (al col·legi) no ha parat d’estudiar", referint-se a aquesta tesi!

Als meus amics, començant per l’Anna, que és la meva companya de pis, i la meva parella; que m’ha recolzat durant aquests darrers mesos a casa mentre redactava la tesi. A tots aquells amics de l’institut, del barri, de la ’urba’ i de la facultat. També a aquelles companyes amb les quals hem compartit lluites a la universitat des de l’època d’estudi i ara seguim compartint altres espais polítics, els i les meves camarades.

Al Departament d’Enginyeria Electrònica i Biomèdica de la Universitat de Barcelona, a tots els seus membres. Especialment, al Dr. A. Cornet per la seva acollida al departament. Al Dr. A. Herms, per animar-me a fer la tesi al Departament i contactar al Dr. J. D. Prades, director d’aquesta tesi. També, a altres companyes i companys del MIND, el nostre grup de recerca, i del Laboratori ’de planta \(\mathrm{0}\)’: al Dr. C. Fàbrega, la Dra. O. Casals, i tots els altres!

Al mateix Dr. J. D. Prades, per l’oportunitat acceptant aquesta tesi, i acollir la idea que li vaig presentar fins al punt d’impulsar la creació de ColorSensing. A tot l’equip de ColorSensing, començant per la Maria Eugenia Martín-Hidalgo, cofundadora i CEO de ColorSensing. Però, per suposat, a la resta de l’equip: al Josep Maria, a la Hanna, al Dani, a la María, al Ferran i a la Míriam (Dra. M. Marchena). Però també als seus antics membres amb qui hem coincidit: al Peter, a l’Oriol (Dr. O. Cusola), a l’Arnau, al Carles, al Pablo, al Gerard, a l’Hamid i al David. Moltes gràcies a tots i totes per fer aquest viatge conjuntament.

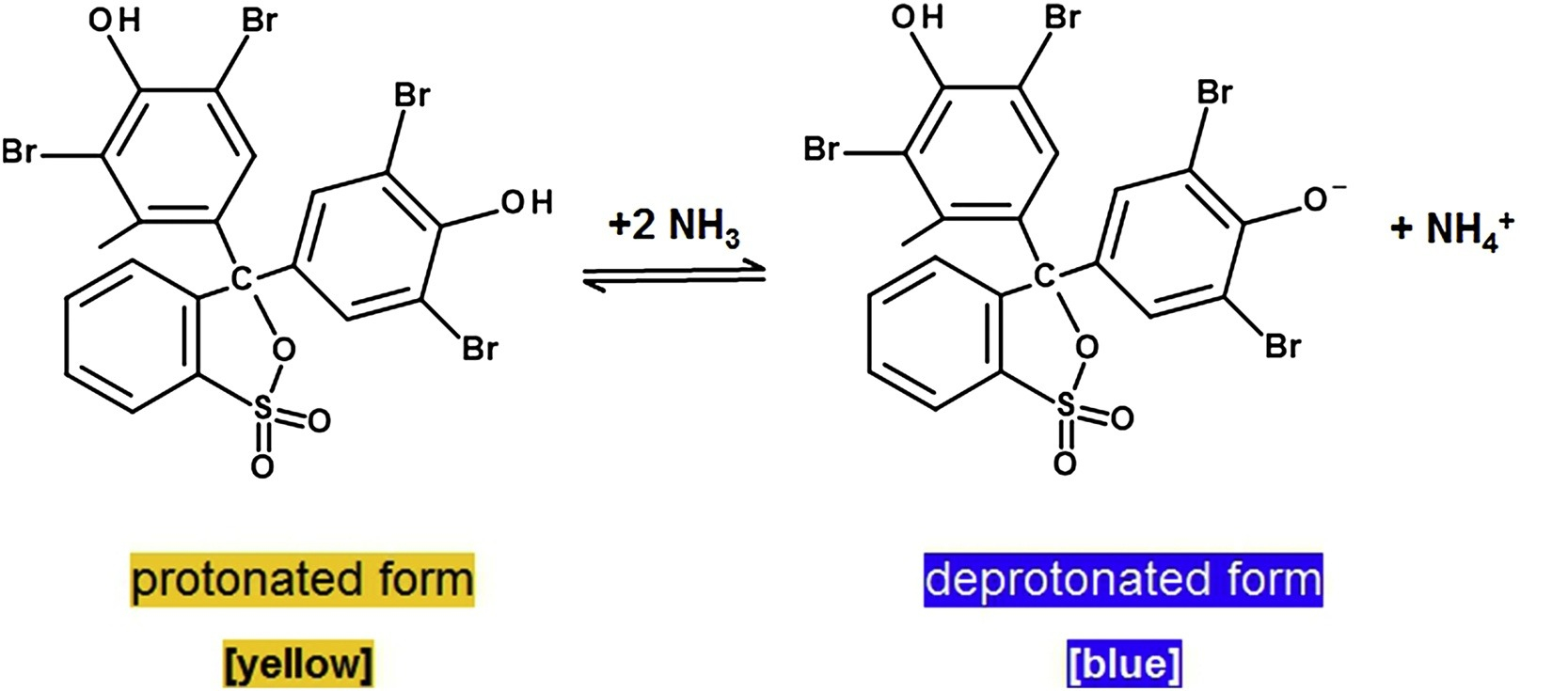

Color-based sensors, where a material change its color from one color to another, and this is change is observed by a user who performs a manual reading, often offer qualitative solutions. These materials change their color in response to changes in a certain physical or chemical magnitude. We can find colorimetric indicators with several sensing targets, such as: temperature, humidity, environmental gases, etc. The common approach to quantify these sensors is to place ad hoc electronic components, e.g. a reader device.

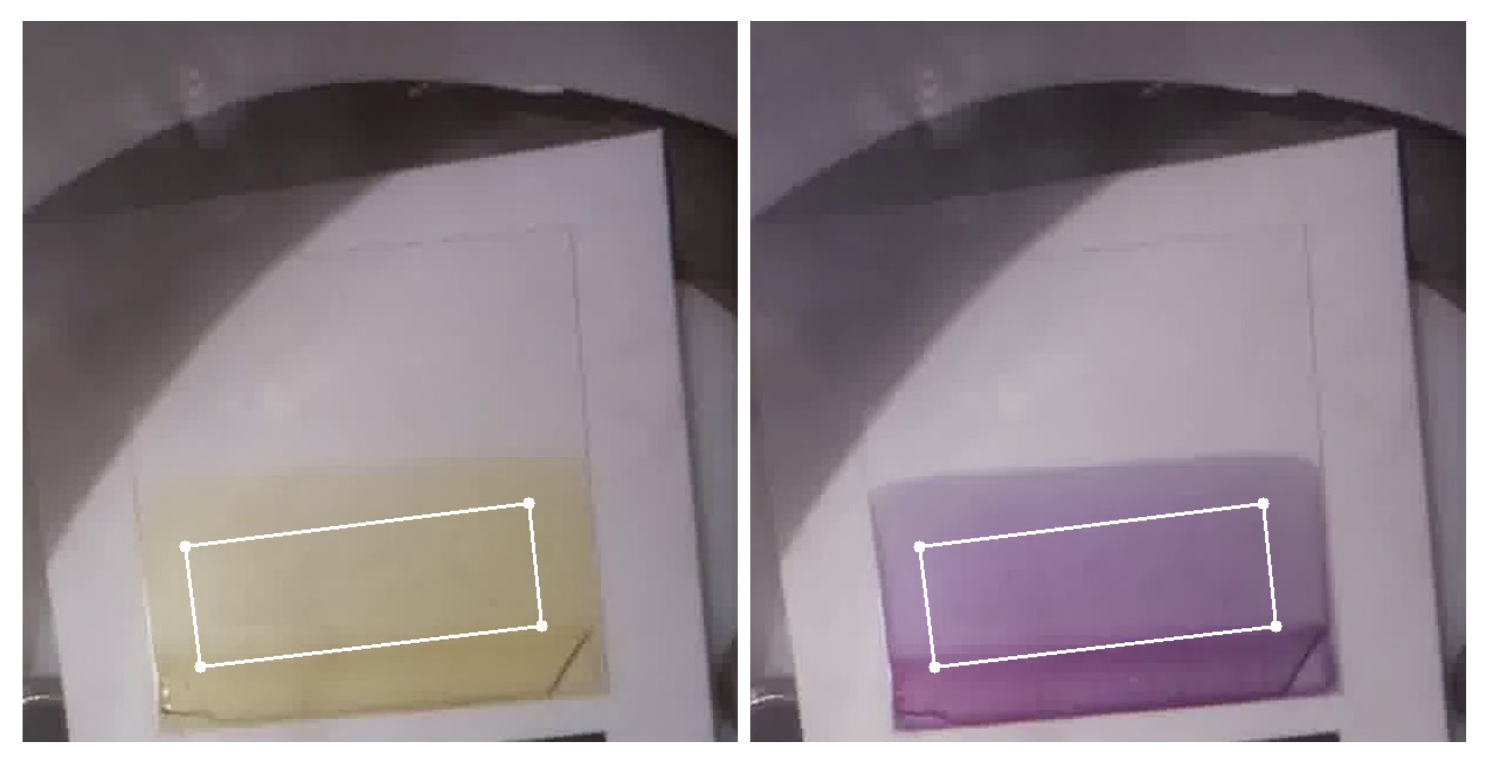

With the rise of smartphone technology, this thesis builds around the possibility to automatically acquire a digital image of those sensors and then compute a quantitative measure is near. By leveraging this measuring process to the smartphones, we would avoid the use of ad hoc electronic components, thus reducing colorimetric application cost. However, there exists a challenge on how-to acquire the images of the colorimetric applications and how-to do it consistently, coping with the disparity of external factors affecting the measure, such as ambient light conditions or different camera modules.

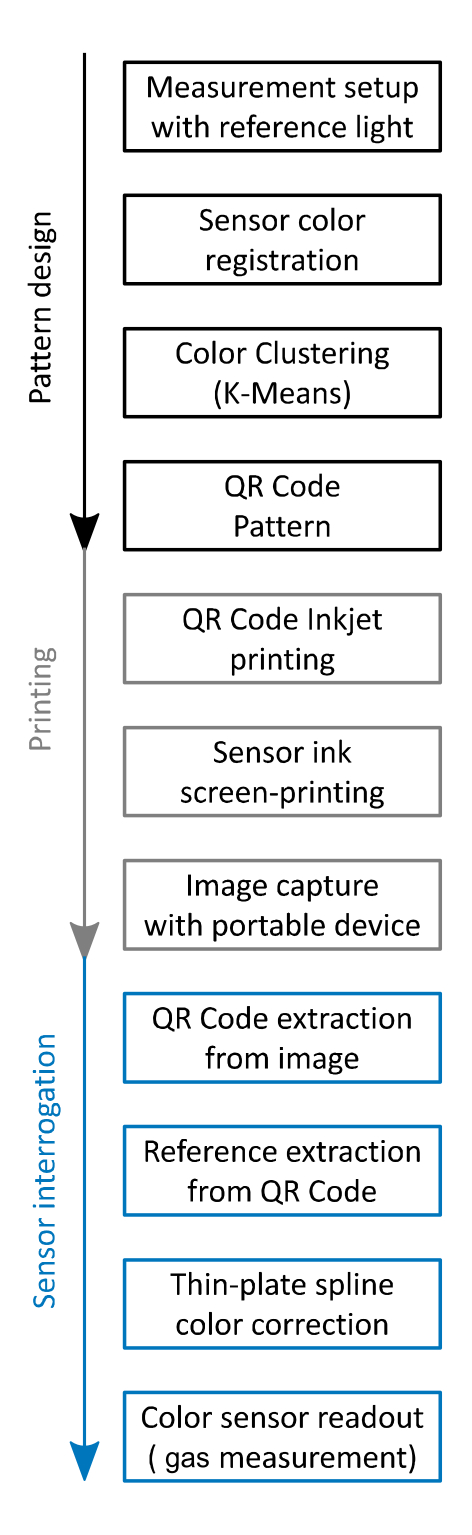

In this thesis, we tackle the challenges to digitize and quantify colorimetric applications, such as colorimetric indicators. We make a proposal to use 2D barcodes, well-known computer vision patterns, as the base technology to overcome those challenges. Our research focused on four main challenges: (I) to capture barcodes on top of real-world challenging surfaces (bottles, food packages, etc.), which are the usual surfaces where colorimetric indicators are placed; (II) to define a new 2D barcode to embed colorimetric features in a back-compatible fashion; (III) to achieve image consistency when capturing images with smartphones by reviewing existent methods and proposing a new color correction method, based upon thin-plate splines mappings; and (IV) to demonstrate a specific application use case applied to a colorimetric indicator for sensing \(CO_2\) in the range of modified atmosphere packaging –MAP–, one of the common food-packaging standards.

Els sensors basats en color, normalment ofereixen solucions qualitatives, ja que en aquestes solucions un material canvia el seu color a un altre color, i aquest canvi de color és observat per un usuari que fa una mesura manual. Aquests materials canvien de color en resposta a un canvi en una magnitud física o química. Avui en dia, podem trobar indicadors colorimètrics que amb diferents objectius, per exemple: temperatura, humitat, gasos ambientals, etc. L’opció més comuna per quantitzar aquests sensors és l’ús d’electrònica addicional, és a dir, un lector.

Amb l’augment de la tecnologia dels telèfons intel·ligents, aquesta tesi explora les possibilitats d’automatitzar l’adquisició d’imatges digitals d’aquests sensors i després computar una mesura quantitativa és a prop. Desplaçant aquest procés de mesura als telèfons mòbils, evitem l’ús d’aquesta electrònica addicional, i així, es redueix el cost de l’aplicació colorimètrica. Tanmateix, existeixen reptes sobre com adquirir les imatges de les aplicacions colorimètriques i de com fer-ho de forma consistent, a causa de la disparitat de factors externs que afecten la mesura, com per exemple la llum ambient or les diferents càmeres utilitzades.

En aquesta tesi, encarem els reptes de digitalitzar i quantitzar aplicacions colorimètriques, com els indicadors colorimètrics. Fem la proposta d’utilitzar codis de barres en dues dimensions, que són coneguts patrons de visió per computador, com a base de la nostra tecnologia per superar aquests reptes. Hem enfocat la nostra recerca en quatre reptes principals: (I) capturar codis de barres sobre de superfícies del món real (ampolles, safates de menjar, etc.), que són les superfícies on usualment aquests indicadors colorimètrics estan situats; (II) definir un nou codi de barres en dues dimensions per encastar elements colorimètrics de forma retro-compatible; (III) aconseguir consistència en la captura d’imatges quan es capturen amb telèfons mòbils, revisant mètodes de correcció de color existents i proposant un nou mètode basat en transformacions geomètriques que utilitzen splines; i (IV) demostrar l’ús de la tecnologia en un cas específic aplicat a un indicador colorimètric per detectar \(CO_2\) en el rang per envasos amb atmosfera modificada –MAP–, un dels estàndards d’envasat alimentari.

The rise of the smartphone technology developed in parallel to the popularization of digital cameras enabled an easier access to photography devices to the people. Nowadays, modern smartphones have onboard digital cameras that can feature good color reproduction for imaging uses [1].

Alongside with this phenomenon, there has been a popularization of color-based solutions to detect biochemistry analytes [2]. Both phenomena are probable to be linked, since the first one eases the second. Scientists who want to pursue research to discover new or improve existent color-based analytics found themselves with better and better imaging tools, spending fewer and fewer resources.

Color-based sensing [2] is often preferred over electronic sensing [3] for three reasons: \(\mathrm{1}\)) the rapid detection of the analytes; \(\mathrm{2}\)) the high sensitivity; and \(\mathrm{3}\)) the high selectivity of colormetric sensors. Nevertheless, imaging acquisition on smartphone devices still presents some acquisition challenges, and how to overcome those challenges is still an open debate [4].

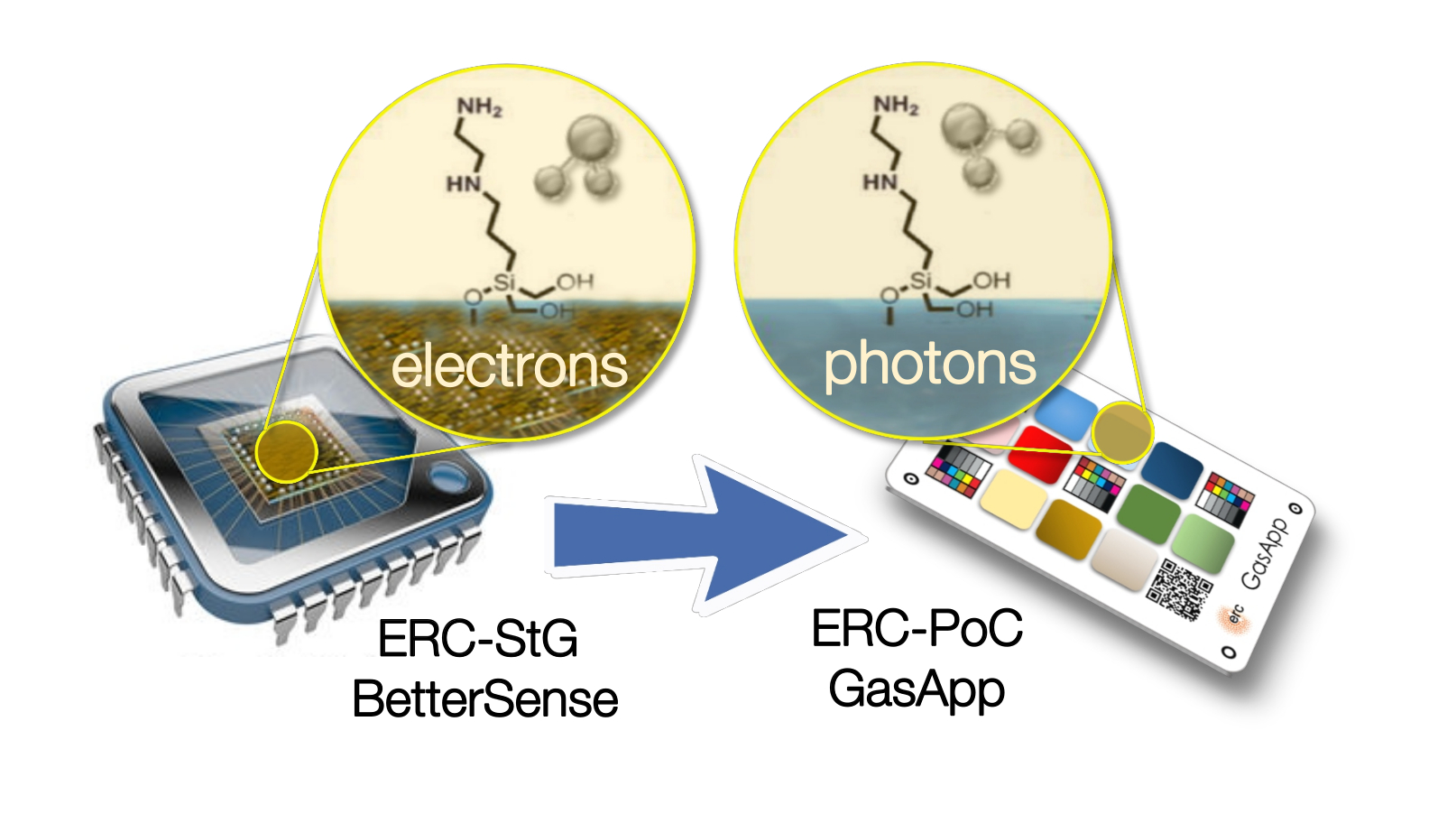

This is why, the ERC-StG BetterSense project (ERC n. 336917) was granted the extension ERC-PoC GasApp project (ERC n.727297). Bettersense aimed to address the high power consumption and the poor selectivity of electronic gas sensor technologies [5]. GasApp aimed to bring the capability to detect gases to smartphone technology, relying on color-based sensor technology [6].

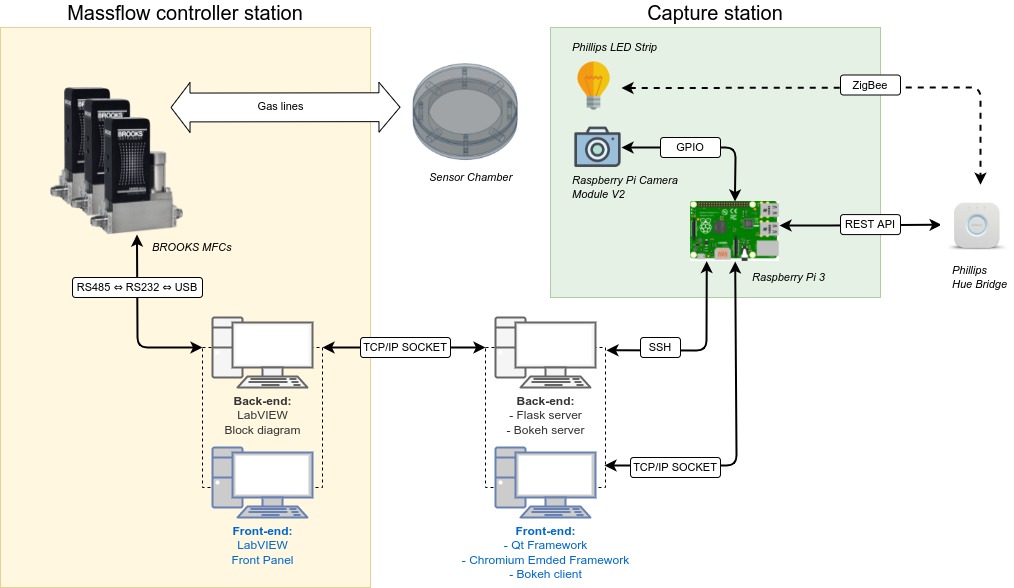

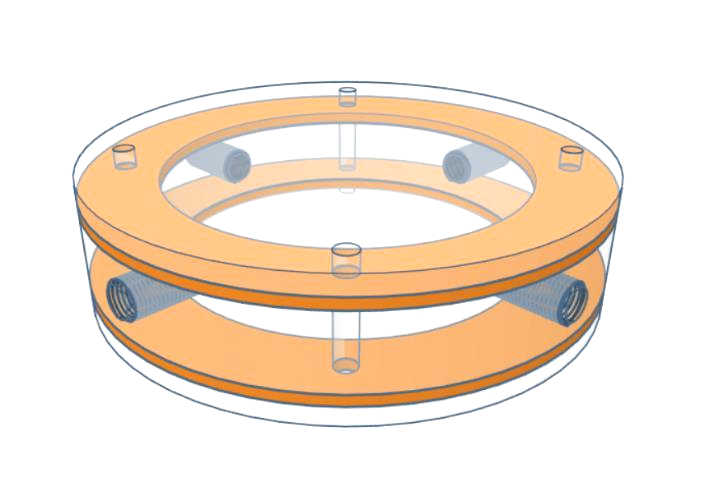

The accumulated knowledge from BetterSense was translated into the GasApp project to create colorimetric indicators to sense target gases, the GasApp proposal is detailed in [fig:bettersense2gasapp]. Later on, the SnapGas project (Eurostars n. 11453) was also granted to carry on this research topic, and apply the new technology to other colorimetric indicators to sense environmental gases [7].

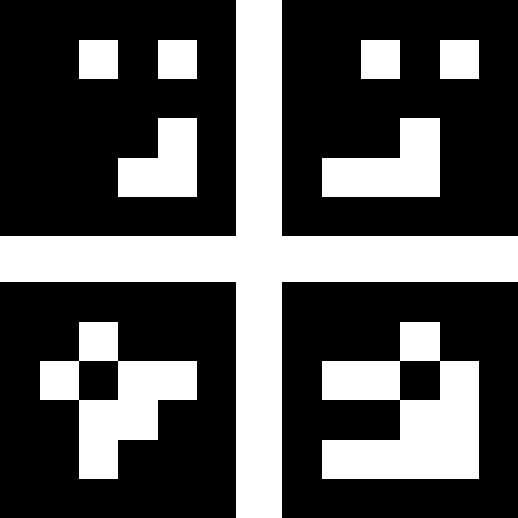

The GasApp proposal was based on changing from electronic devices to colorimetric indicators, thus leveraging the electronic components of the sensor readout to handheld smartphones. To do so, GasApp proposed a solution implementing an array with colorimetric indicators placed on top of a card-sized substrate to be captured by a smartphone device (see [fig:bettersense2gasapp]).

The design of this array of colorimetric indicators presented several challenges, such as: detecting and extracting the card and the desired region of interest (sensors), embedding one or more color charts and later perform color correction techniques to achieve adequate sensor readouts at any possible scenario a mobile phone could take a capture.

The research of this thesis started in this context, and the work here presented aims to tackle these problems and resolve them with an integral solution. Let us go deeper in some of these challenges to properly formulate our thesis proposal.

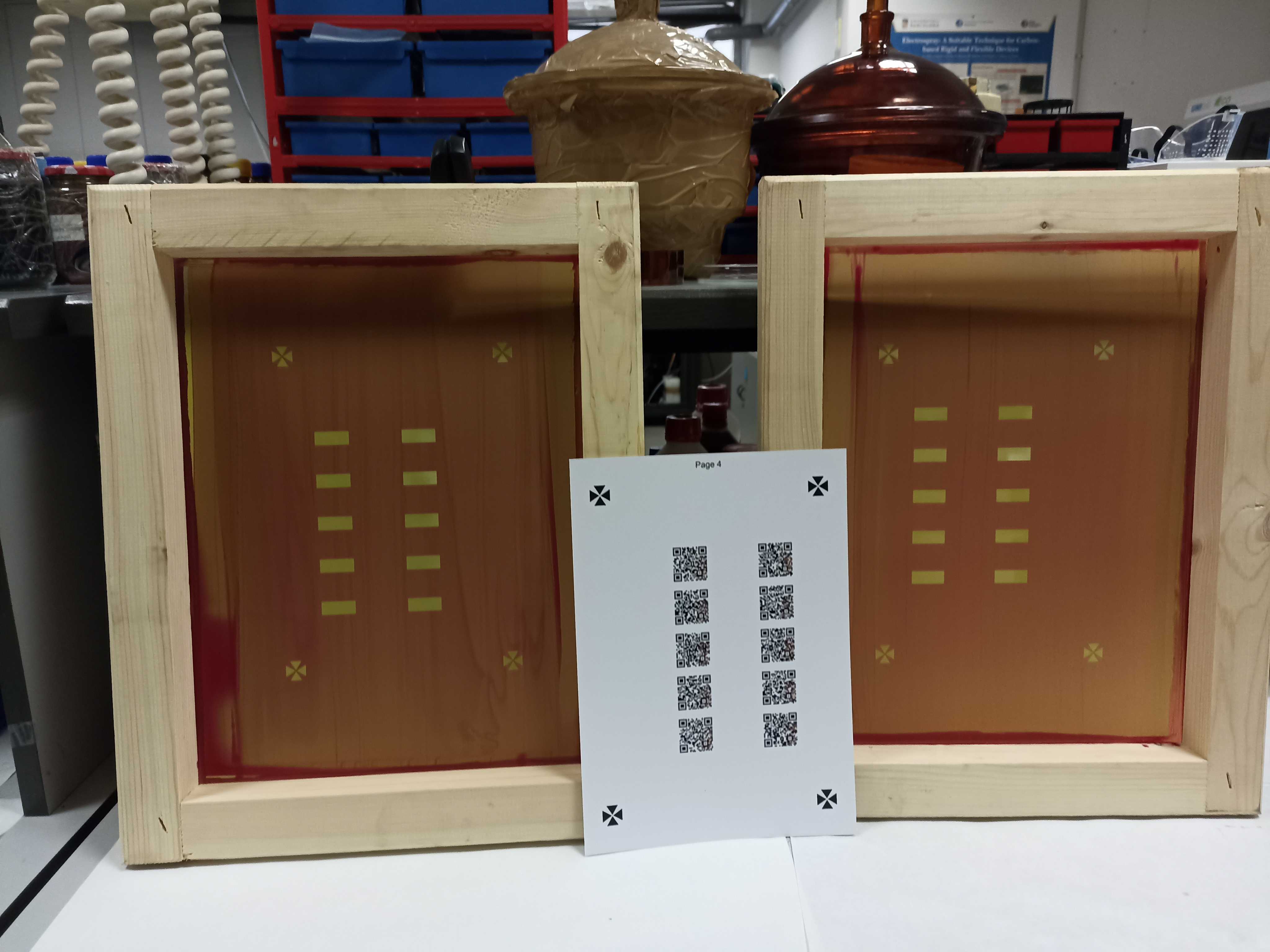

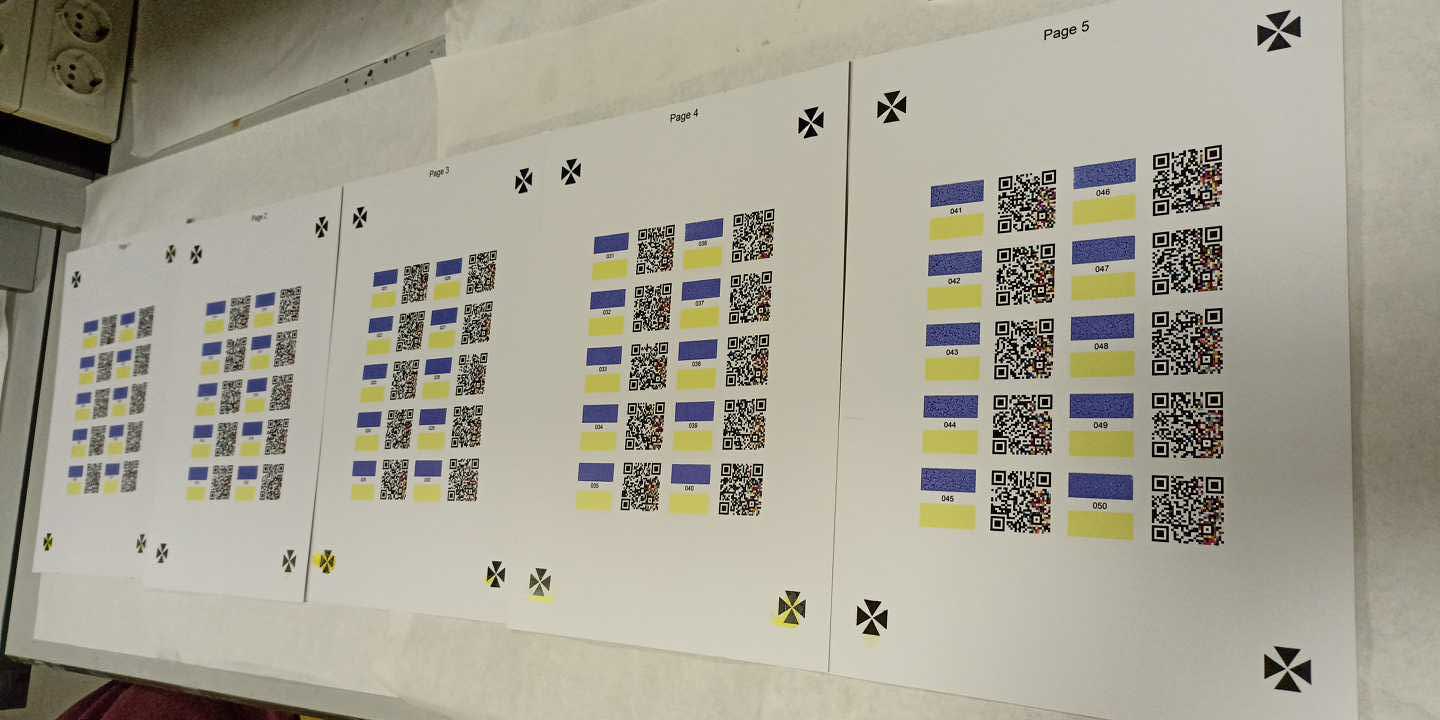

First, the fabrication of the color-based sensors presents a challenge itself. There exists is a common starting point in printed sensors technologies to use ink-jet printing as the first approach to the problem to fabricate a printed sensor [8], [9]. However, ink-jet printing is an expensive and often limited printing technology from the standpoint of view of mass-production [10].

Second, color reproduction is a wide-known challenge of digital cameras [11]. Often, when a digital camera captures a scene it can produce several artifacts during the capture (e.g. underexposure, overexposure, ...), this is represented in [fig:color_artifacts].

The problem of color reproduction, involves a directly linked problem: the problem of achieving image consistency among datasets [12]. While color reproduction aims at matching the color of a given object when reproduced in another device as an image (e.g. a painting, a printed photo, a digital photo on a screen, etc.), image consistency is the problem of taking different images of the same object in different illumination conditions and with different capturing devices, to finally obtain the same apparent colors for this object.

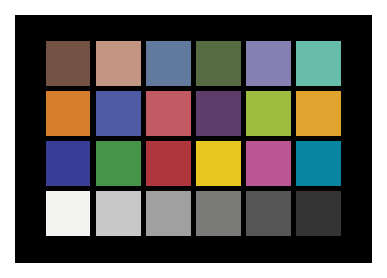

Usually, both problems are solved with the addition of color rendition charts to the scene. Color charts are machine-readable patterns which contain several color references [13]. Color charts bring a systematic way of solving the image consistency problem by increasing the amount of color references to create subsequently better color corrections than the default white-balance [14], [15].

Third, using smartphones to acquire image data often presents computer vision challenges. On the one hand, authors preferred to enclose the smartphone device in a fixed setup [16], [17]. On the other hand, there exists a consolidated knowledge on computer vision techniques, which could be applied to readout colorimetric sensors with handheld smartphones [18].

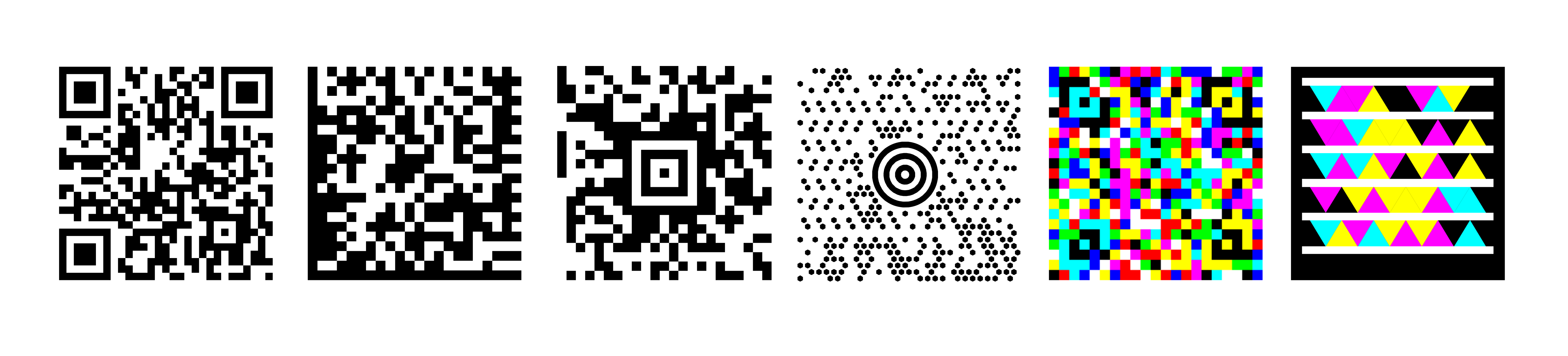

Computer vision often seeks to extract features from the captured scene to be able to perform the desired operations on the image, such as: projective corrections, color readouts, etc. These features are objects with unique contour metrics or shapes, like the ArUco codes (see [fig:aruco_codes]) used in augmented reality technology [19].

Moreover, 2D barcode technology is based upon this principle: encode data into machine-readable patterns which are easy to extract from a scene thanks to their uniqueness. QR Codes are the most known 2D barcodes [20].

This is why, other authors had proposed solutions to print QR Codes with using colorimetric indicators as their printing ink. Rendering QR Codes which change its color when the target substance is detected [21]. Even, using colorimetric dyes as actuators, where authors enhanced the QR Code capacity instead of sensing any material [22].

Altogether, the presented solutions did not fully resolve what GasApp needed: an integrated, disposable, cost-effective machine-readable pattern to allocate colorimetric environmental sensors. The state-of-the-art research presented partial solutions, i.e. the colorimetric indicator was tackled, but there was not a proposal on how to perform automated readouts. Or, the sensor was arranged in a QR Code layout, but color correction was not tackled. Or, the color calibration problem was approached, but any of the other two problems were tackled, etc.

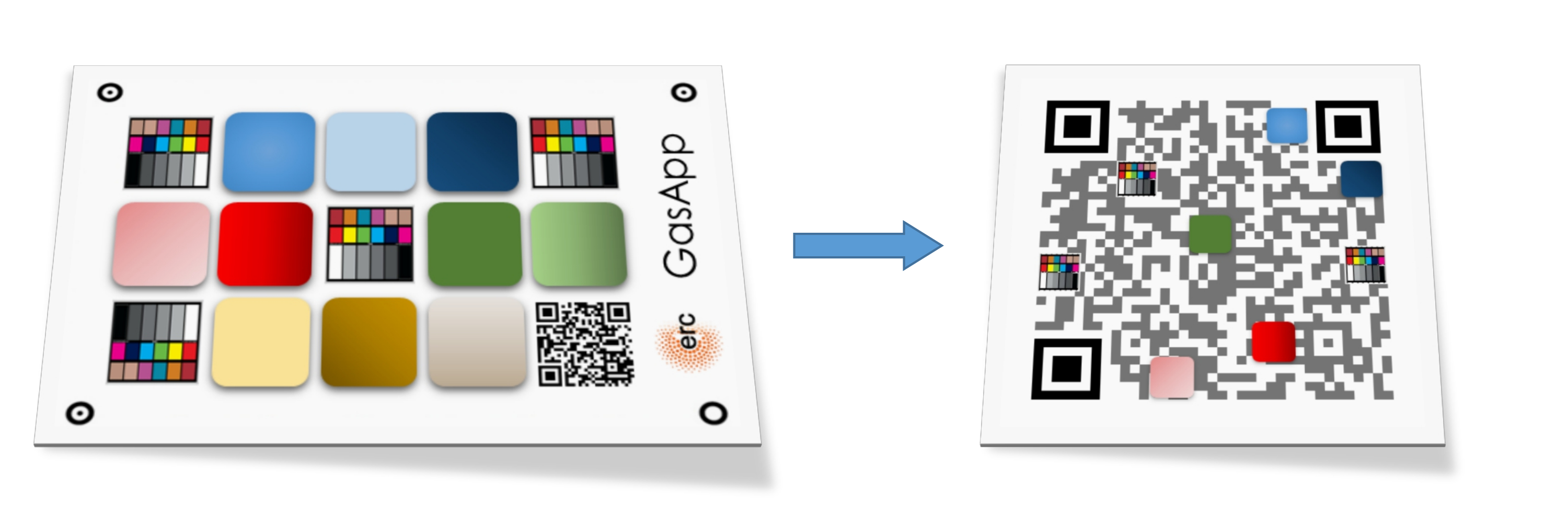

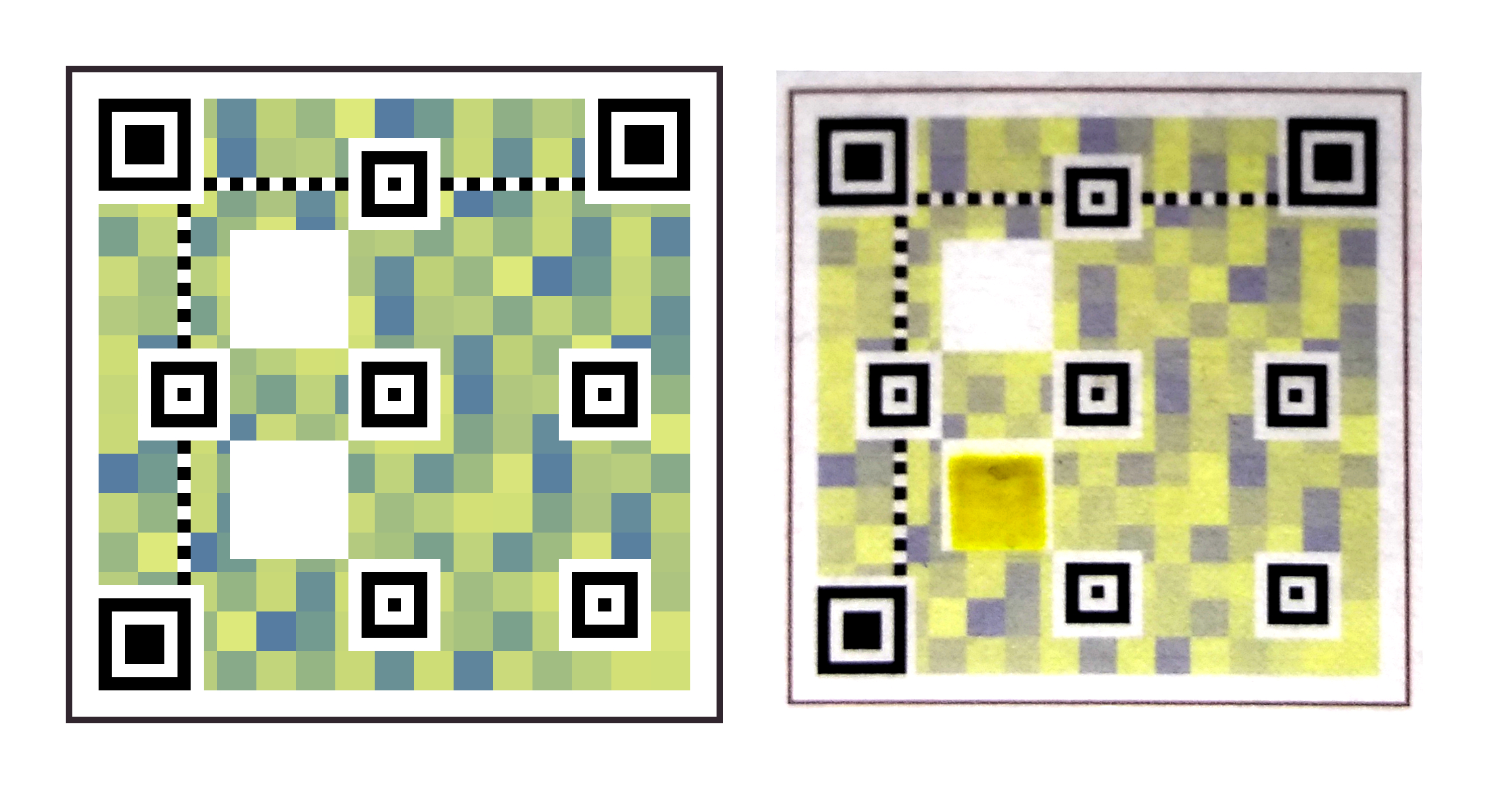

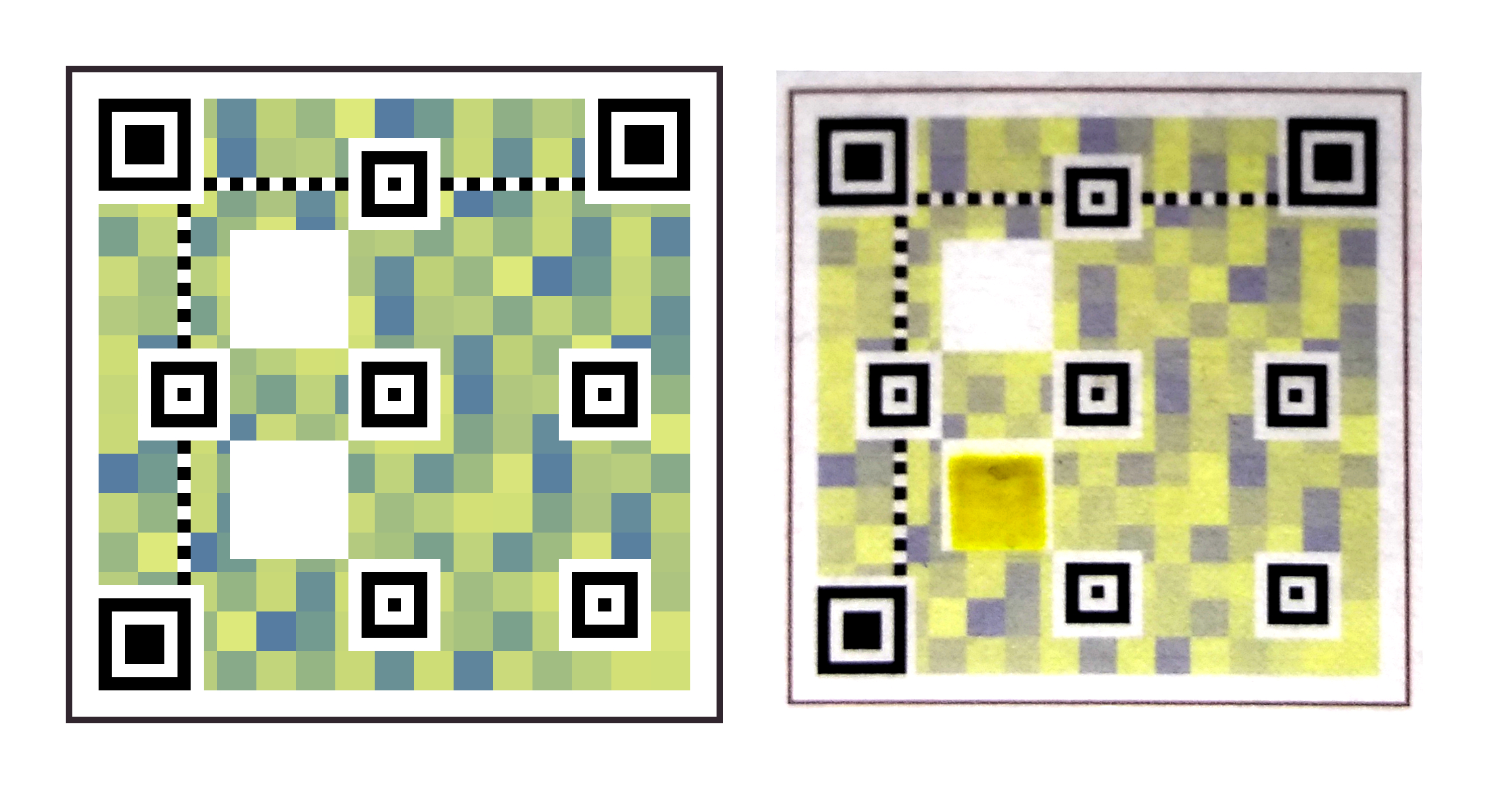

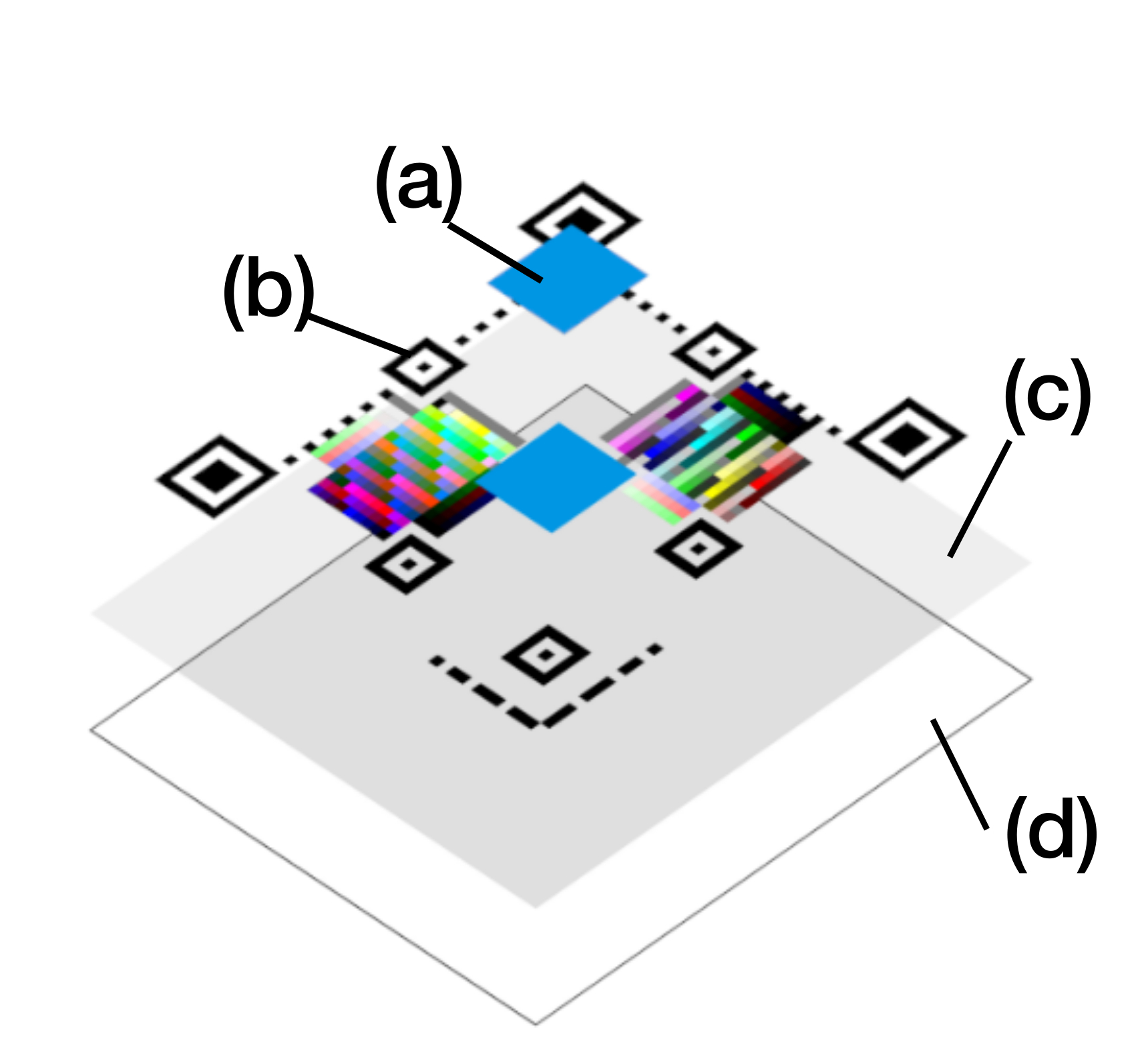

To solve those challenges, we proposed the creation of an integrated machine-readable pattern based on QR Codes, which would embed both the color correction patches and the colorimetric indicators patches. And, those embeddings ought to be back-compatible with the QR Code standard, to maintain the data storage capabilities of QR Codes for traceability applications [20]. A representation of this idea is portrayed in [fig:thesis_proposal].

The novelty of the idea led us to submit a patent application in 2018, which was granted worldwide in 2019, and now is being evaluated in National phases [23]. Moreover, we launched ColorSensing, a spin-off company from Universitat de Barcelona to develop further the technology in industrial applications [24].

The strong points of the back-compatible proposal were:

the use of pre-existent computer vision algorithms to locate QR Codes, freeing the designed pattern of redundant computer vision features, as those ’circles’ seen outside the GasApp card ([fig:thesis_proposal]), which are redundant with the finder patterns of the QR Code (corners of the QR Code);

the reduced size presented by a QR Code ([fig:thesis_proposal]), the original GasApp proposal was set to a business card size (\(\mathrm{3.5}\) \(\times\) \(\mathrm{2.0}\) inches), while our QR Code proposal is smaller (\(\mathrm{1}\) \(\times\) \(\mathrm{1}\) inch);

reducing the barrier between the new technology and the final users, as the back-compatible proposal maintains the mainstream standard of the QR Codes. By this, one could simply encode a desired URL in the QR Code data alongside with the color information and always be able to redirect the final user to a download link of the proper reader, which enables the color readout;

and, the capacity to increase the color references embedded in a color chart, while also reducing the global size of the chart, (e.g. the usual size of a commercial ColorChecker is about 11 \(\times\) 8.5 inches, and it encodes 24 color patches), using modern machine-readable standard (such as QR Codes as an encoding base) enables a systematic path to increase the capacity per surface unit, and subsequently according to color correction theory, leading to a better color corrections having more color references.

All in all, this thesis proposes a new approach to automate color correction for colorimetry applications using barcodes; namely, Color QR Codes featuring colorimetric indicators. Let us enumerate the objectives of the thesis:

To capture machine-readable patterns placed on top of challenging surfaces, which are captured with handheld smartphones. These surfaces can be non-rigid surfaces present in real-world applications, such as: bottles, packaging, food, etc.

To define a back-compatible QR Code modification method to extend QR Codes to act as color charts, which back-compatibility ensures that the digital data of the QR Code remains readable during the whole modification process.

To achieve image consistency using color charts for any camera or light setup, enabling colorimetric applications to yield quantitative results, and doing so by specifying a color correction method that takes into account arbitrary modifications in the captured scene, such as: light source, smartphone device, etc.

To demonstrate a specific application of the technology based on colorimetric indicators, where the accumulated results from objectives I to III are applied.

In this thesis, we tackled the above-mentioned objectives. Prior to that, we introduce in a dedicated chapter the backgrounds and methods applied to this thesis. Then, we present four thematic chapters related to each one of the objectives. These chapters were prepared with a coherent structure: a brief introduction, a proposal, an experimental details section, the results presentation and the conclusion discussion. Later, a general conclusion chapter was added to close the thesis. Let us briefly present the content of each thematic chapter.

First, in [ch:4] we reviewed the state-of-the-art method to extract QR Codes from different surfaces. Then, we focused on a novel approach to readout QR Codes on challenging surfaces, such as those found in food packages, cylinders or any non-rigid plastic [25], [26].

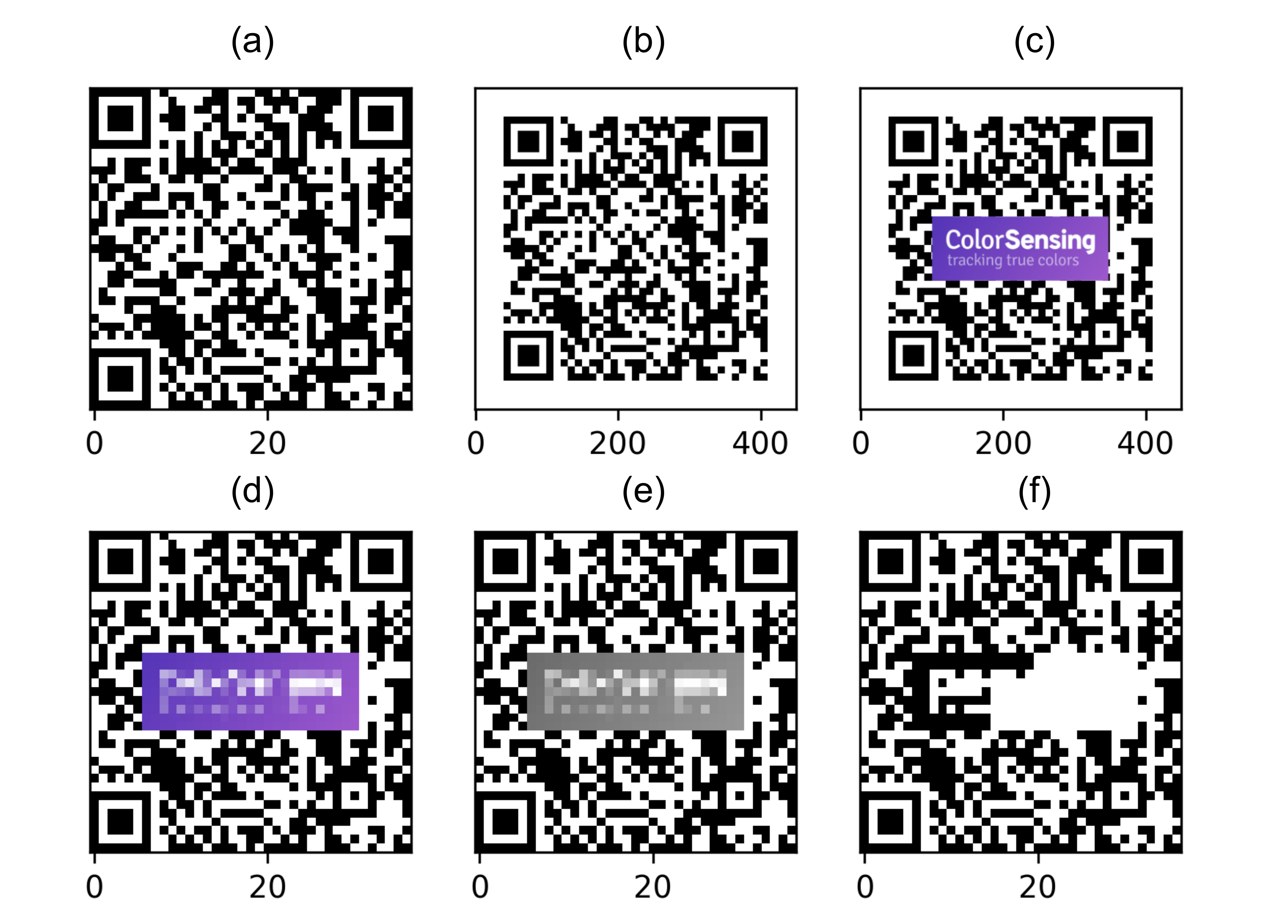

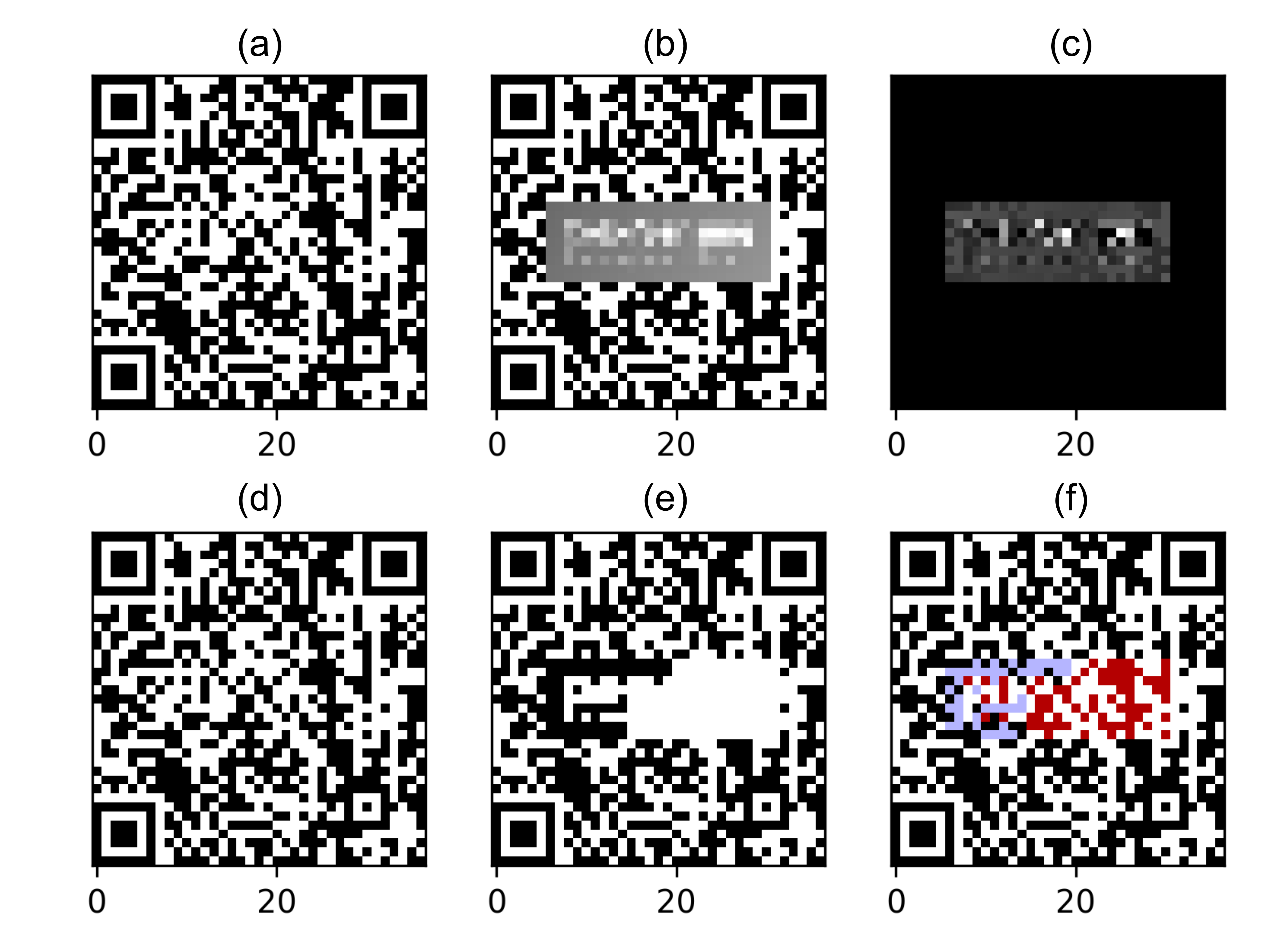

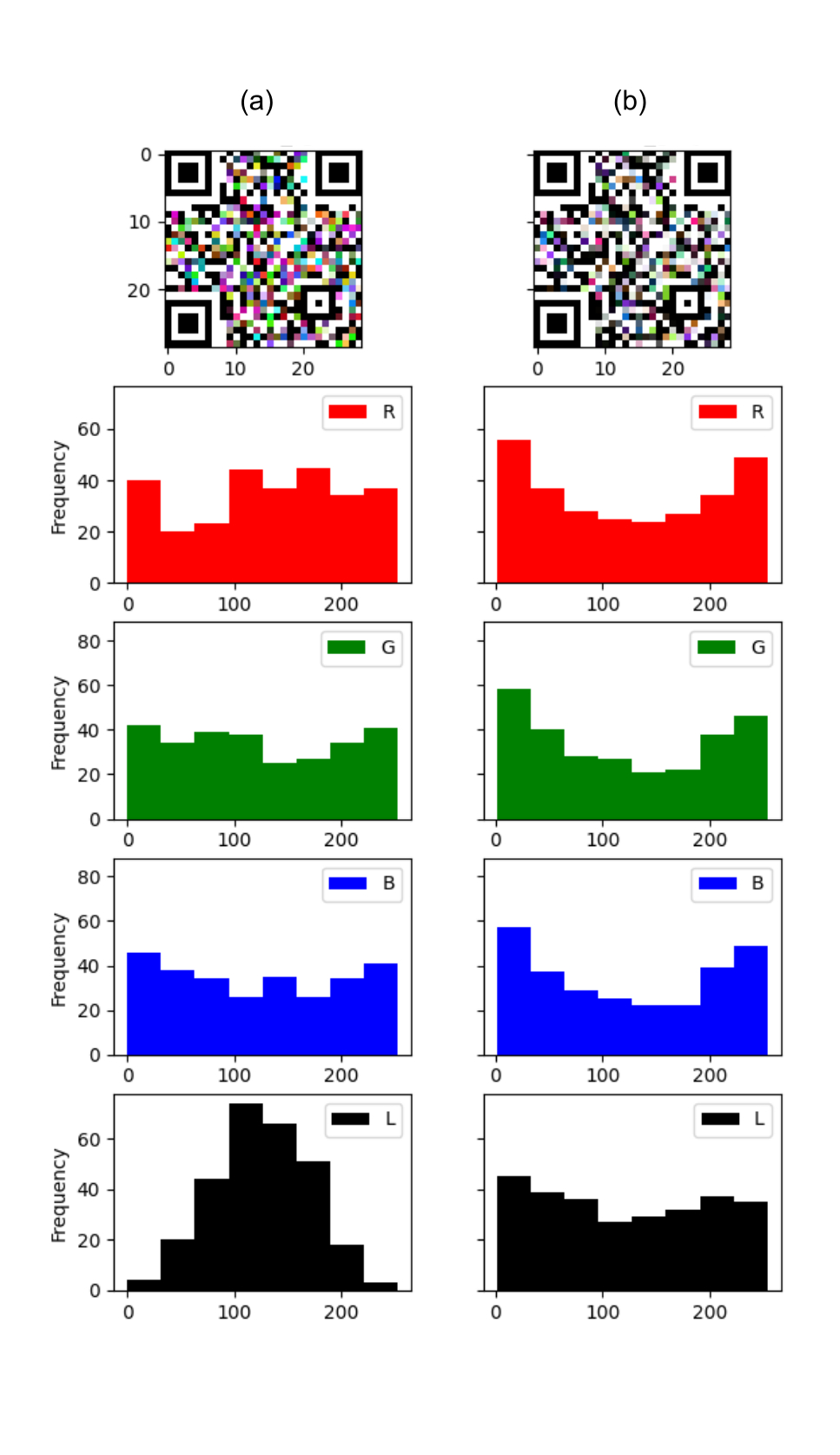

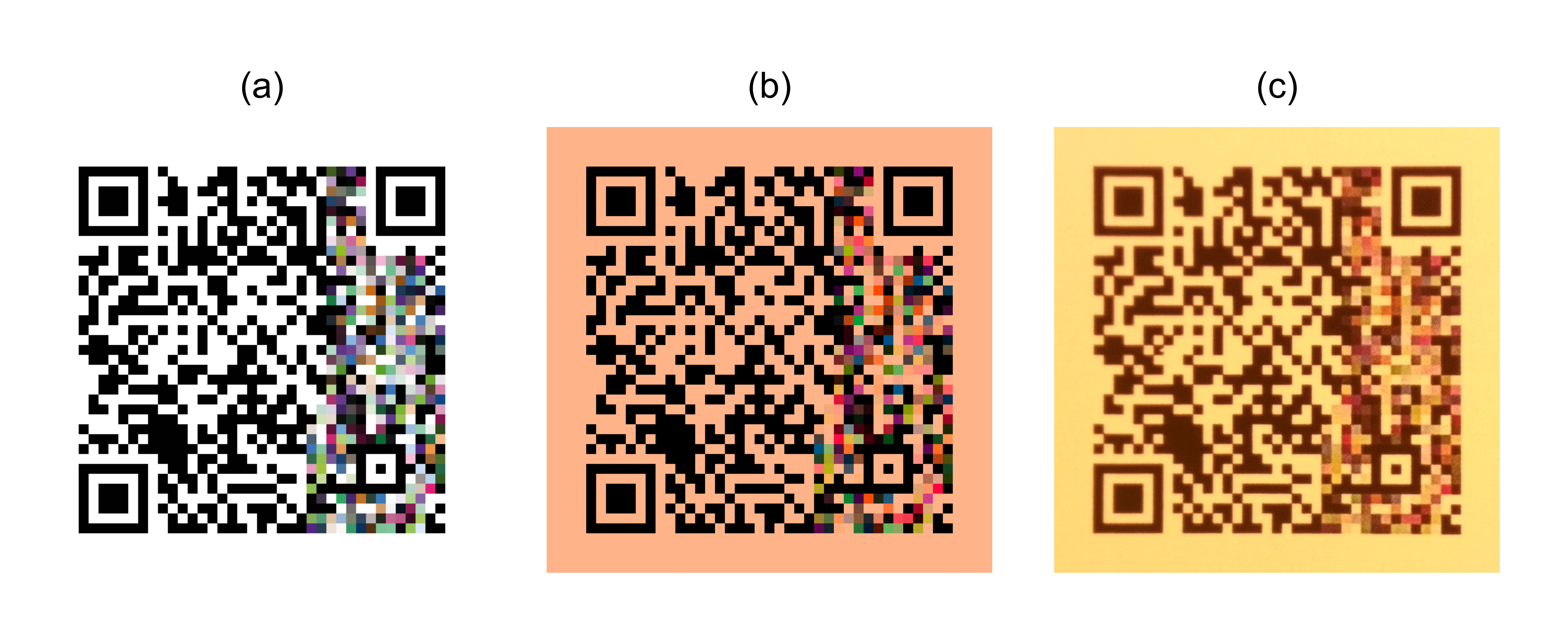

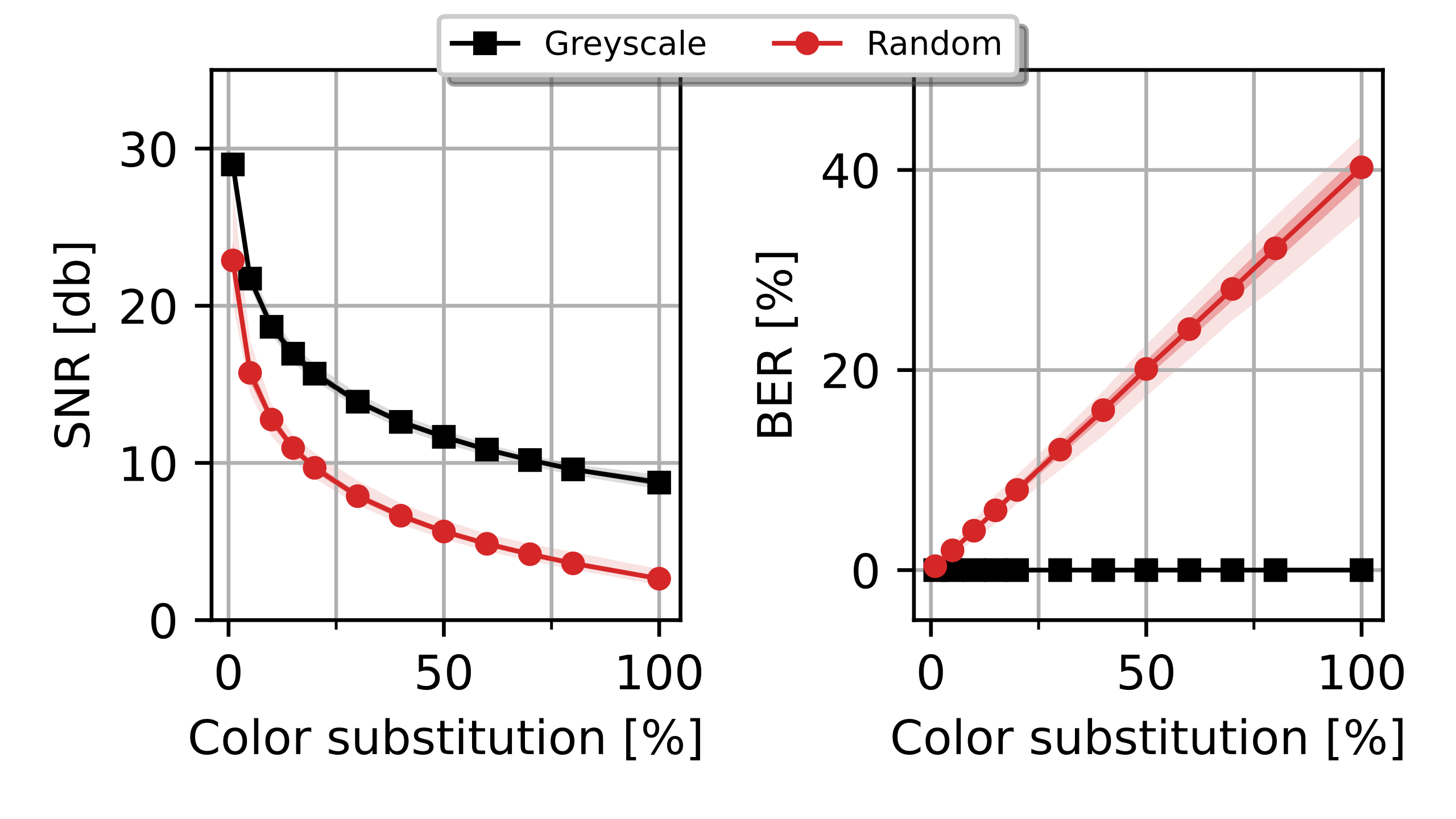

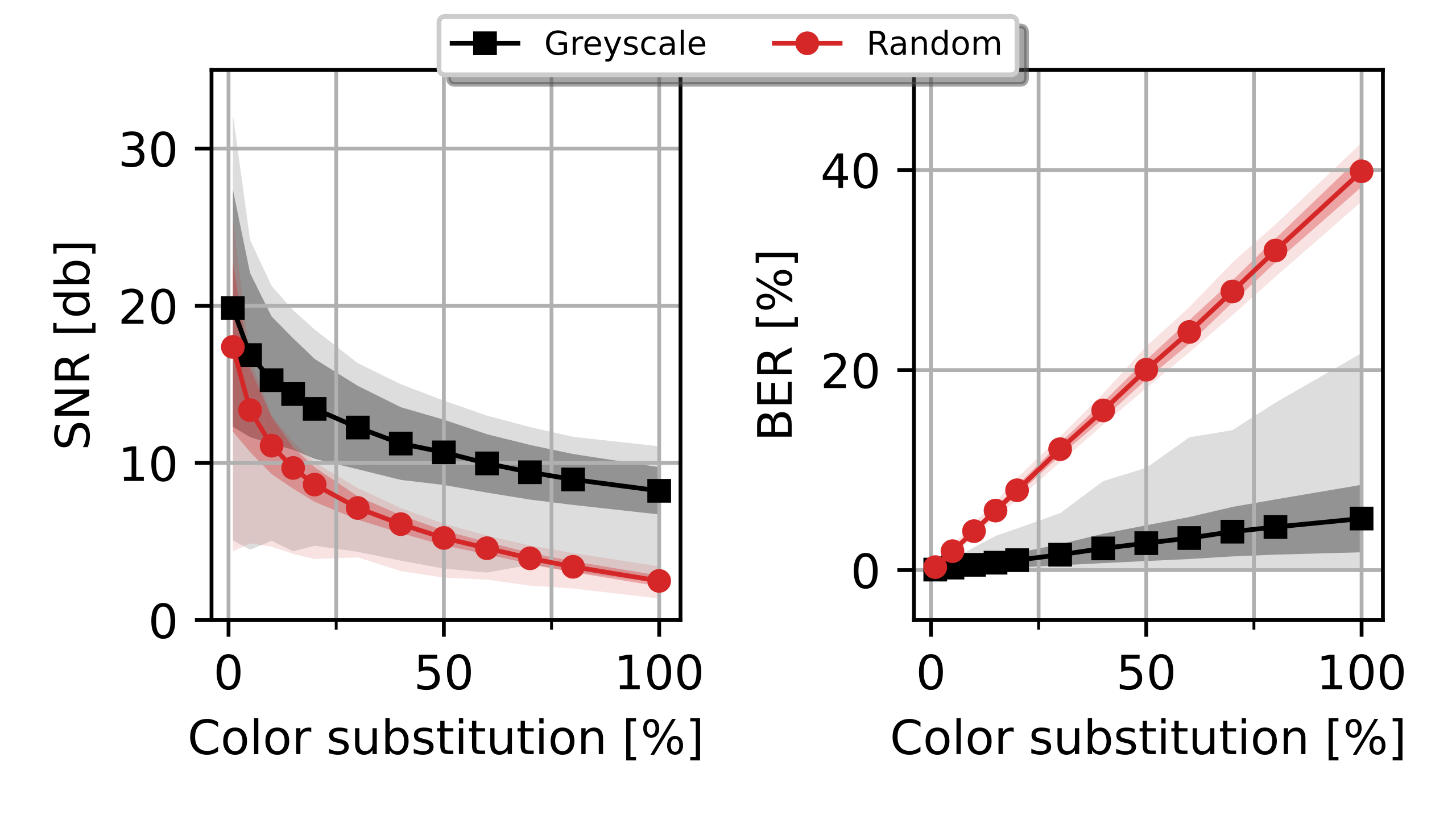

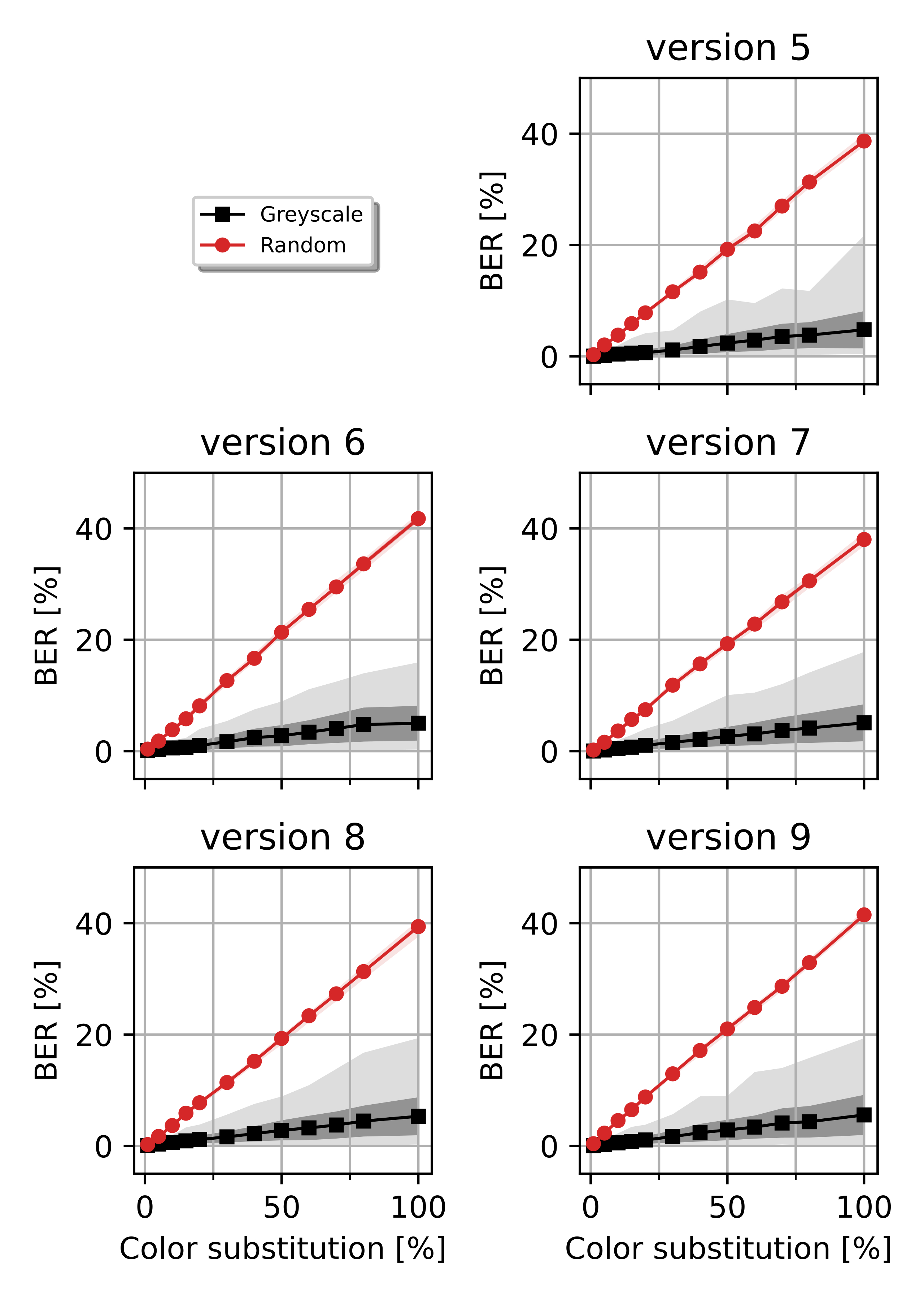

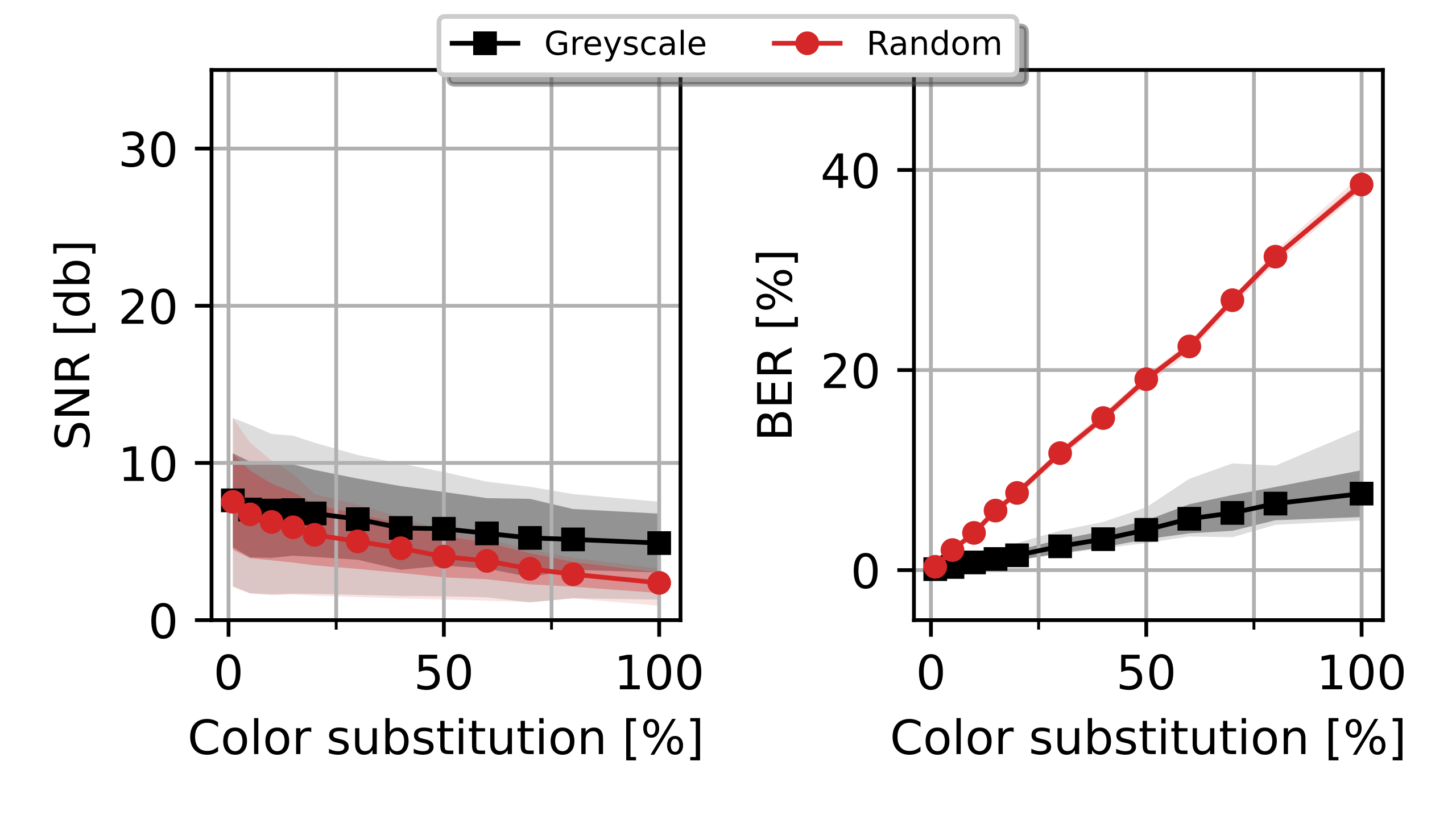

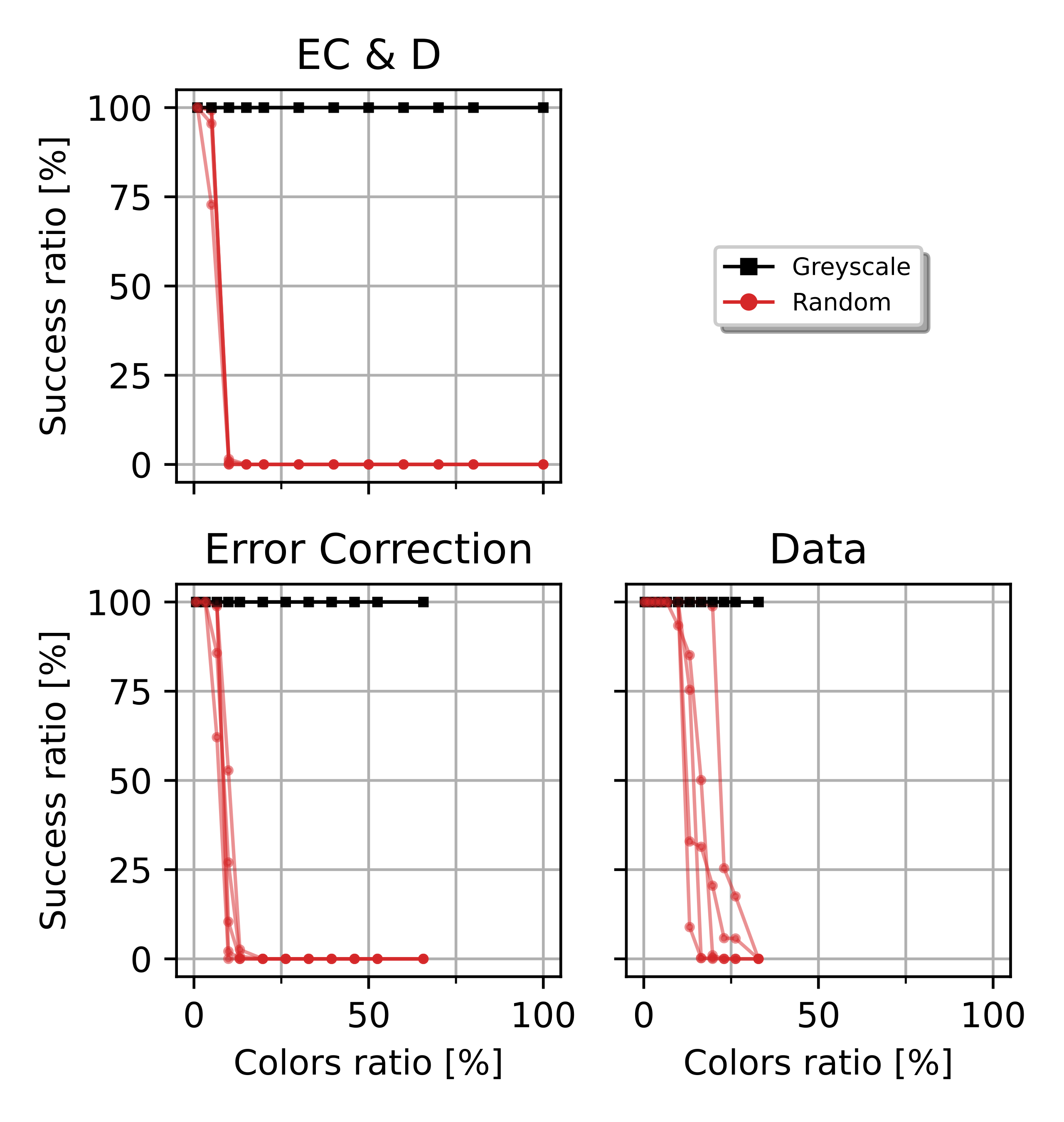

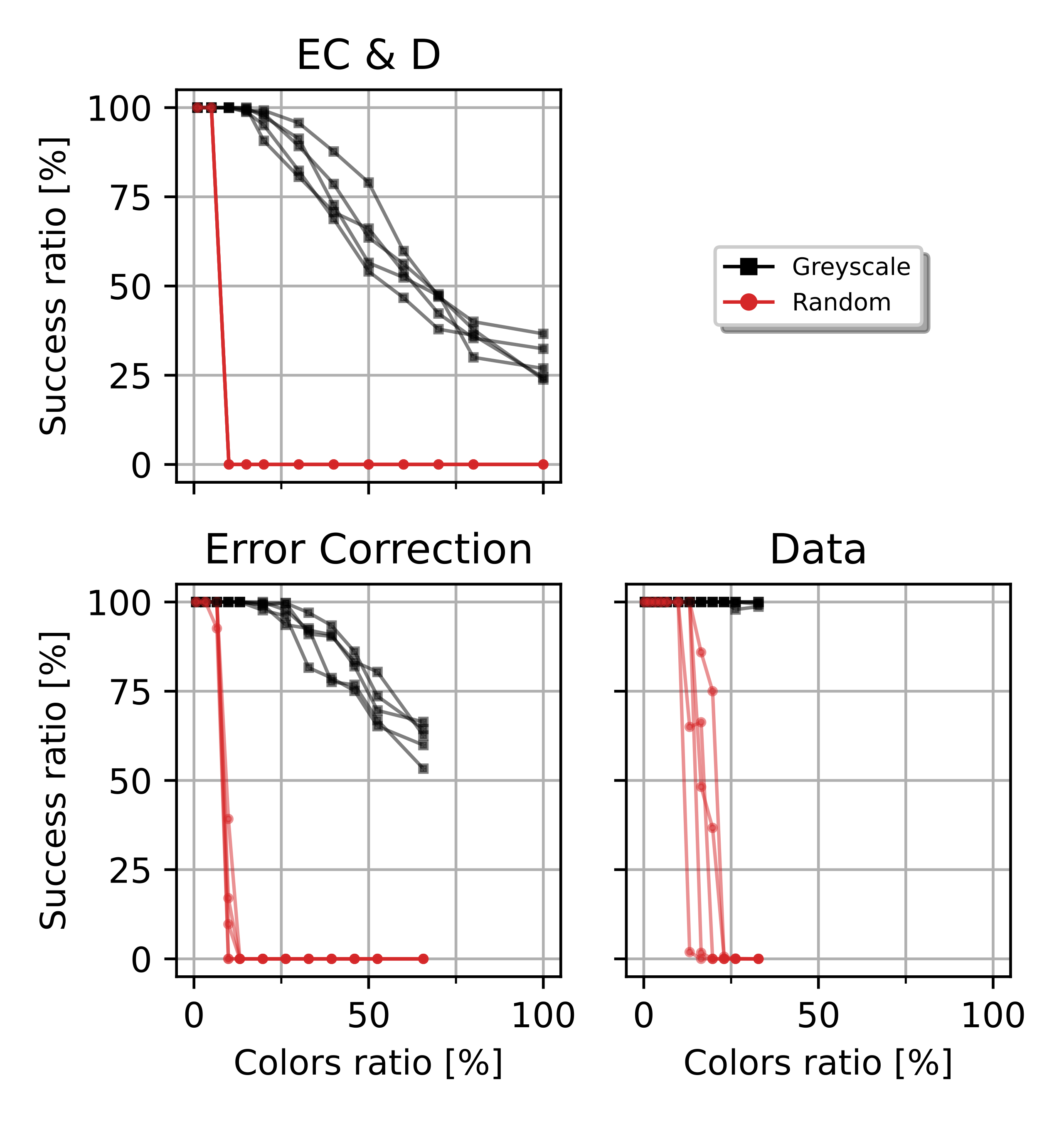

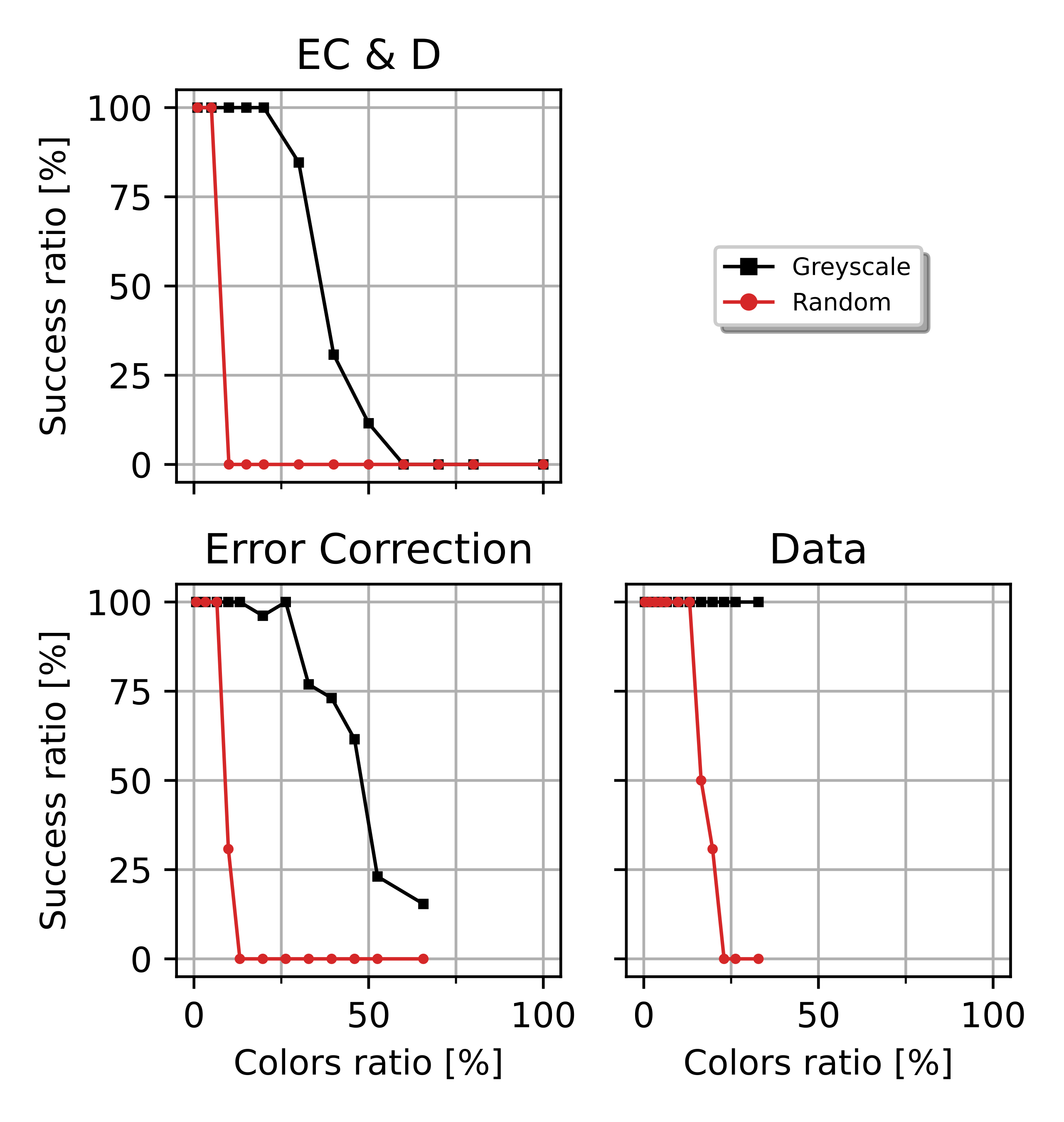

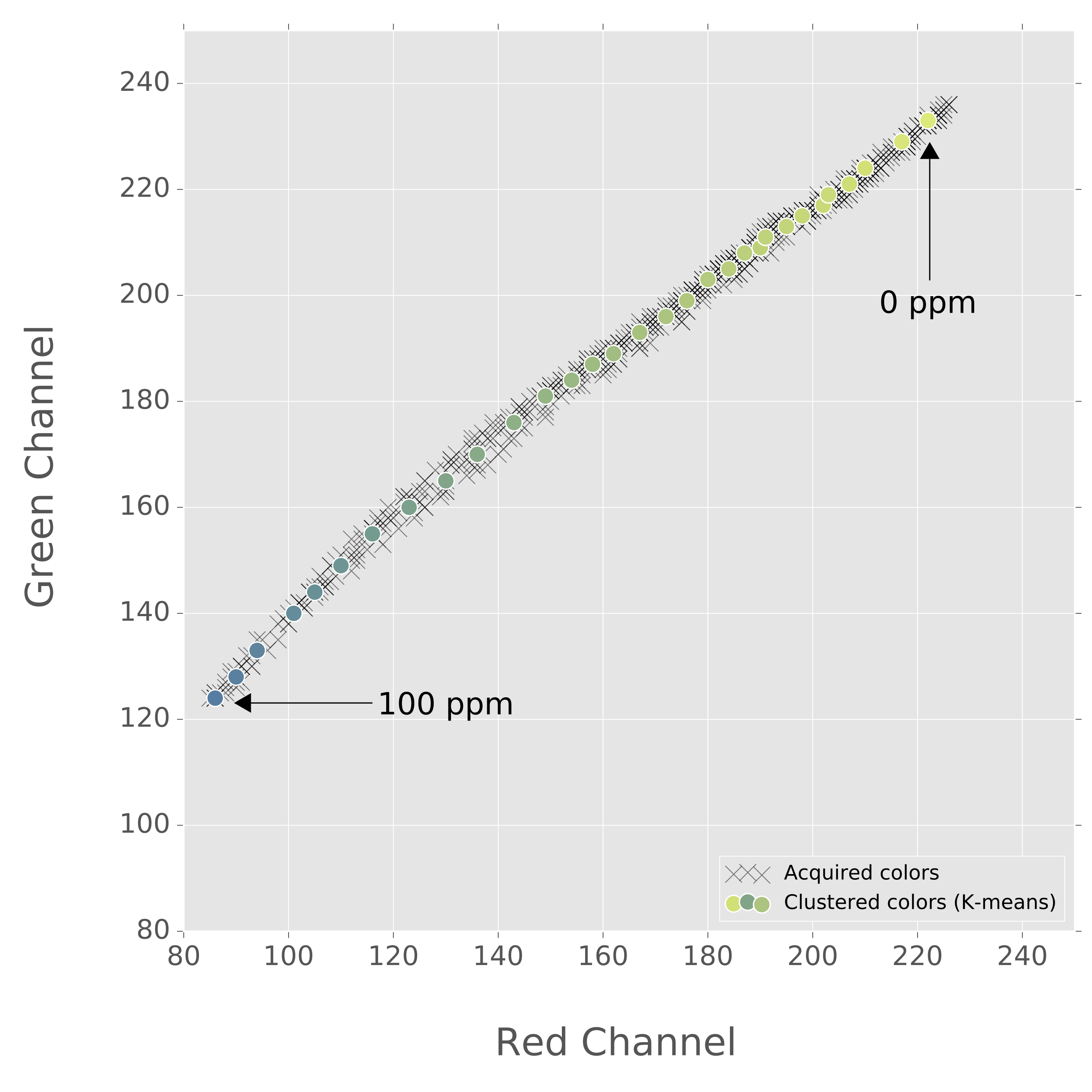

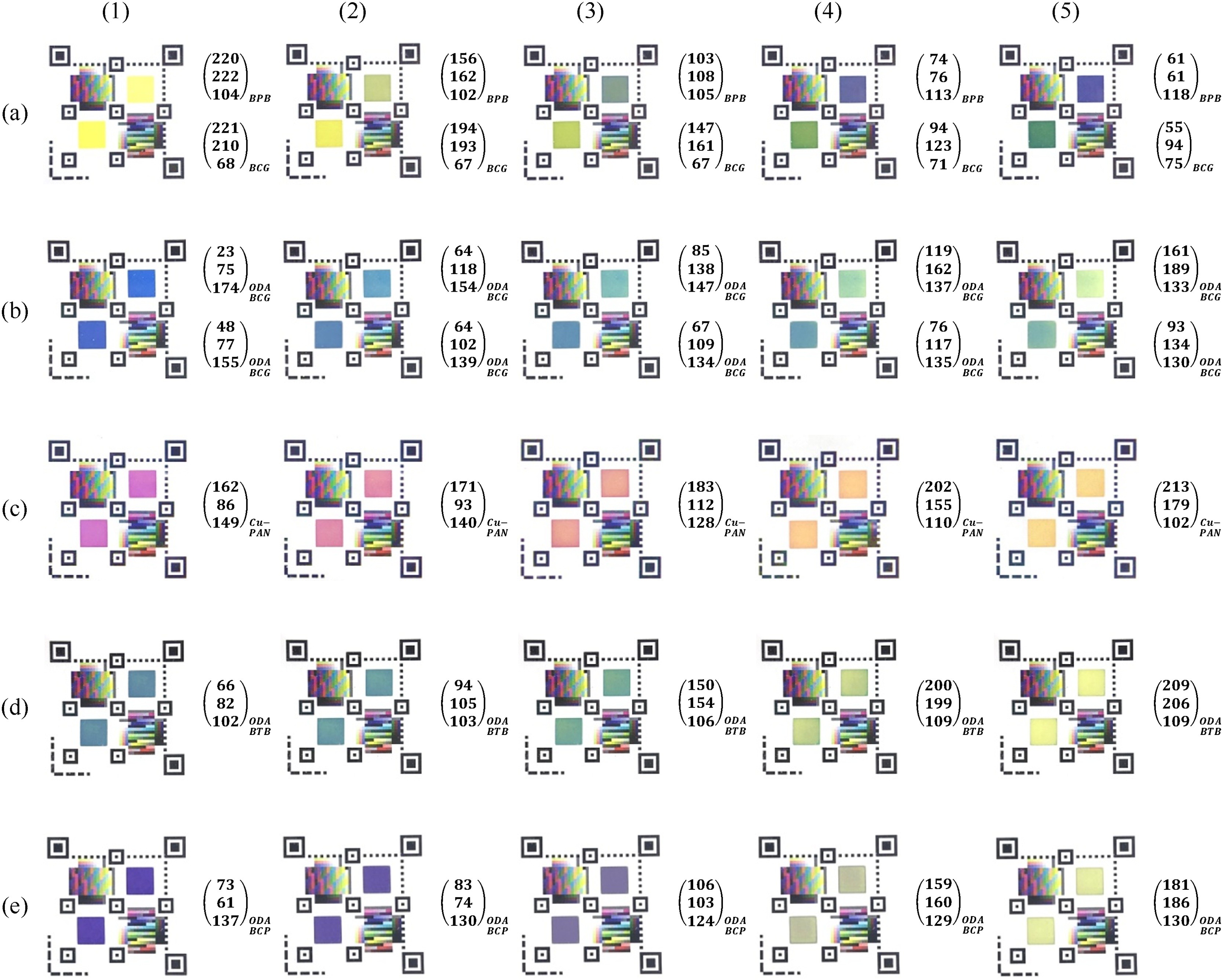

Second, in [ch:5] we introduced the main proposal of the thesis, the back-compatible Color QR Codes [23]. Here, we also introduced not only the machine-readable pattern proposal but also we benchmarked the different possible approaches to embed colors in a QR Code by taking into account its data encoding (i.e. which colors are to be embedded where, etc.) and how it affected the QR Code final readability.

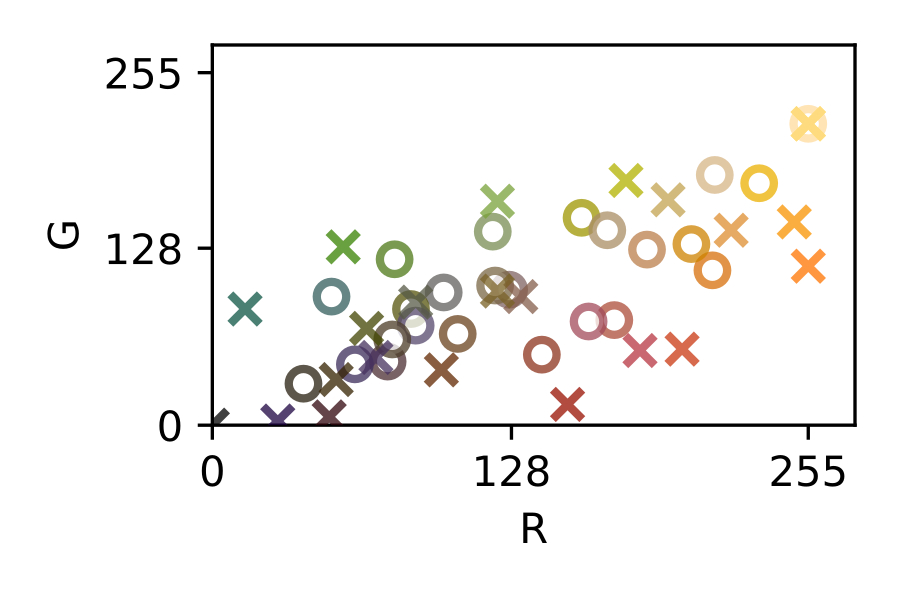

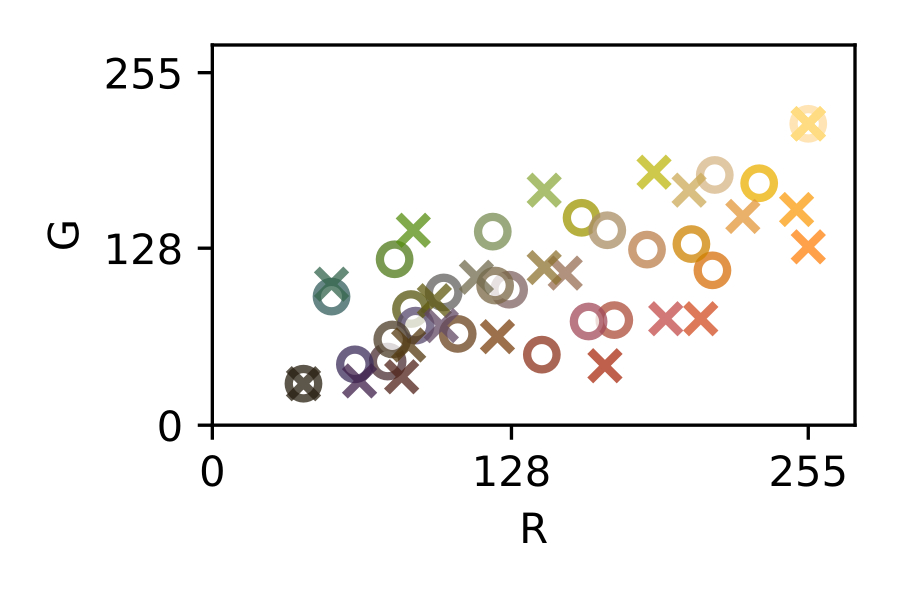

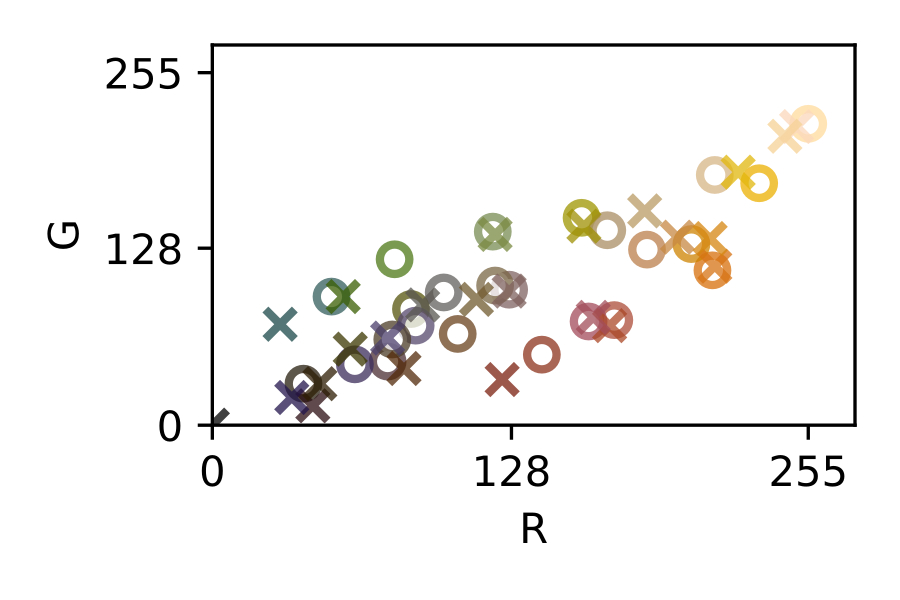

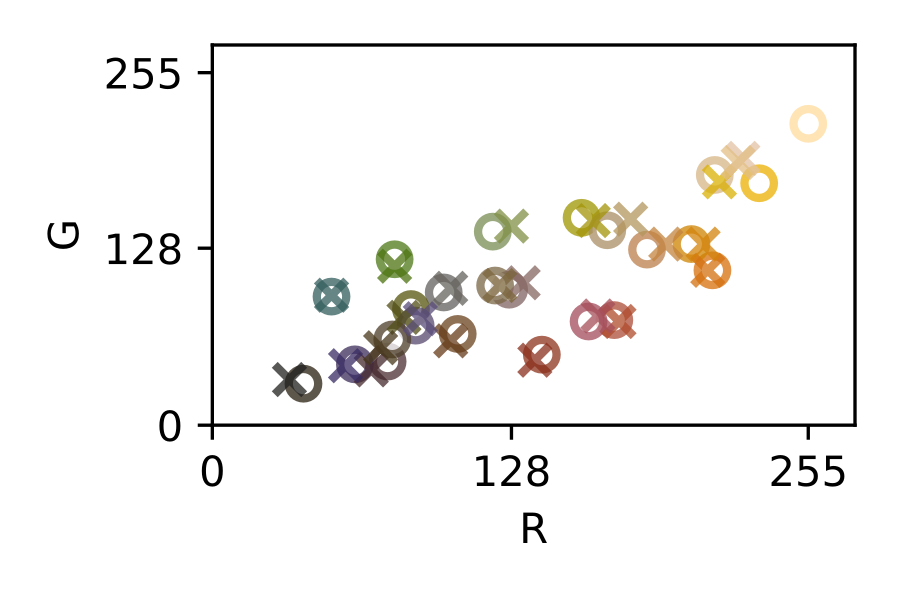

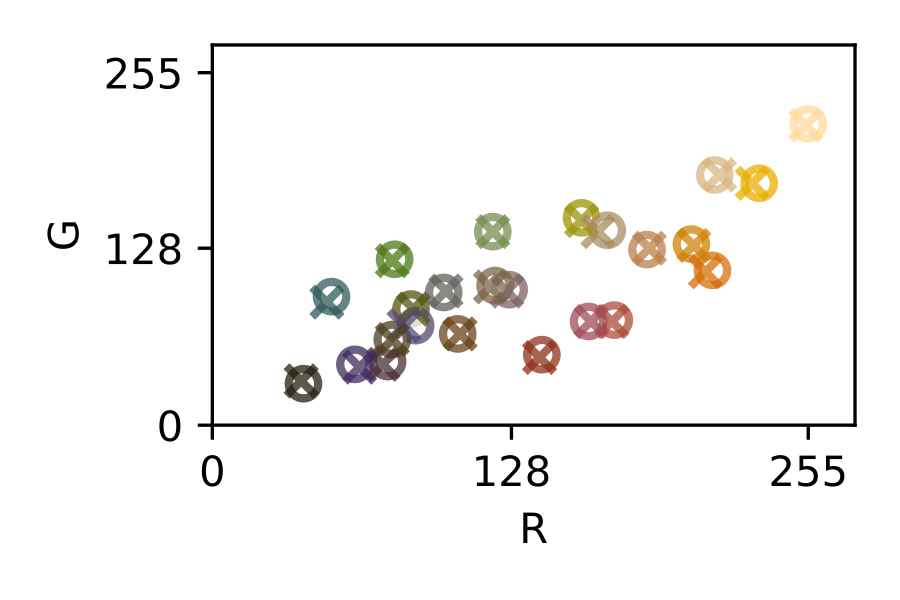

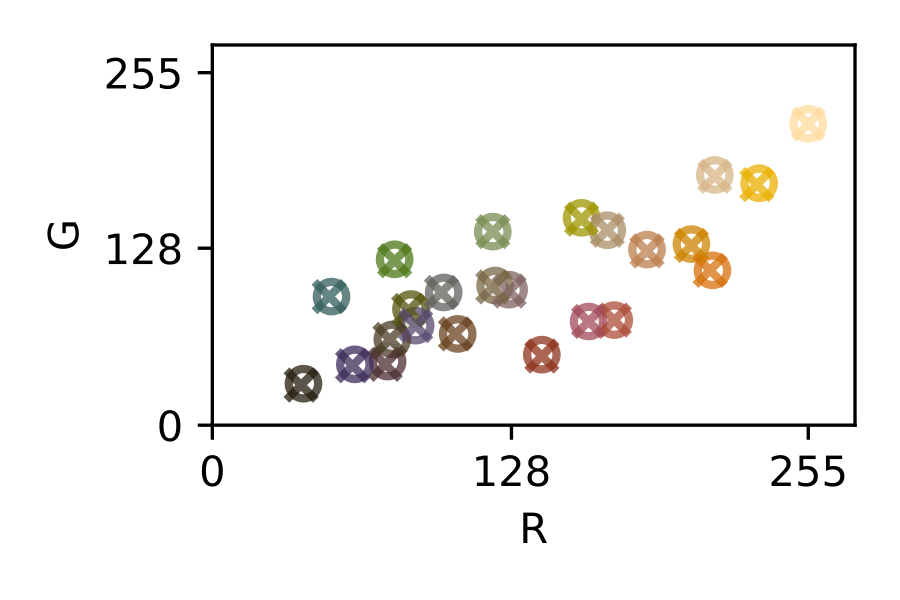

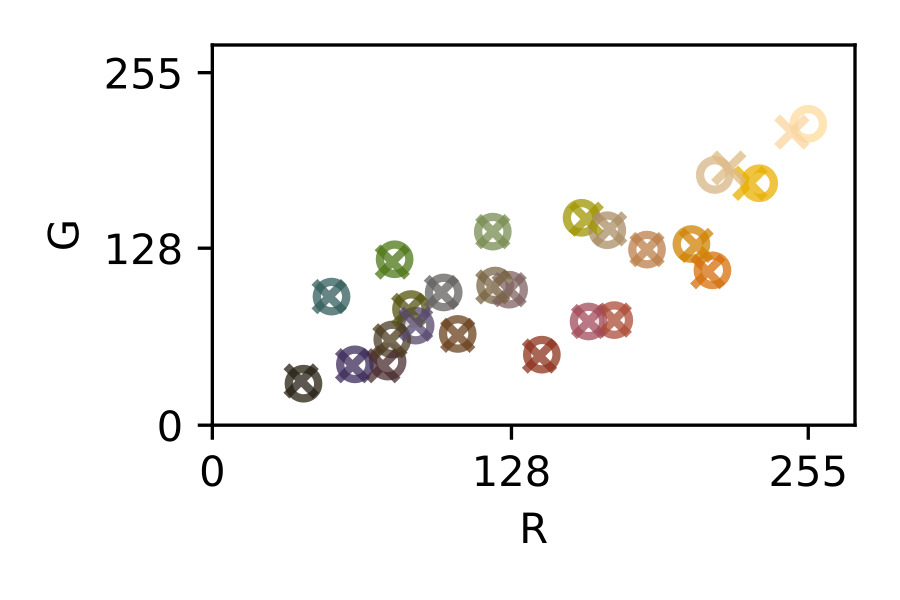

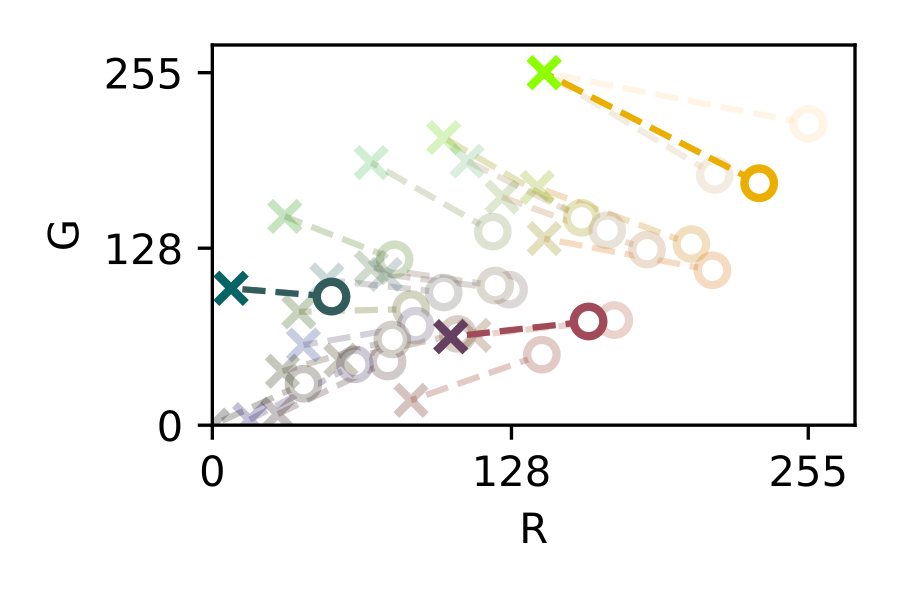

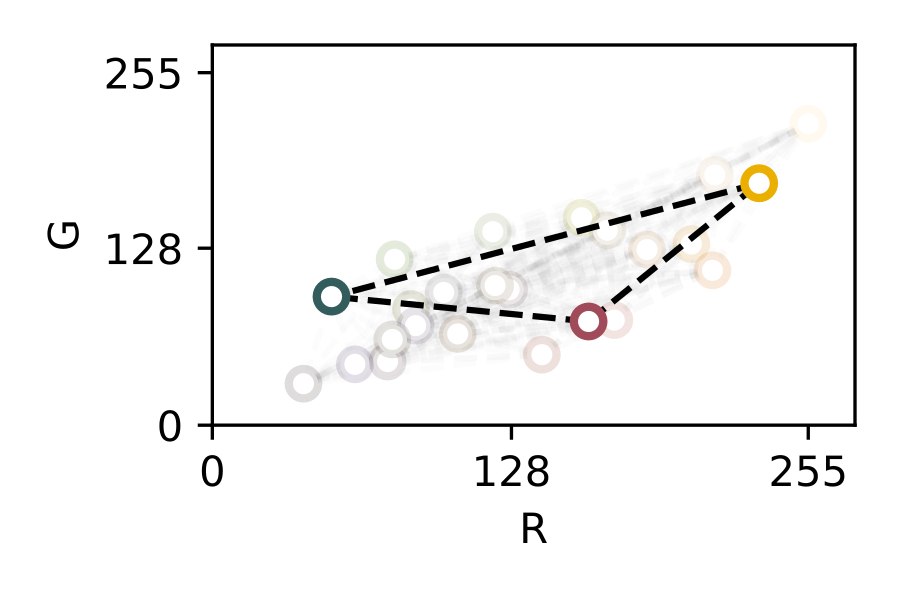

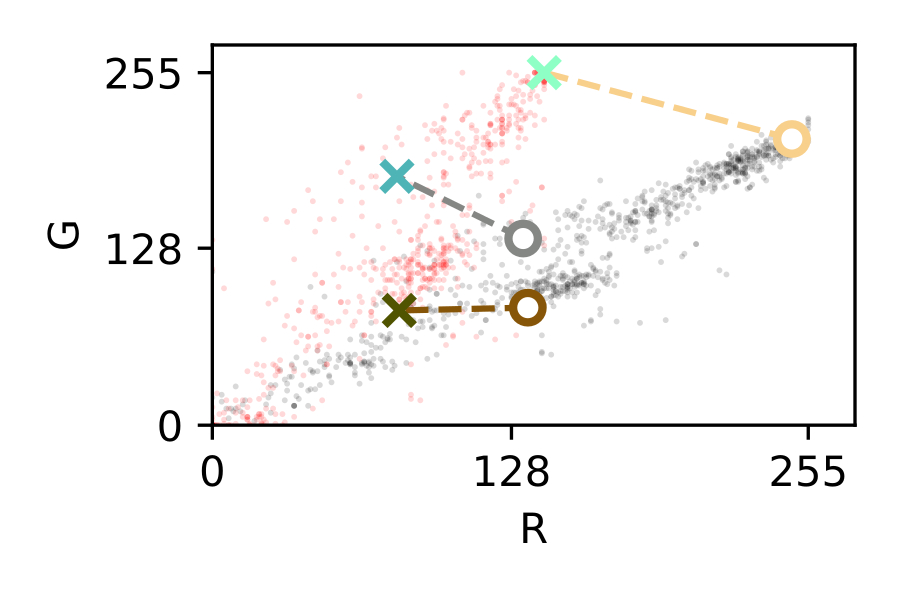

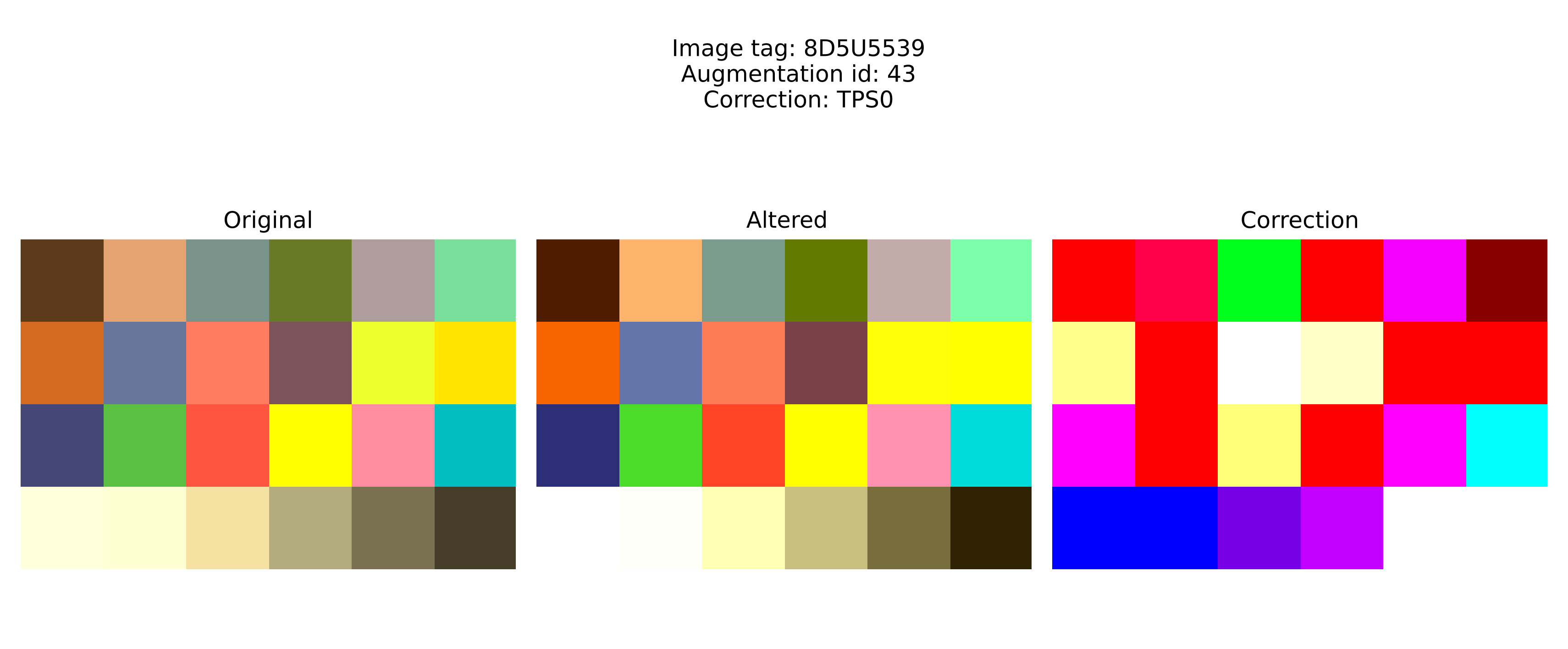

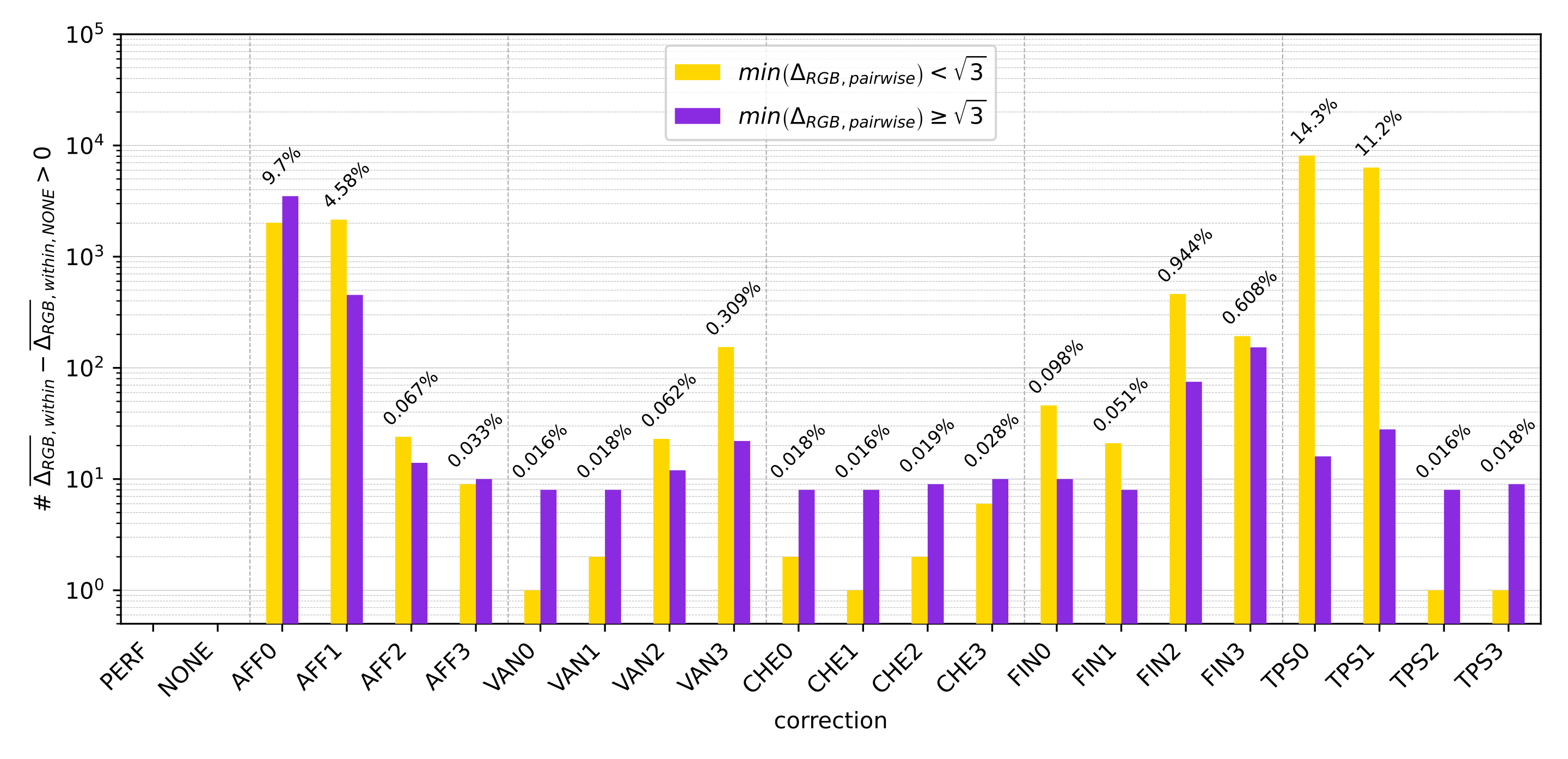

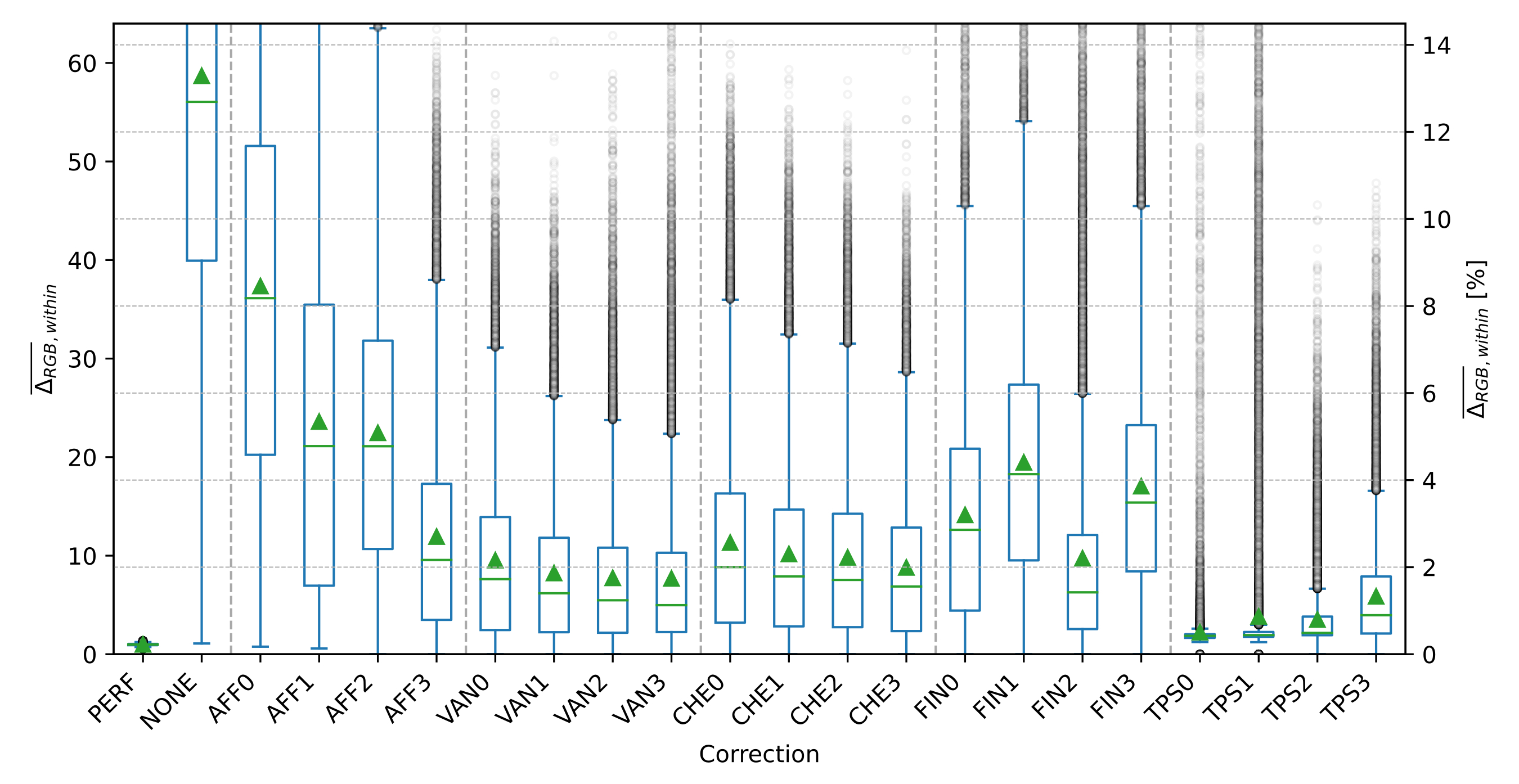

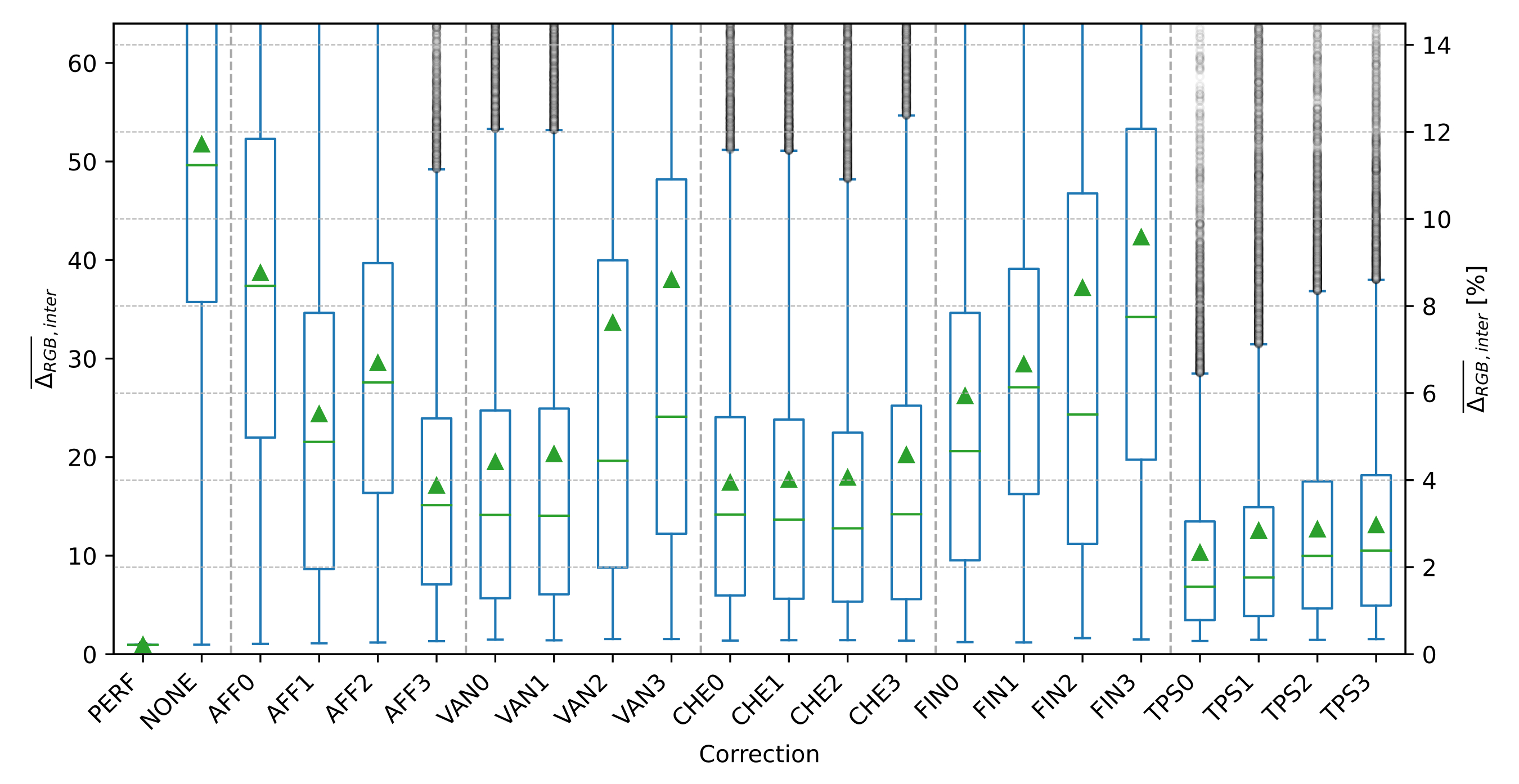

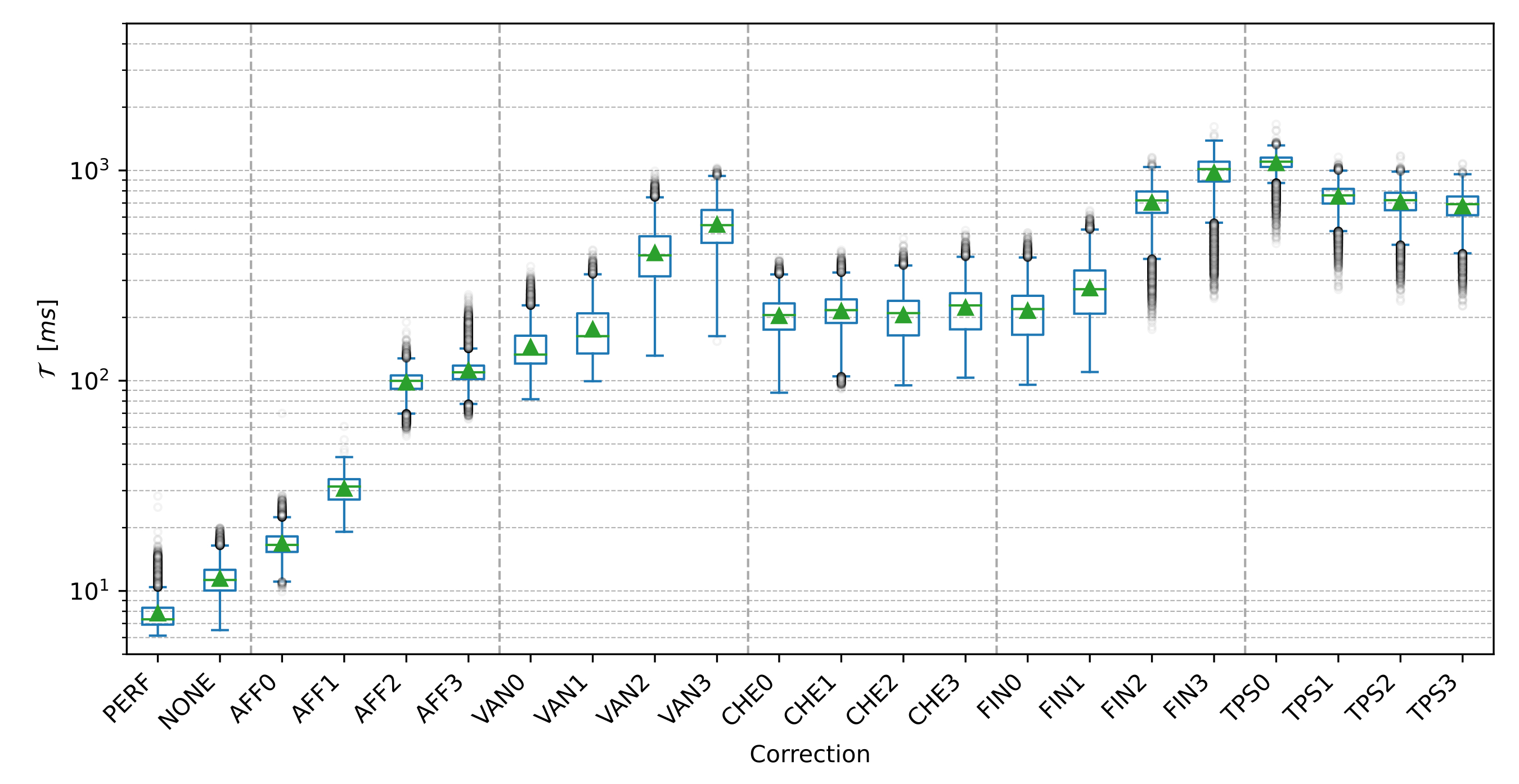

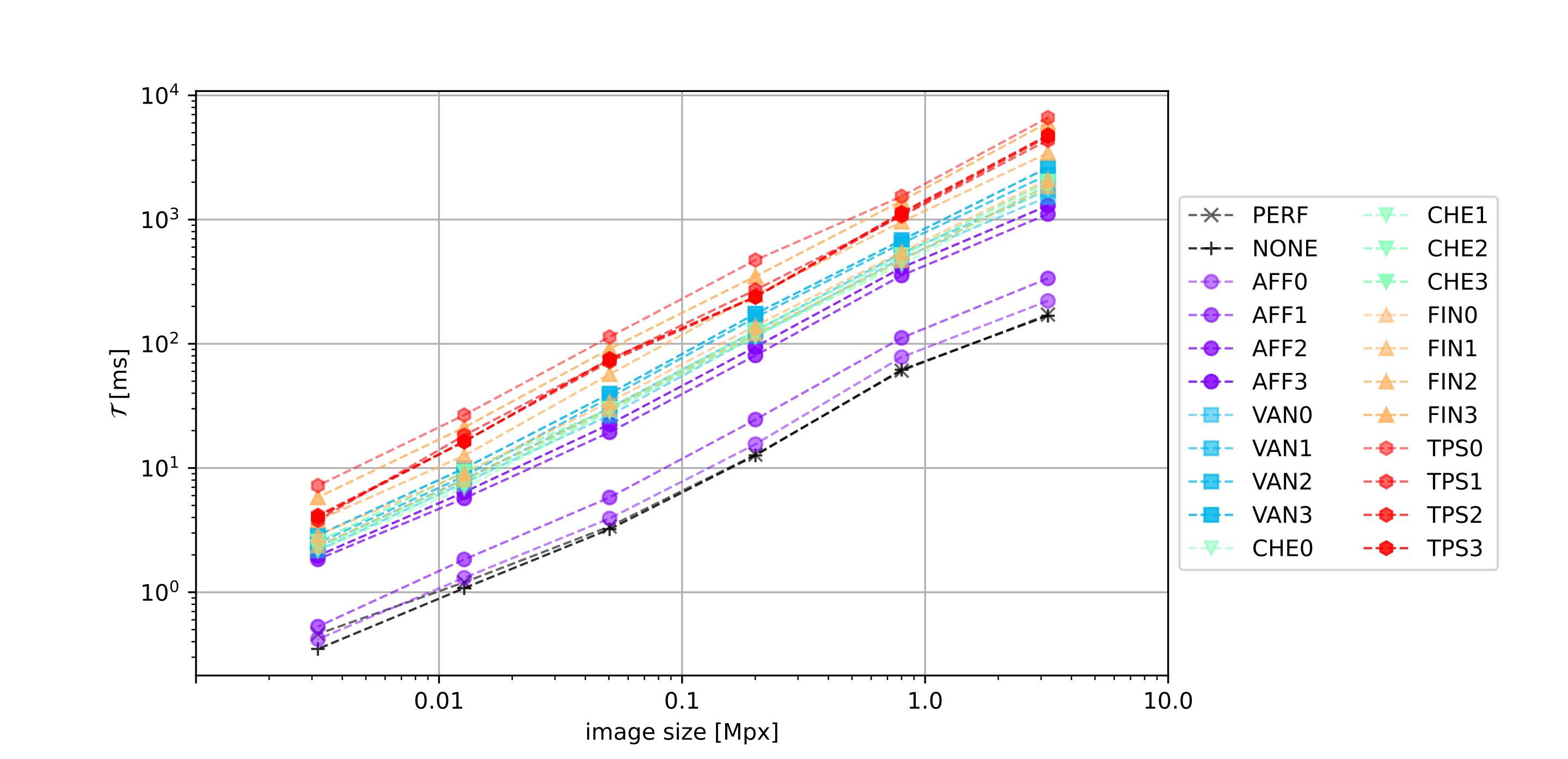

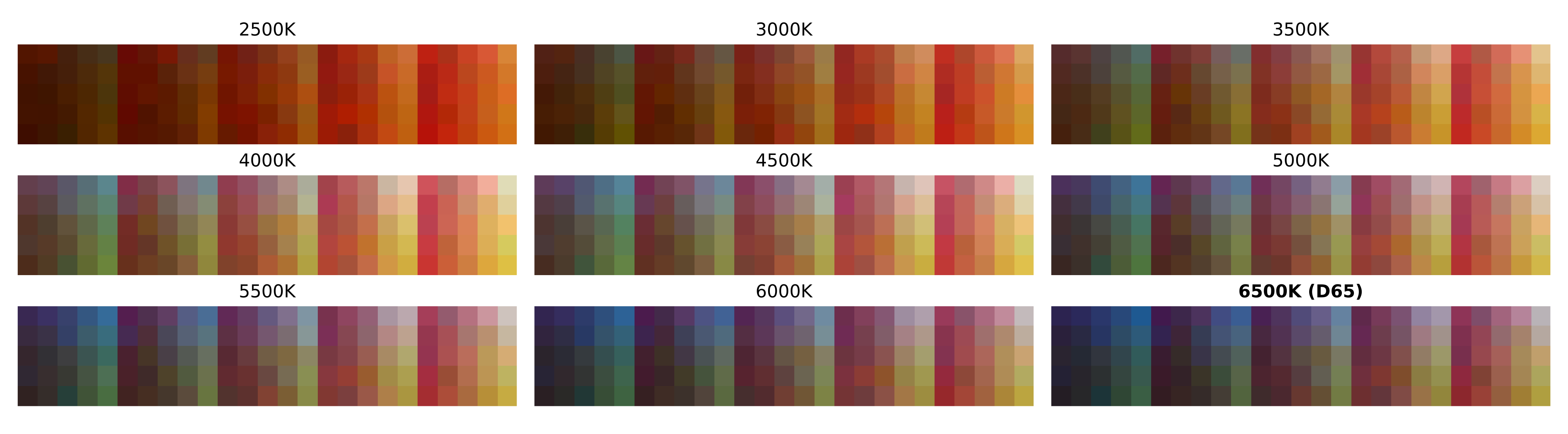

Third, in [ch:6] we sought for a unified framework of color correction based on affine [14], polynomial [27], [28], root-polynomial [28] and thin-plate splines [15] color corrections. Within that framework, we presented our new proposal for an improved TPS3D method to achieve image consistency.

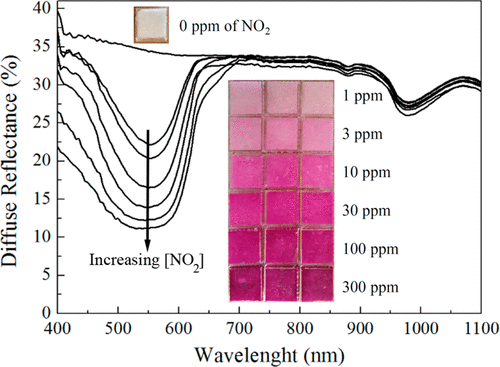

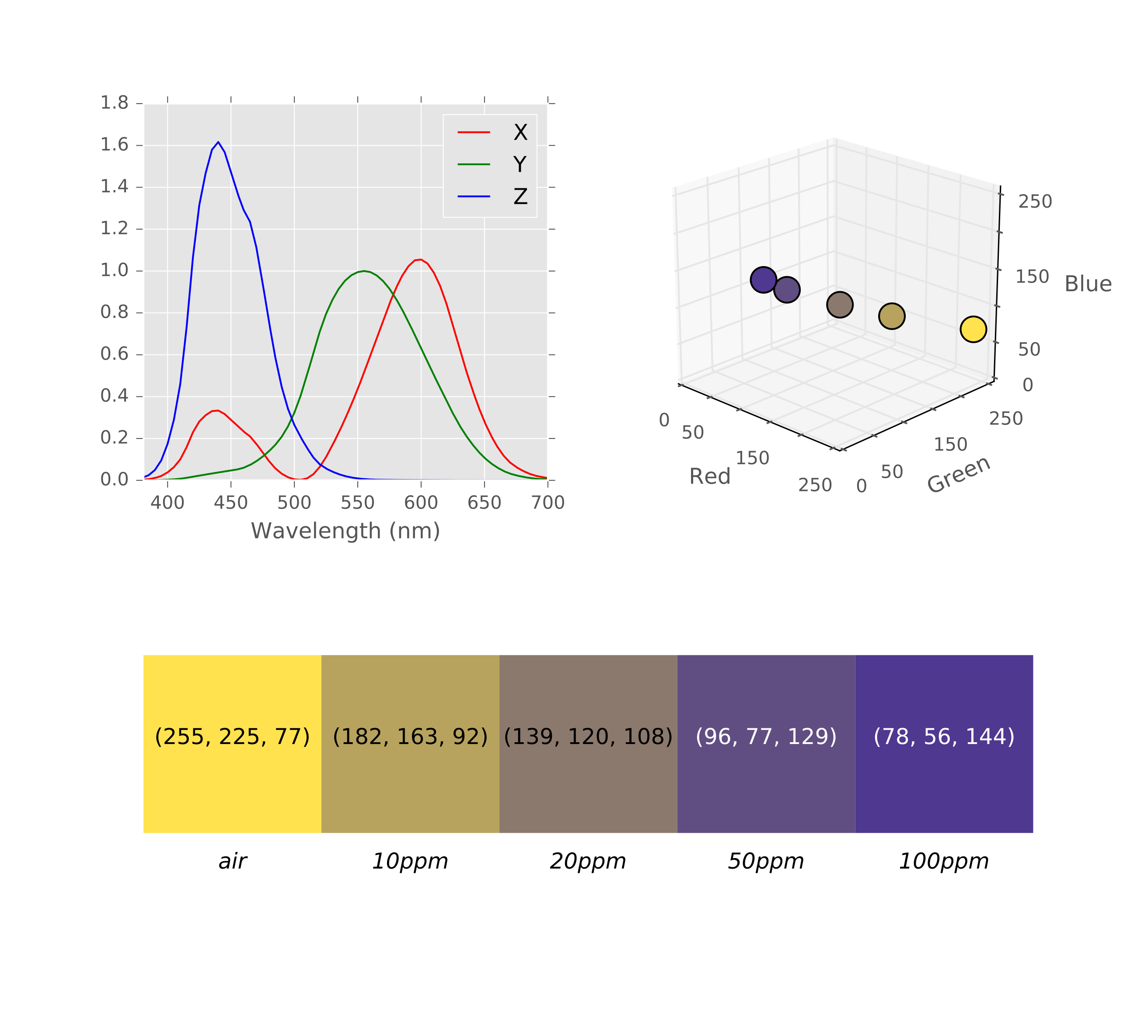

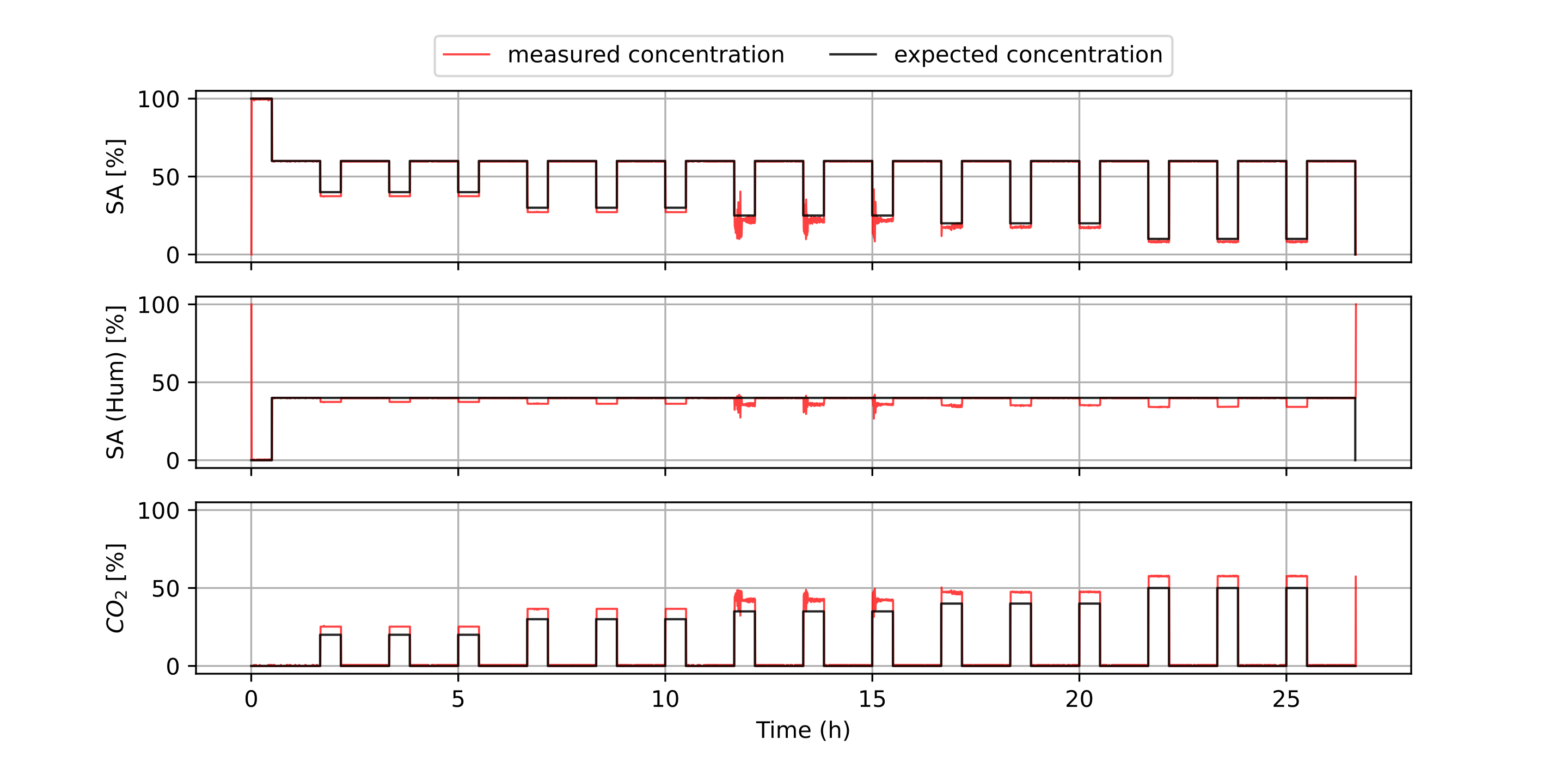

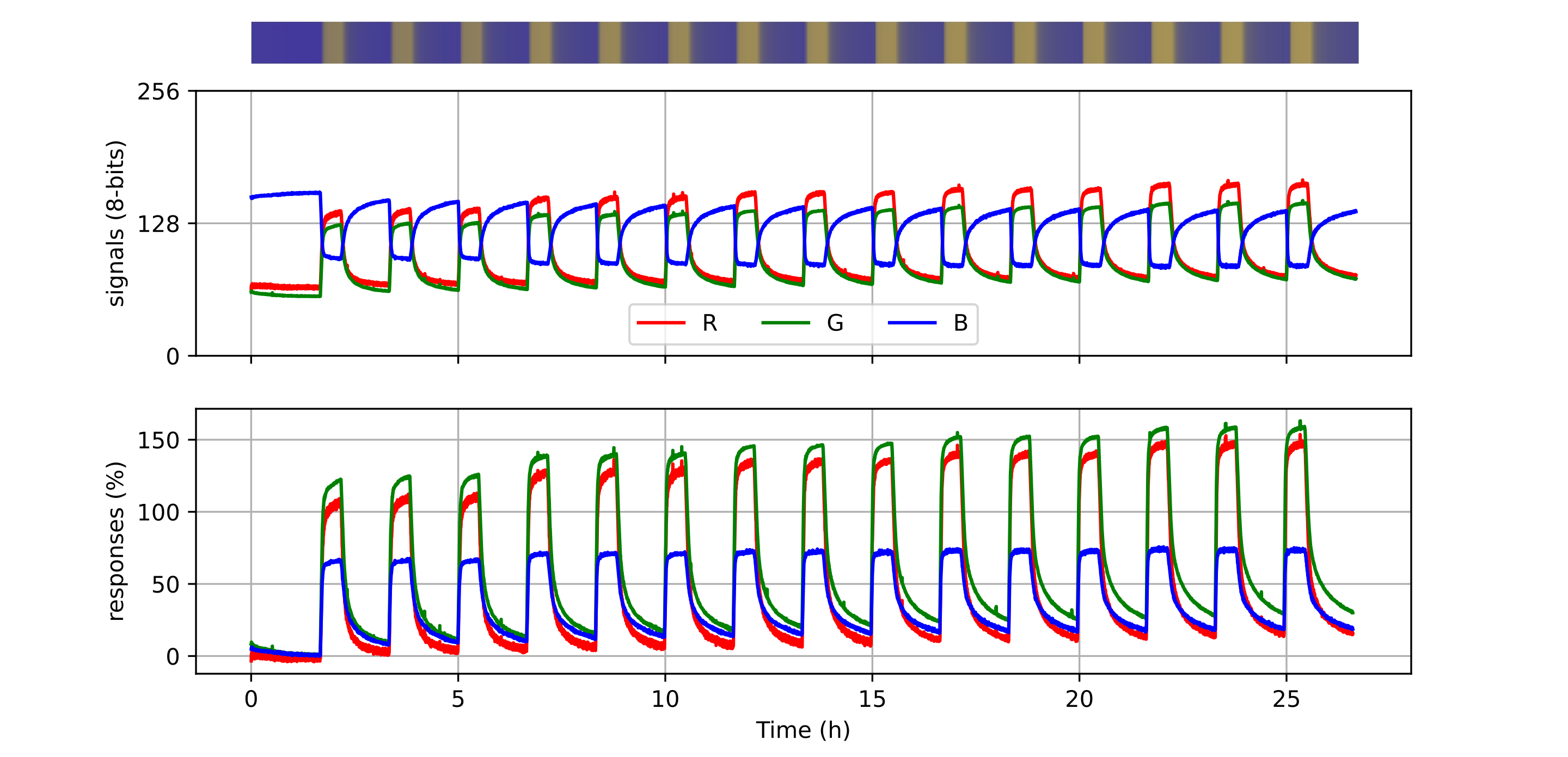

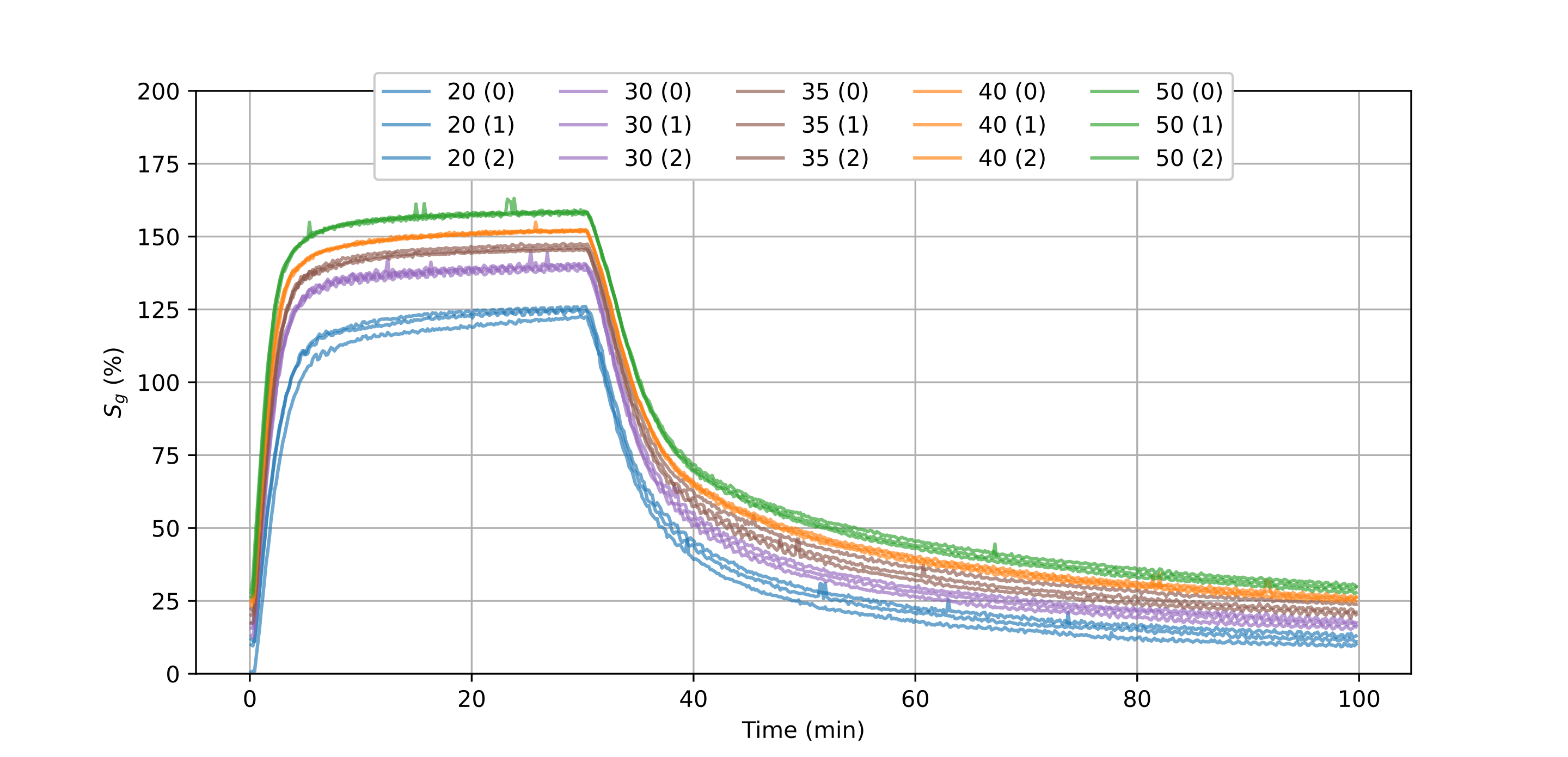

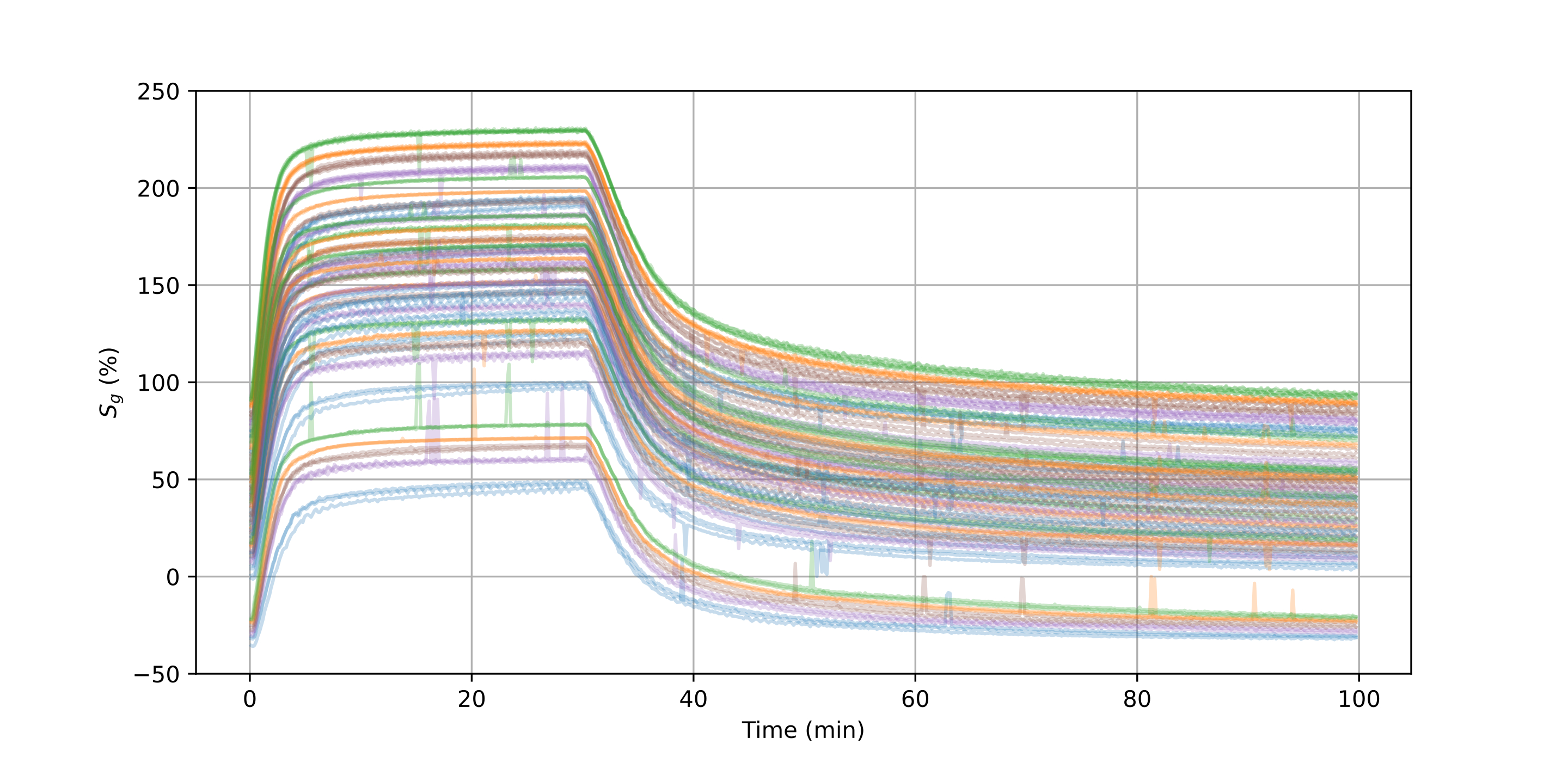

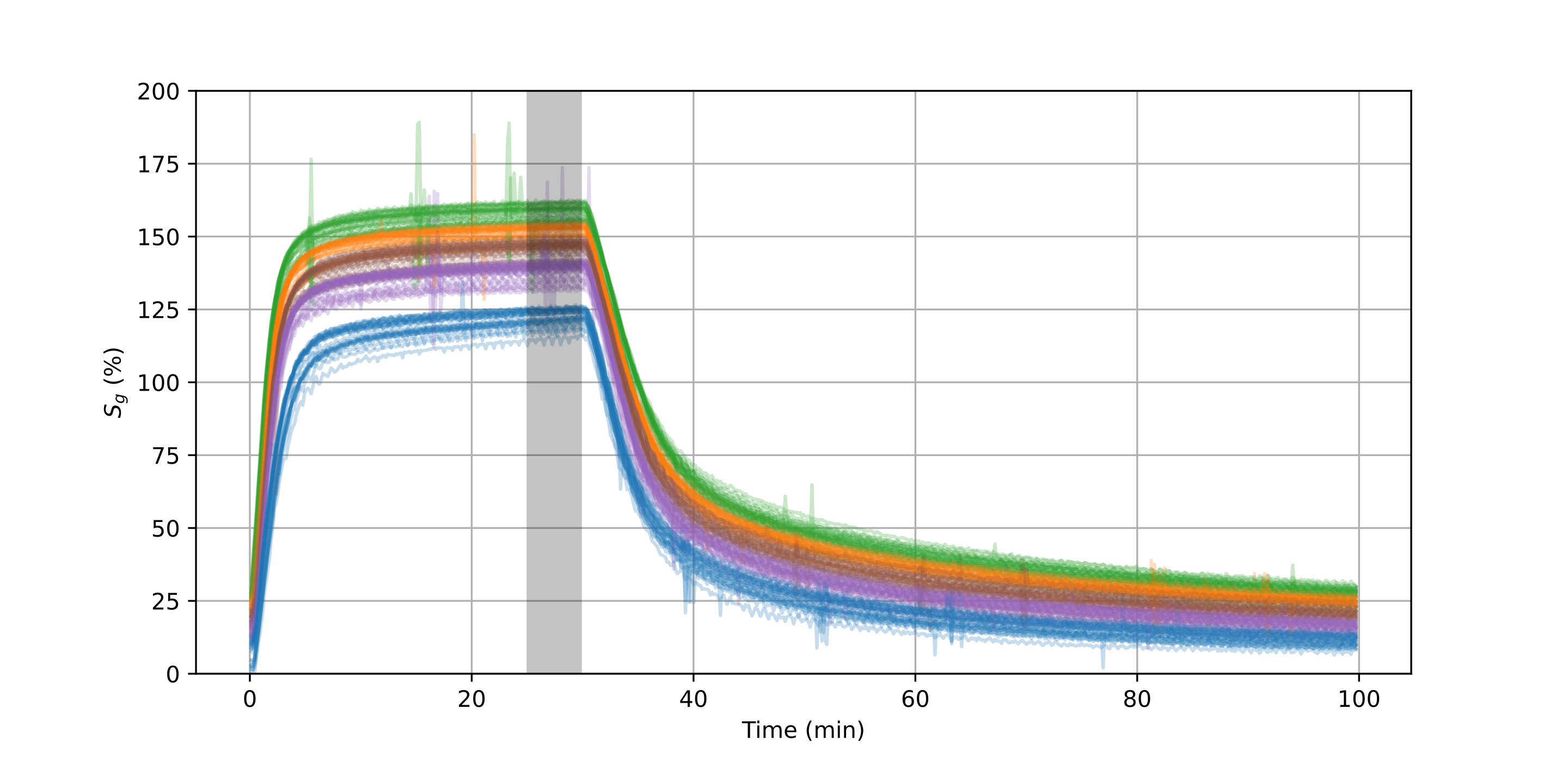

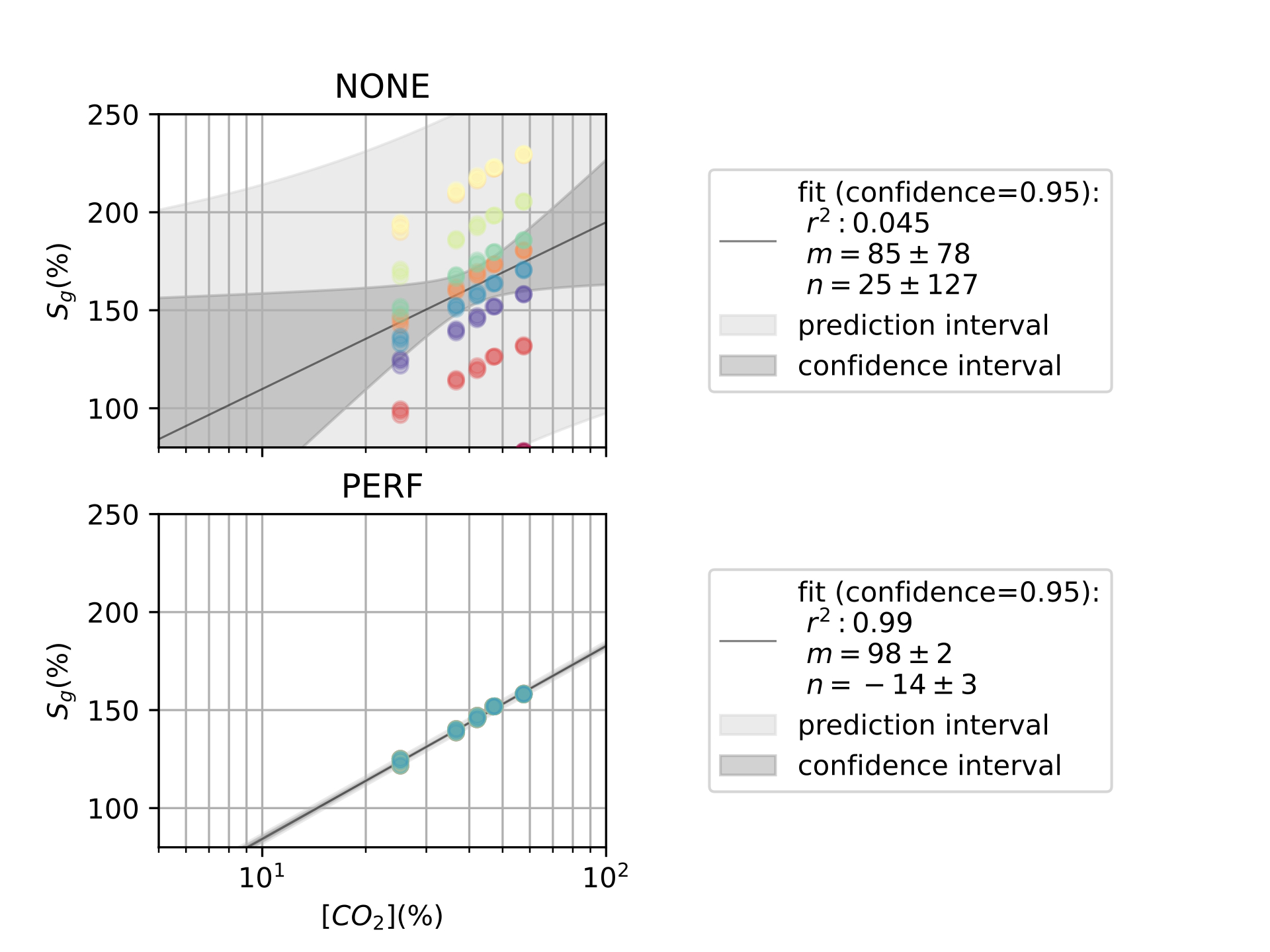

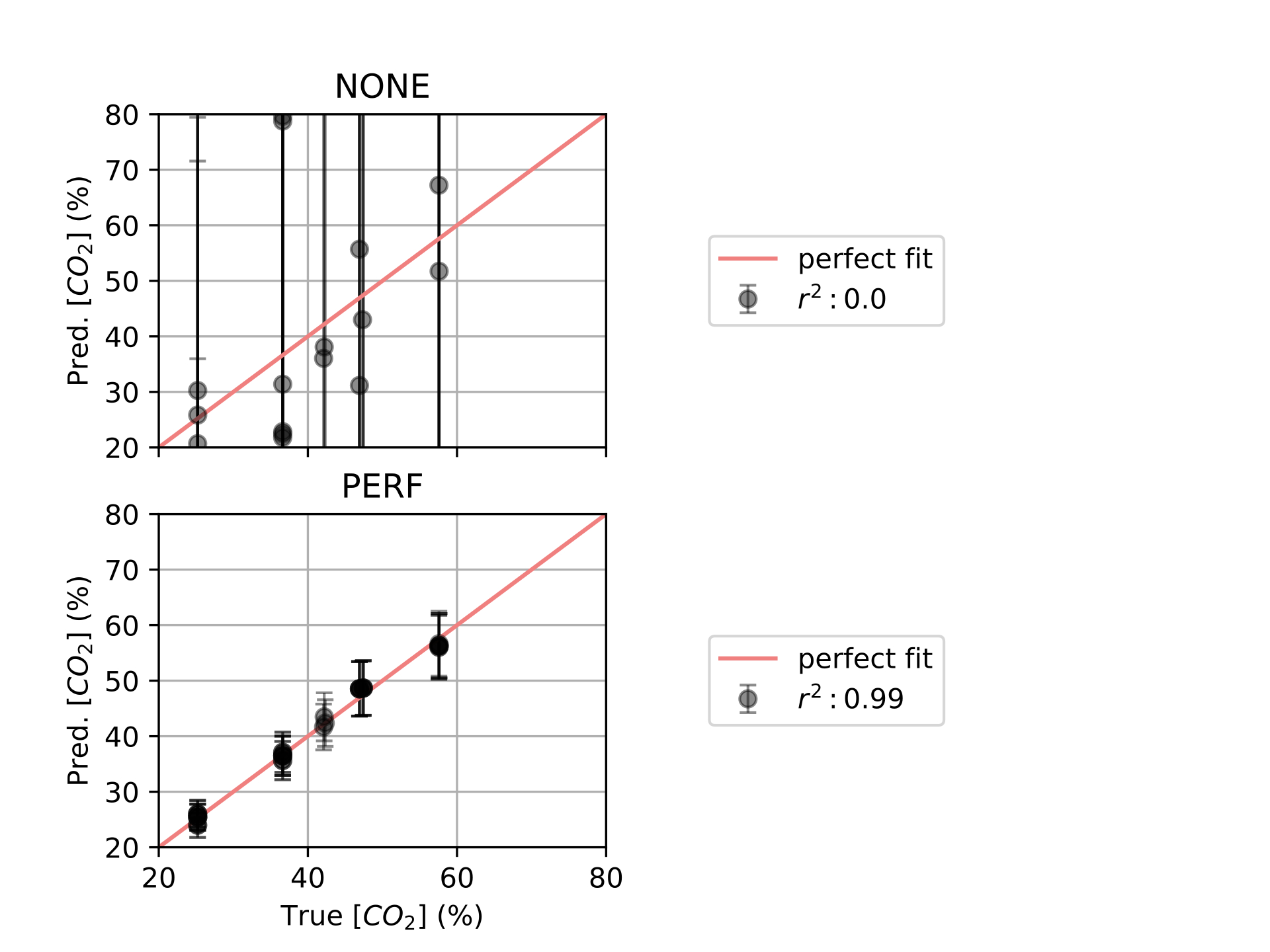

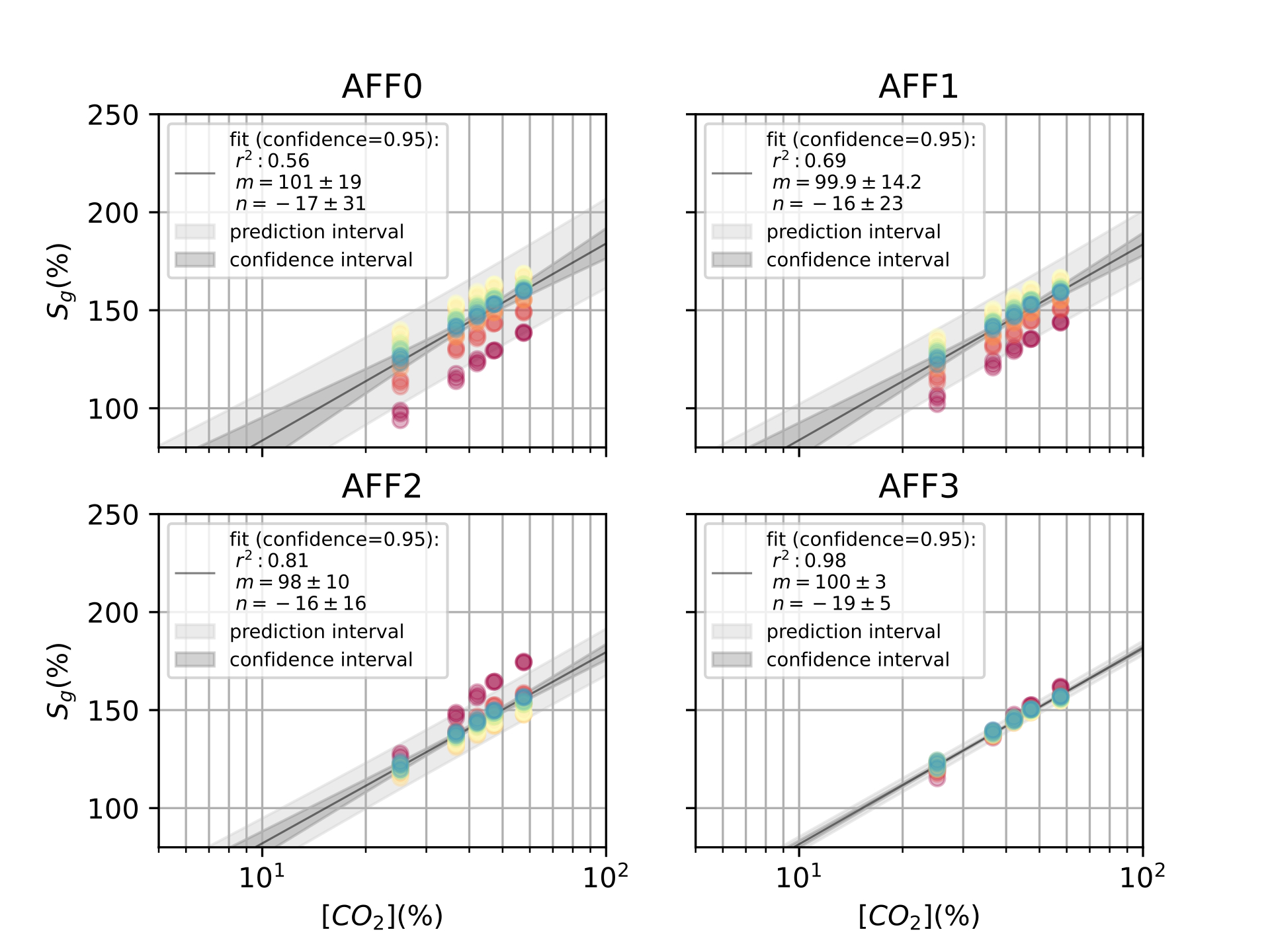

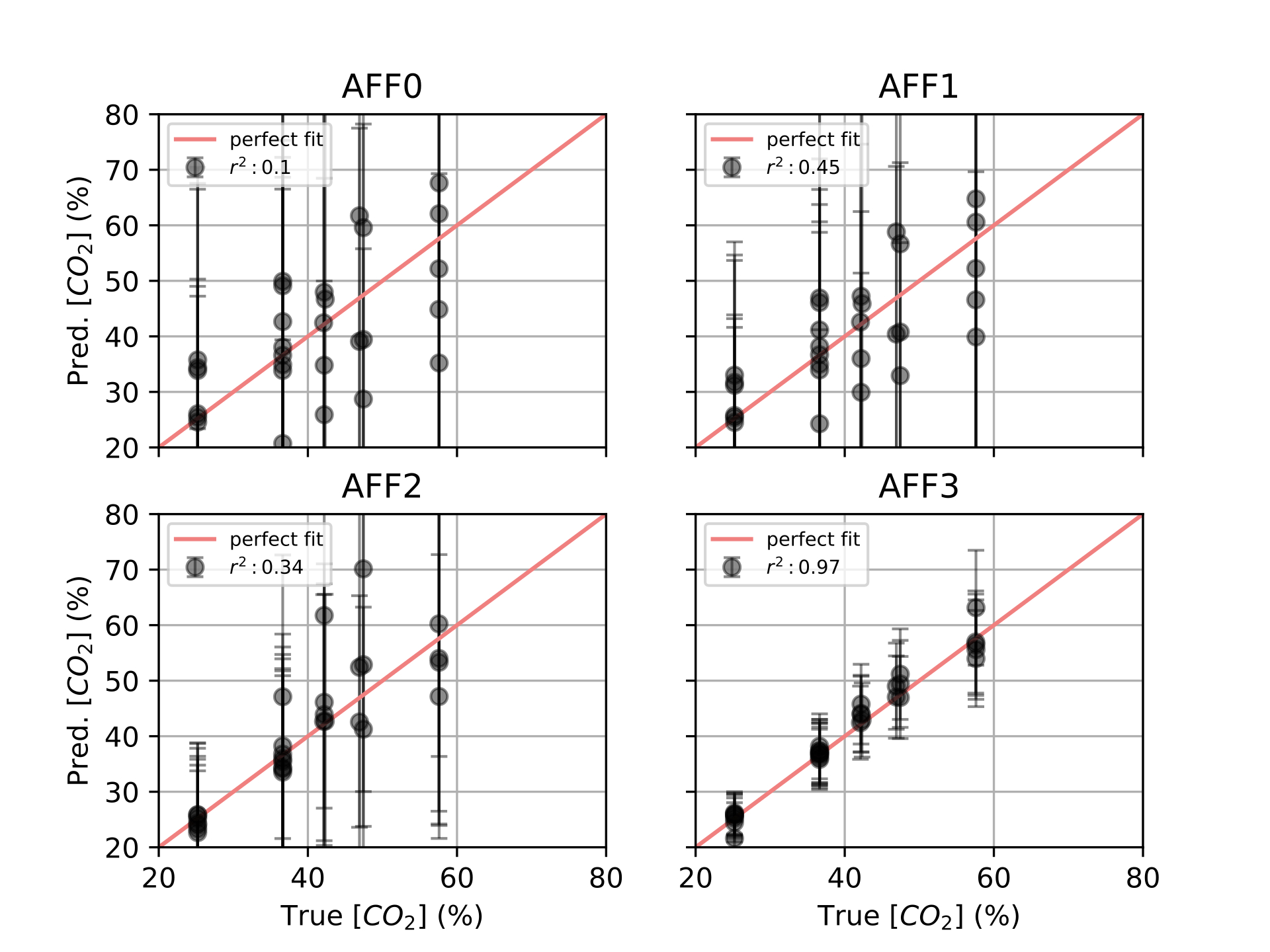

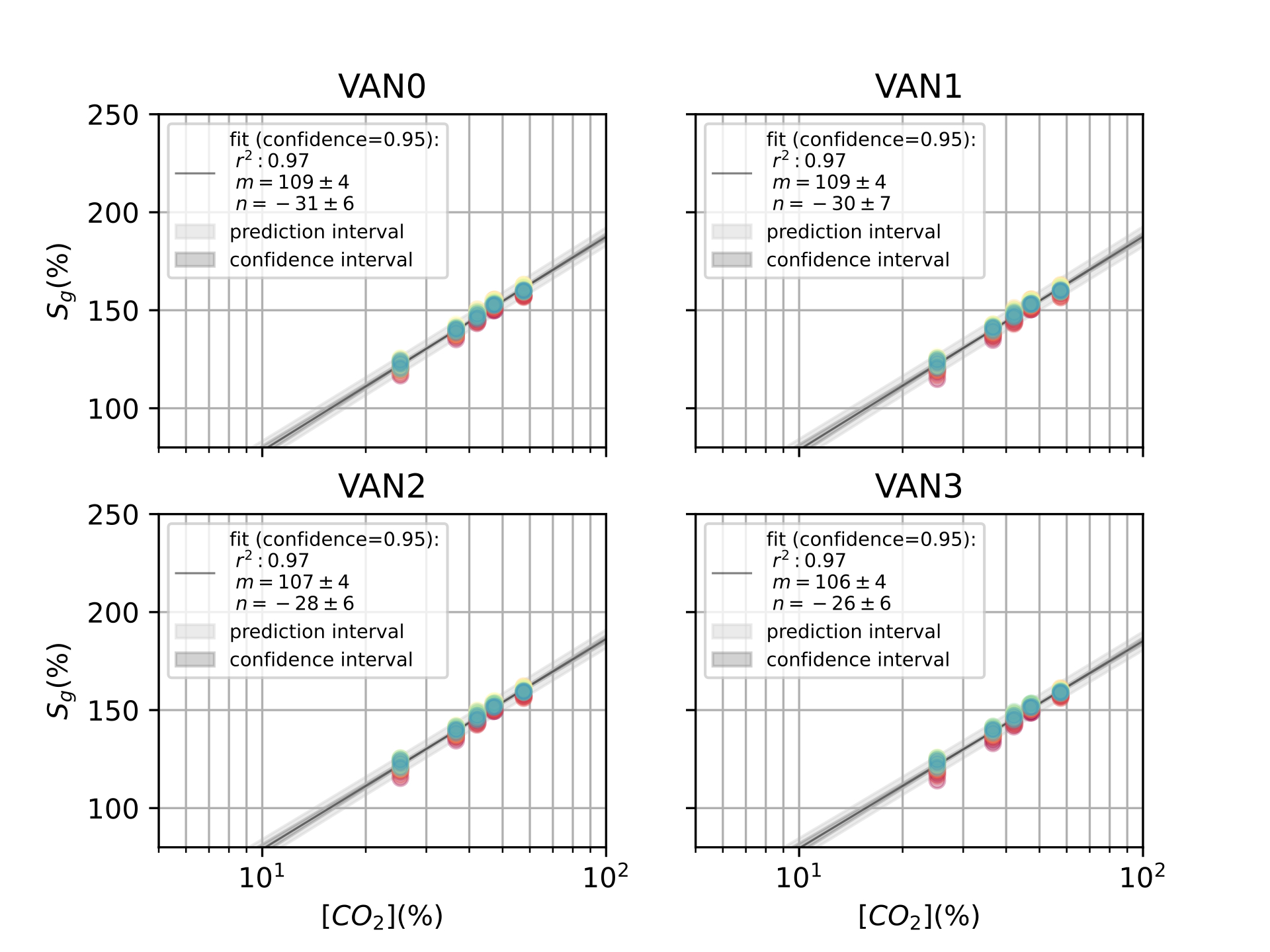

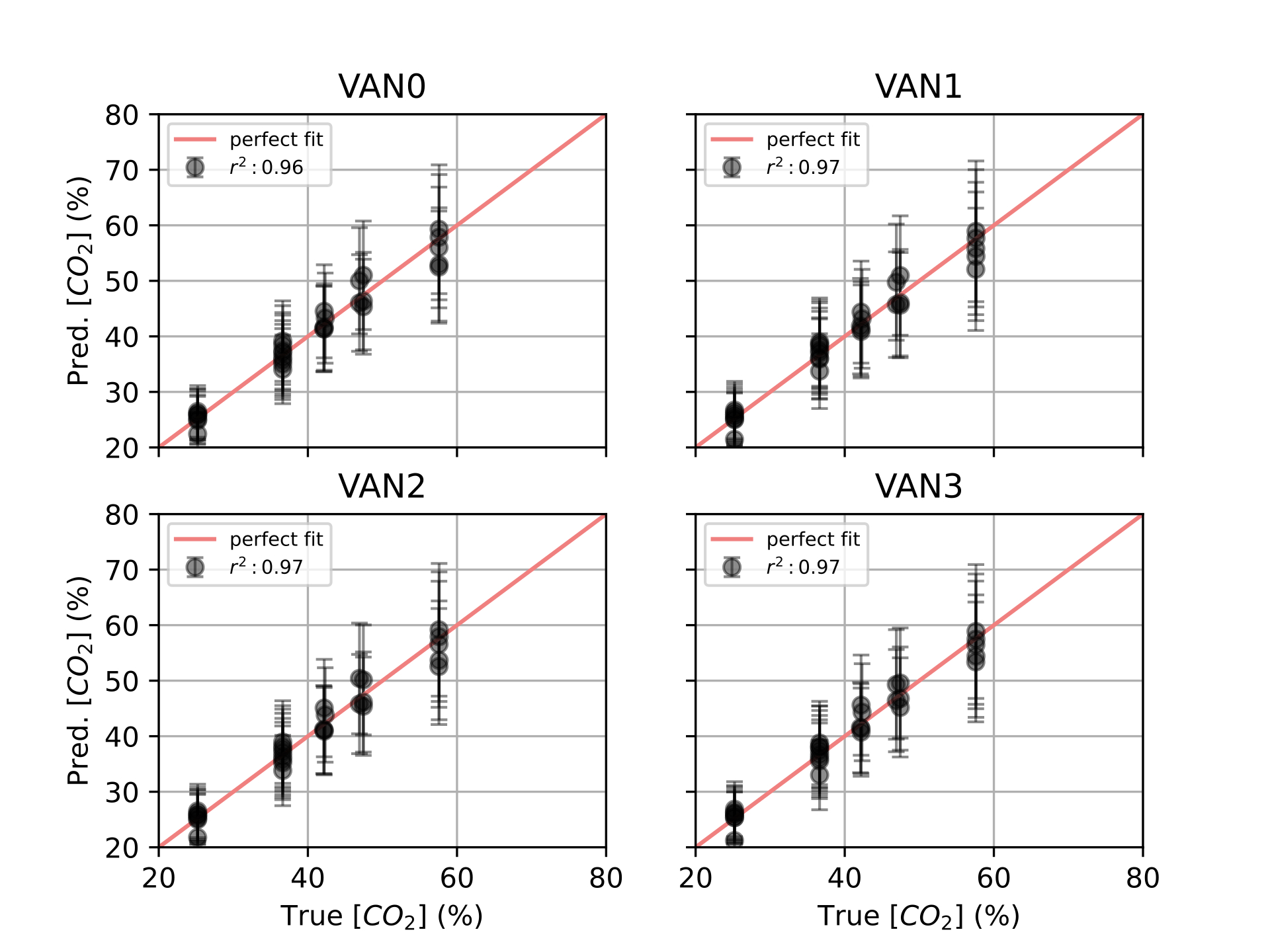

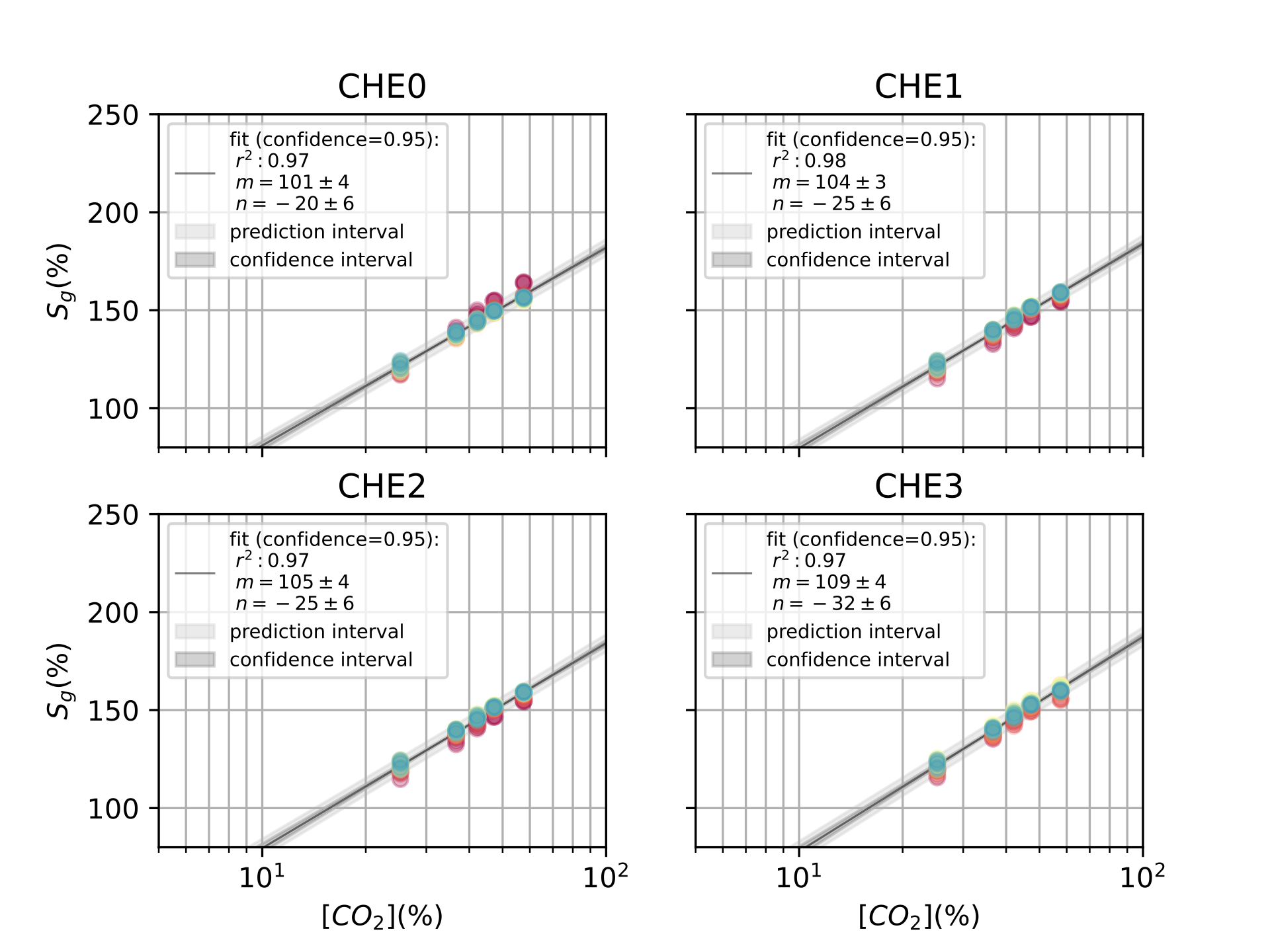

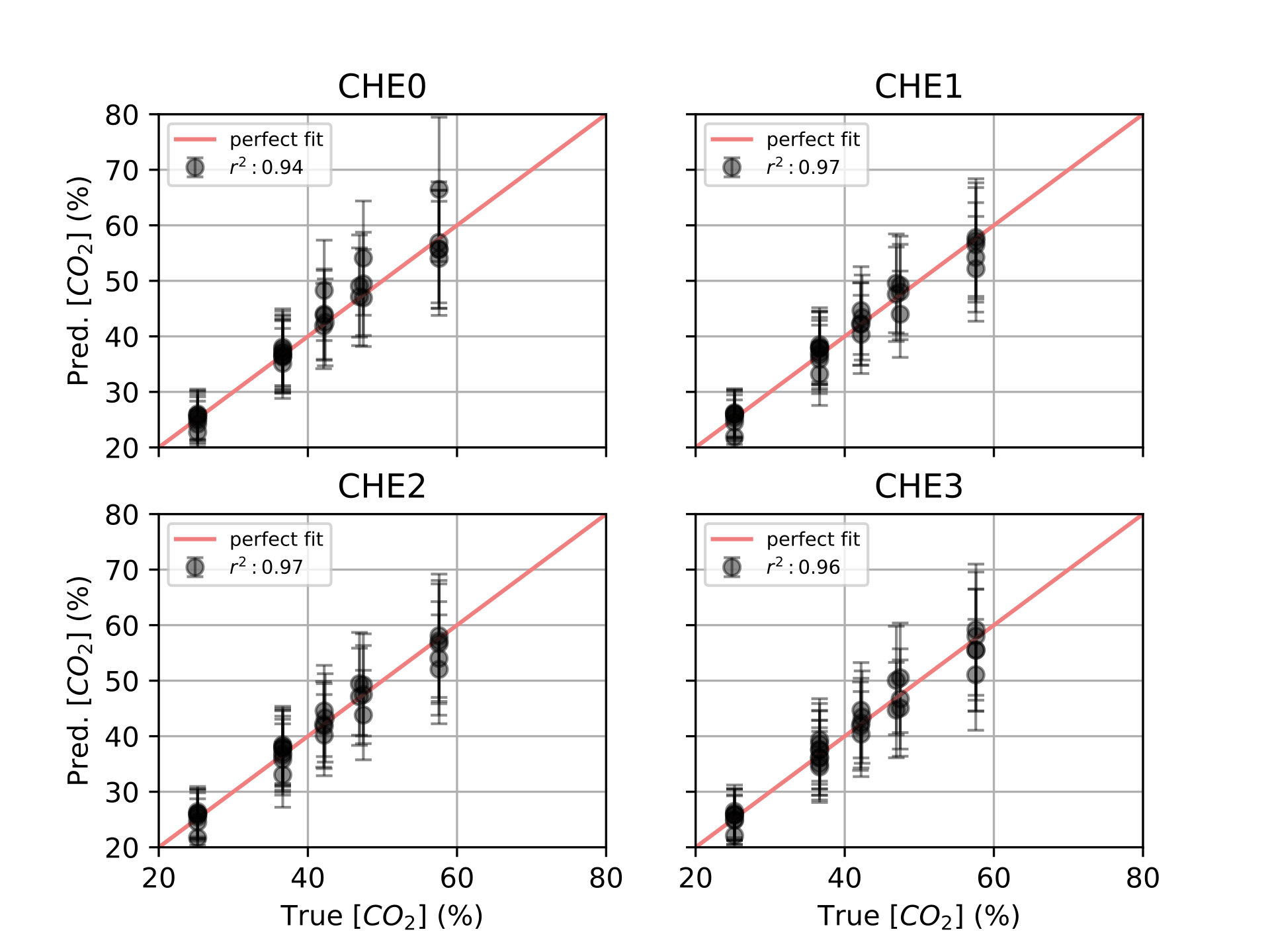

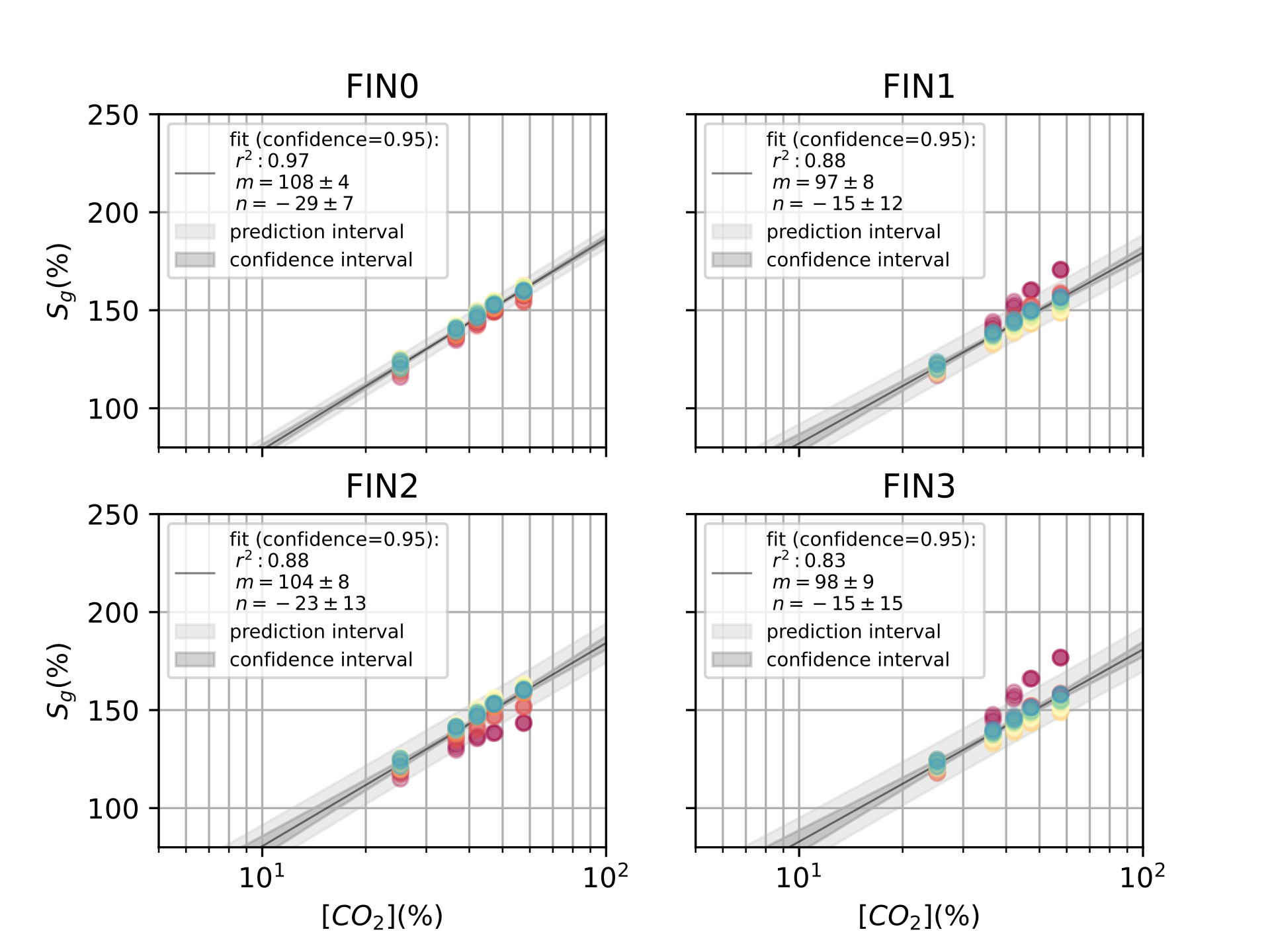

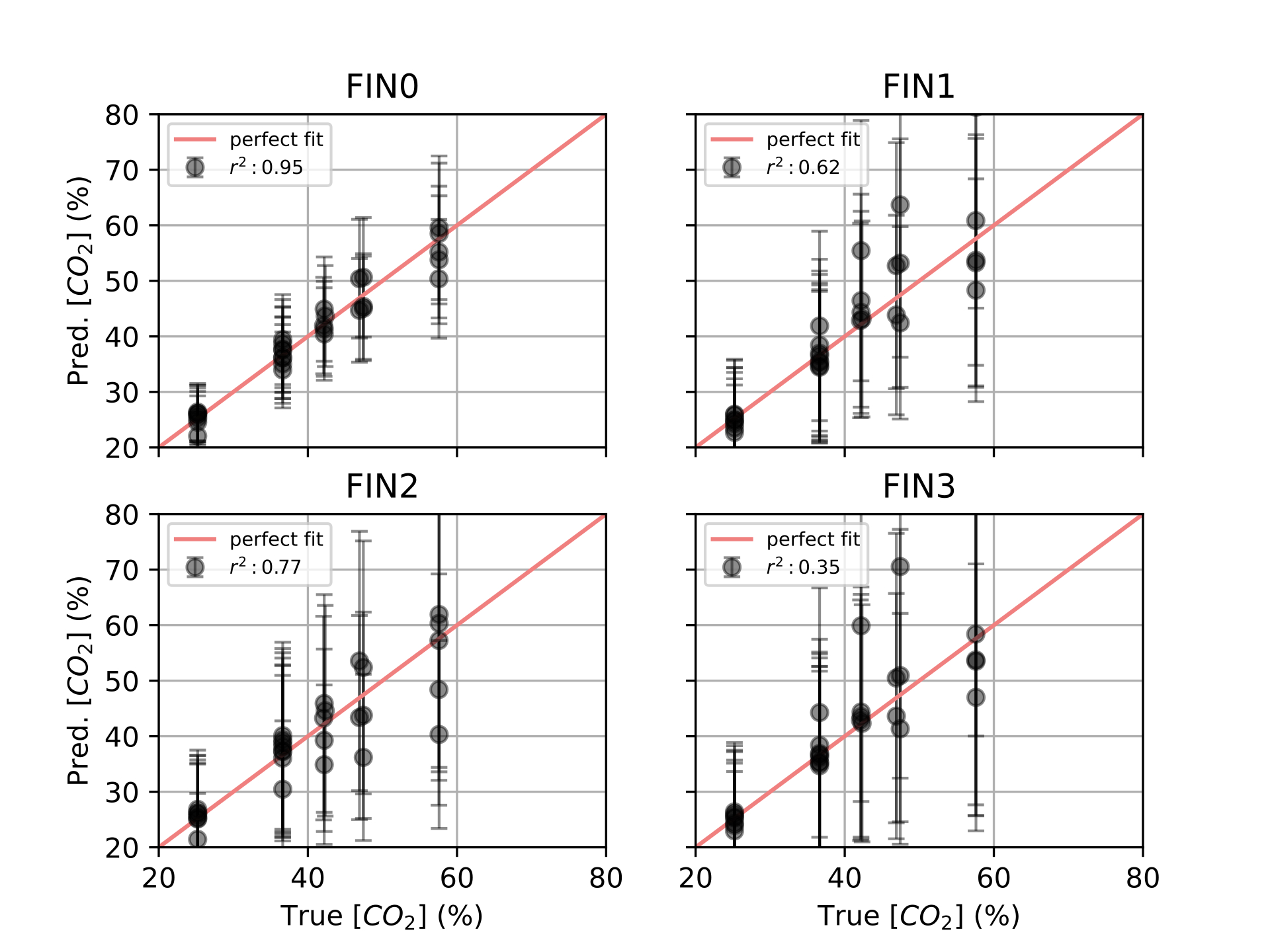

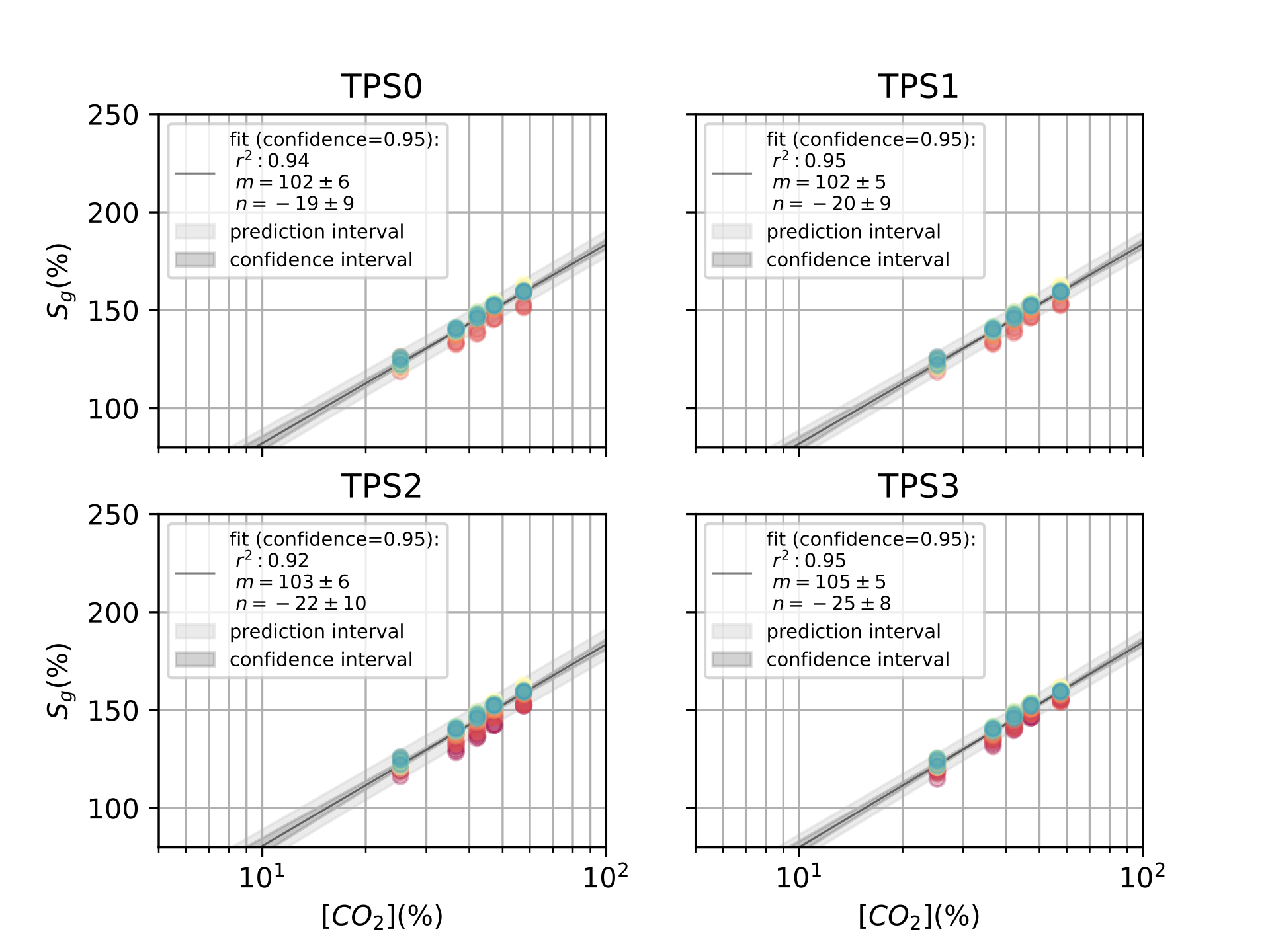

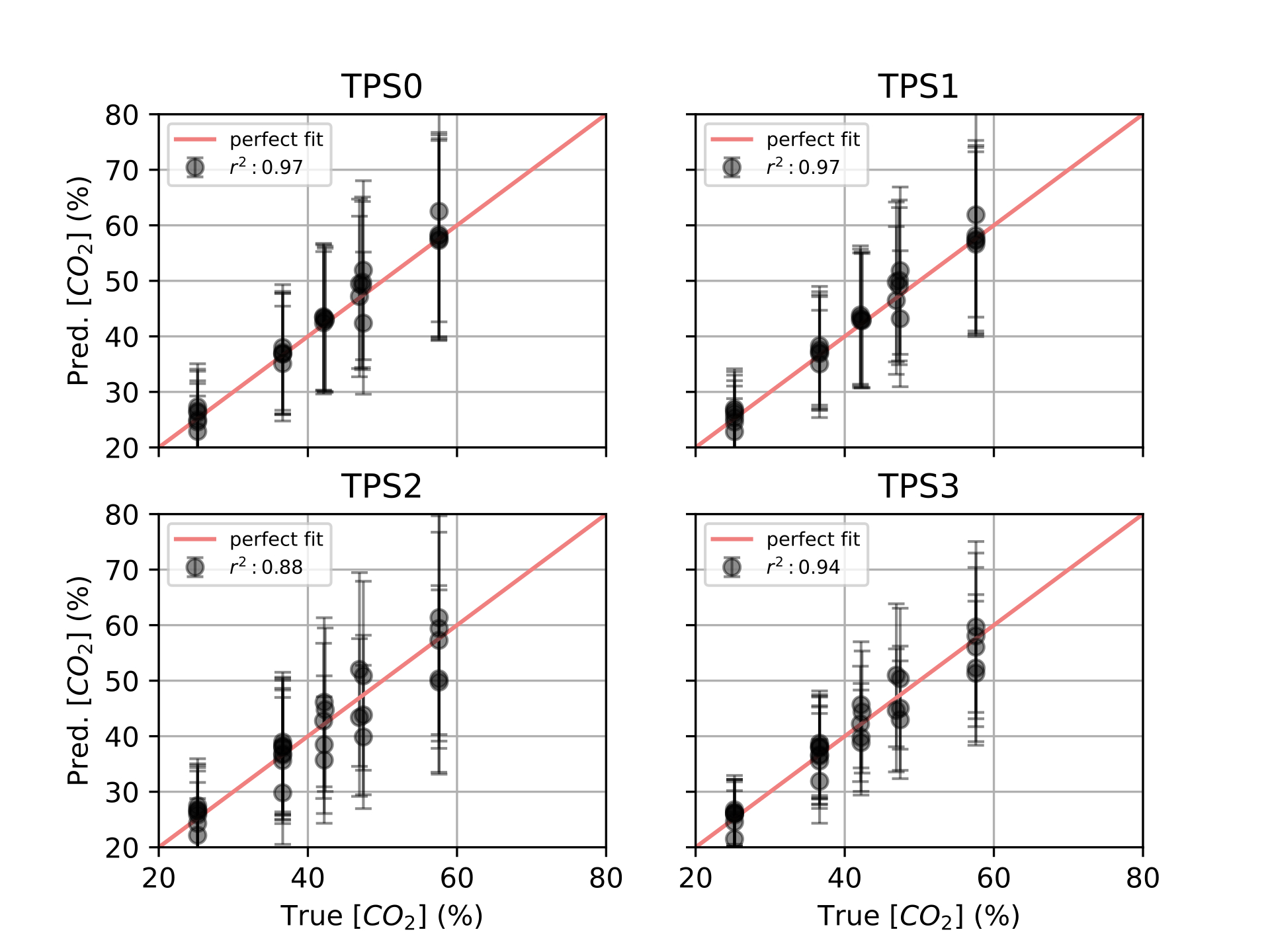

Finally, in [ch:7] we surveyed the different color sensors where we already used partial approaches to our solution [29], [30]. Then, we studied how tho apply our proposal to an actual application of a colorimetric indicator that sensed \(CO_2\) concentrations [31] in modified atmosphere packaging (MAP) [32].

Color reproduction is one of the most studied problems in the audiovisual industry, that is present in our daily lives, long before today’s smartphones, when color was introduced to the cinema, color analog cameras and color home TVs [11]. In the past years, reproducing and measuring color has also become an important challenge for other industries such as health care, food manufacturing and environmental sensing. Regarding health care, dermatology is one of the main fields where color measurement is a strategic problem, from measuring skin-tones to avoid dataset bias [33] to medical image analysis to retrieve skin lesions [34], [35]. In food manufacturing, color is used as an indicator to solve quality control and freshness problems [36]–[38]. As for environmental sensing [4], colorimetric indicators are widely spread to act as humidity [39], temperature [40] and gas sensors [41], [42].

In this section, we focus on image consistency, a reduced problem from color reproduction. While color reproduction aims at matching the color of a given object when reproduced in another device as an image (e.g. a painting, a printed photo, a digital photo on a screen, etc.), image consistency is the problem of taking different images of the same object in different illumination conditions and with different capturing devices, to finally obtain the same apparent colors for that object. In this problem, the apparent colors of an object do not need to match its “real” spectral color, they rather have to be just similar in each instance captured in different scenarios. In other words, all instances should match the first capture, or the reference capture, and not the real-life color. Therefore, image consistency is the actual problem to solve in the before-mentioned applications, in which it is more important to compare acquired images between them, so that consistent conclusions can be drawn with all instances, than comparing them to an actual reflectance spectrum.

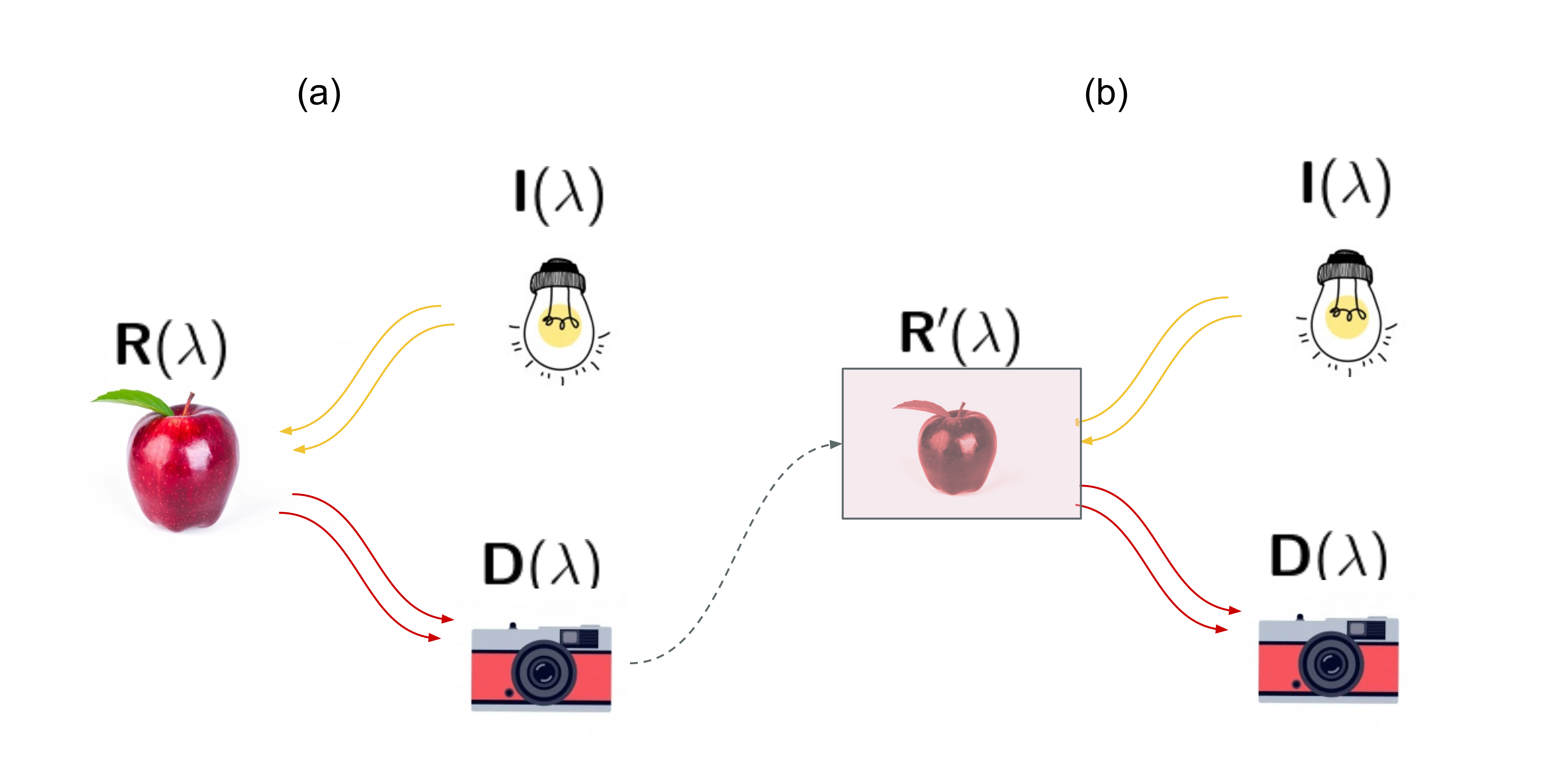

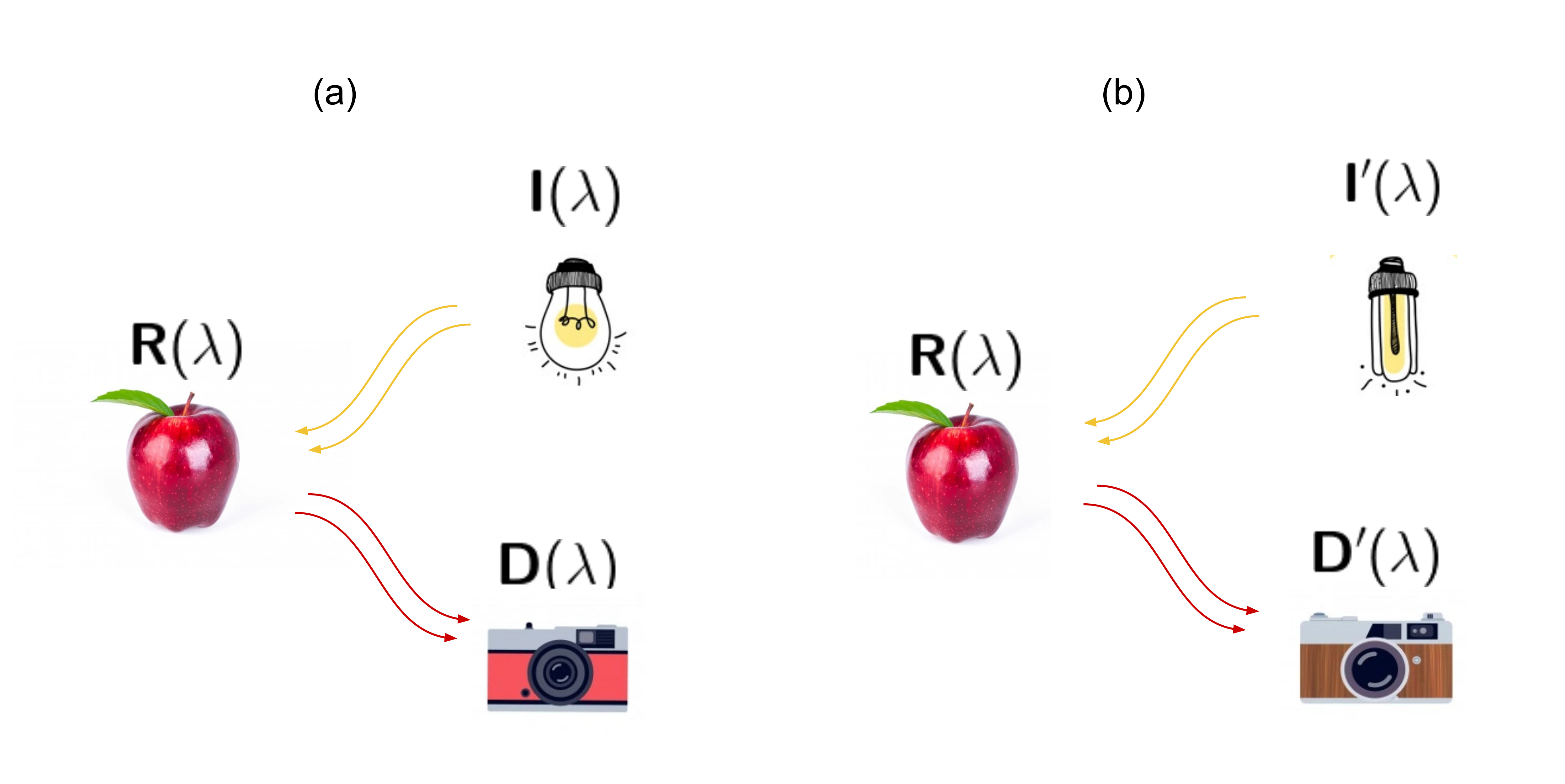

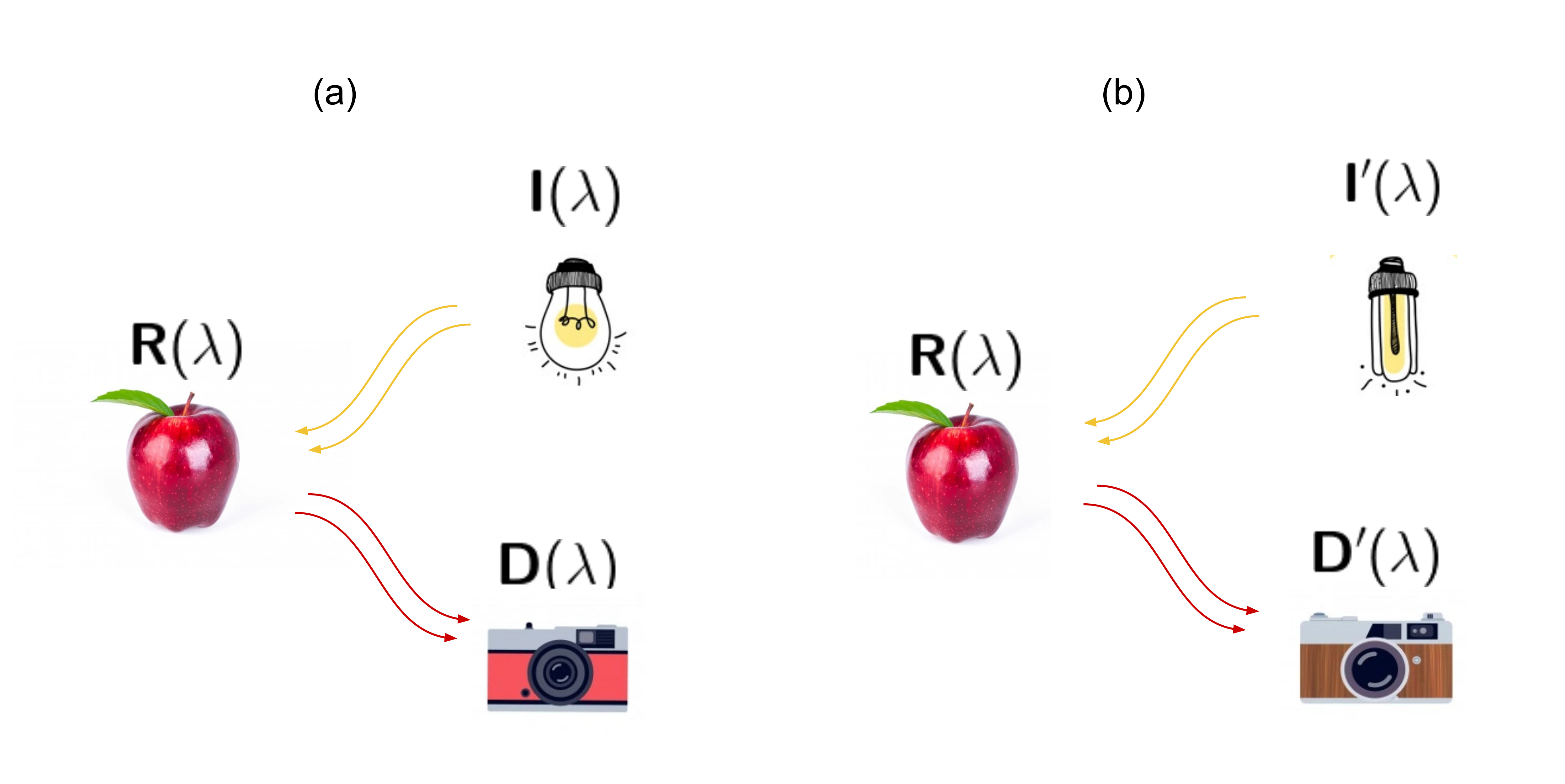

Color reproduction is the problem of matching the reflectance of an object with an image of this object [11]. This can be seen in [fig:colorreproduction].a, where an object (an apple), with a reflectance \(\mathrm{R (\uplambda)}\), is illuminated by a light source \(\mathrm{I (\uplambda)}\) and captured by a camera with a sensor response \(\mathrm{D (\uplambda)}\). In fact, digital cameras contain more than one sensor targeting different ranges of the visible spectrum: commonly, 3 types of sensors centered in red, green and blue colors [11].

In general, the signal acquired by one of the sensors inside the camera device can be modeled as [43]:

\[\mathrm {S_k \propto \int_{-\infty}^{\infty} I(\uplambda) \ R(\uplambda) \ D_k(\uplambda) \ d\uplambda} \label{eq:colorsingalintegral}\]

where \(k \in \{ 1, \dots , \ N \}\) are the channels of the camera, \(N\) is the total number of channels and \(\mathrm{\uplambda}\) are the visible spectra wavelengths. Then, [fig:colorreproduction].b portrays the color reproduction of the object, where now a new reflectance will be recreated and captured with the same conditions. Since our image is a printed image, the new reflectance will be:

\[\mathrm{R'(\uplambda)} = \sum_{i=0}^{M} f_i (S_1, \dots, S_N) \cdot \mathrm{R_i(\uplambda)} \label{eq:reproductionsum}\]

where \(\mathrm{R_i (\uplambda)}\) are the reflectance spectra of the \(M\) reproduction inks, which will be printed as a function of the acquired \(\mathrm{(S_1, \dots, S_N)}\) channel contributions. The color reproduction problem now can be written as the minimization problem to the distance of both reflectances:

\[\mathrm{\left \| R'(\uplambda) -R(\uplambda) \right \| \rightarrow 0} \label{eq:reproductionmin}\]

for each wavelength, for each illumination and for each sensor. The same formulation could be written when displaying images on a screen by changing \(\mathrm{R (\uplambda)}\) for \(\mathrm{I (\uplambda)}\) of the light emitting screen.

Color reproduction is a wide open problem, and with each step towards its general solution, the goal of achieving image consistency when acquiring image datasets is nearer. Since color reproduction solutions aim at attaining better acquisition devices and better reproduction systems, the need for solving the image consistency problem will eventually disappear. But this is not yet the case.

However, the image consistency problem is far simpler than the color reproduction problem. The image consistency problem can be seen as the problem to match the acquired signal of any camera, under any illumination for a certain object. This can be seen in [fig:imageconsistency].a: an object (an apple), which has a reflectance \(\mathrm{R (\uplambda)}\), is illuminated by a light source \(\mathrm{I (\uplambda)}\) and it is captured by a camera with a sensor response \(\mathrm{D (\uplambda)}\). Now, in [fig:imageconsistency].b, the object is not reproduced but exposed again to different illumination conditions \(\mathrm{I' (\uplambda)}\) and captured by a different camera \(\mathrm{D' (\uplambda)}\).

Under their respective illumination, each camera will follow [eq:colorsingalintegral], providing three different \(\mathrm{S_k}\) channels. Considering we can write a vector signal from the camera as:

\[\mathbf{s} = \mathrm{(S_1, \dots, S_N)} \ \mathrm{,} \label{eq:colorvector}\]

the image consistency problem can be written as the minimization problem to the distance between acquired signals:

\[\left \| \mathbf{s}' - \mathbf{s} \right \| \rightarrow 0 \label{eq:consistencymin}\]

for each camera, for each illumination for a given object.

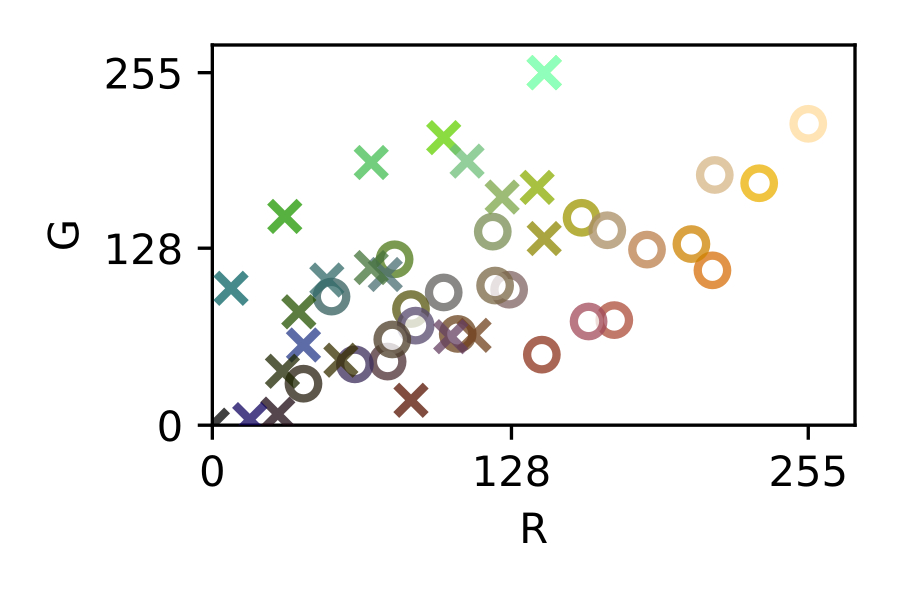

The image consistency problem is easier to solve, as we have changed the problem from working with continuous spectral distributions (see [eq:reproductionmin]) to N-dimensional vector spaces (see [eq:consistencymin]). These spaces are usually called color spaces, and the mappings between those spaces are usually called color conversions. Deformations or corrections inside a given color space are often referred to as color corrections. In this thesis, we will be using RGB images from digital cameras. Thus, we will work with device-dependent color spaces.

This means that the mappings will be performed between RGB spaces. Then, we can rewrite the color vector definition for RGB colors following [eq:colorvector] as:

\[\mathbf{s} = \mathrm{(r, g, b),} \ \ \mathbf{s} \in \mathbb{R}^3 \ \mathrm{,} \label{eq:colorvectorrgb}\]

where \(\mathbb{R}^3\) represents here a generic 3-dimensional RGB space. In [subsec:colorspaces], we detail how color spaces are defined according to their bit resolution and color channels.

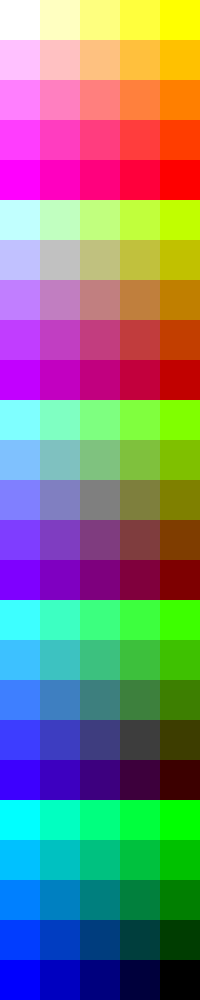

The traditional approach to achieve a general purpose color correction is the use of color rendition charts, introduced by C.S. McCamy et. al. in 1976 [13] (see [fig:colochecker]). Color charts are machine-readable patterns placed in a scene that embed reference patches of a known color, where in order to solve the problem, several color references are placed in a scene to be captured and then used in a post-capture color correction process.

These color correction processes involve algorithms to map the color references seen in the chart to their predefined nominal colors. This local color mapping is then extrapolated and applied to the whole image. There exists many ways to correct the color of images to achieve consistency.

The most extended way to do so is to search for device-independent color spaces (i.e. CIE Lab, CIE XYZ, etc.) [11]. But in the past decade, there have appeared solutions that involve direct corrections between device-dependent color spaces, without the need to pass through device-independent ones.

The most simple color correction technique is the white balance, that only involves one color reference [44]. A white reference inside the image is to be mapped to a desired white color and then the entire image is transformed using a scalar transformation. Beyond that, other techniques that use more than one color reference can be found elsewhere, using affine [44], polynomial [27], [28], root-polynomial [28] or thin-plate splines [15] transforms.

It is safe to say that, in most of these post-capture color correction techniques, increasing the number and quality of the color references offers a systematic path towards better color calibration results. This strategy however, comes along with more image area dedicated to accommodate these additional color references and therefore, a compromise must be found.

This led X-Rite (a Pantone subsidiary company), to introduce improved versions of the ColorChecker, like the ColorChecker Passport Photo 2 ® kit (see Figure 4.a). Also in this direction, Pantone presented in 2020 an improved color chart called Pantone Color Match Card ® (see Figure 4.b), based on the ArUco codes introduced by S. Garrido-Jurado et al. in 2015 [19] to facilitate the location of a relatively large number of colors. Still, the size of these color charts is too big for certain applications with size constraints (e.g. smart tags for packaging [30], [45]).

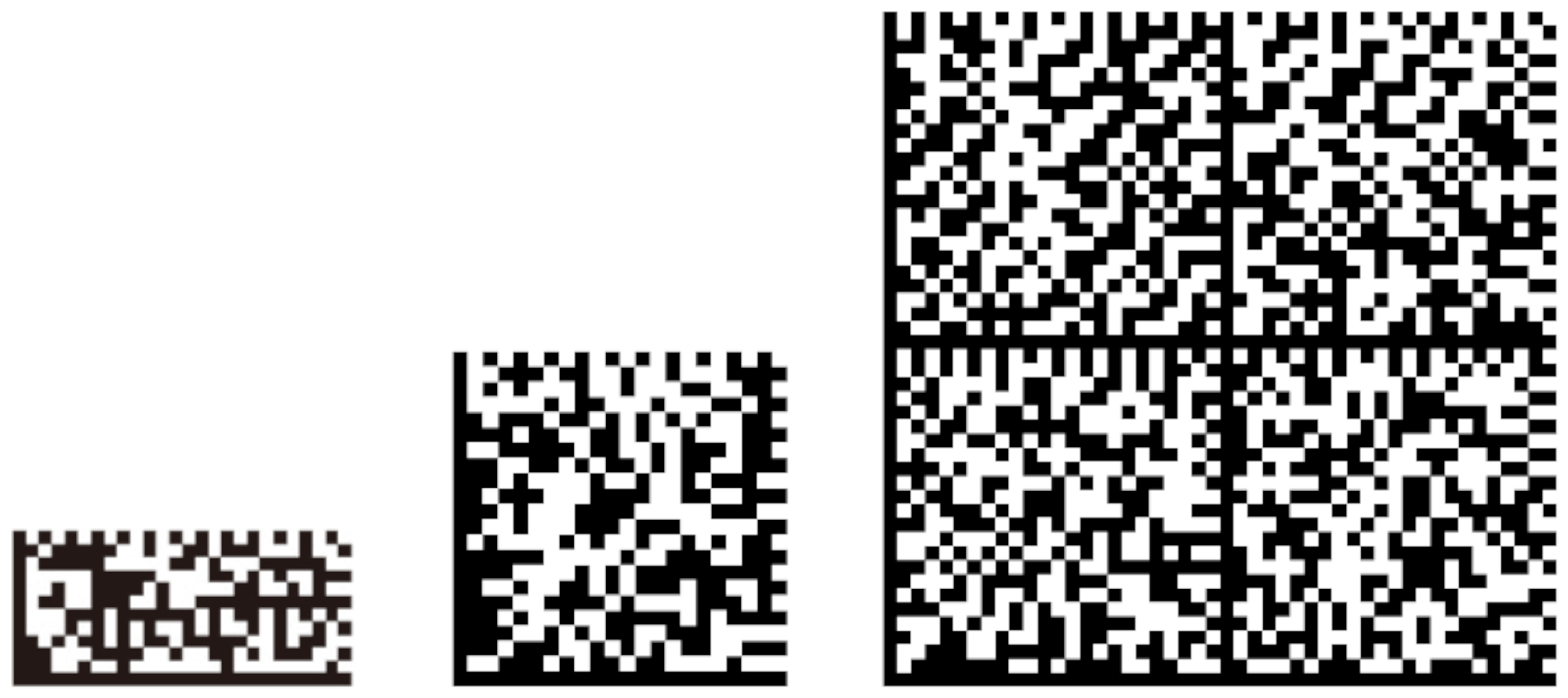

Quick-Response Codes, popularized as QR Codes, are 2D barcodes introduced in 1994 by Denso Wave [20], which aimed at replacing traditional 1D barcodes in the logistic processes of this company. However, the use of QR Codes has escalated in many ways and are now present in manifold industries: from manufacturing to marketing and publicity, becoming a part of the mainstream culture. In all these applications, QR Codes are either printed or displayed and later acquired by a reading device, which normally includes a digital camera or barcode scanner. Also, there has been an explosion of 2D barcode standards [46]–[50] (see [fig:2dbarcodes]).

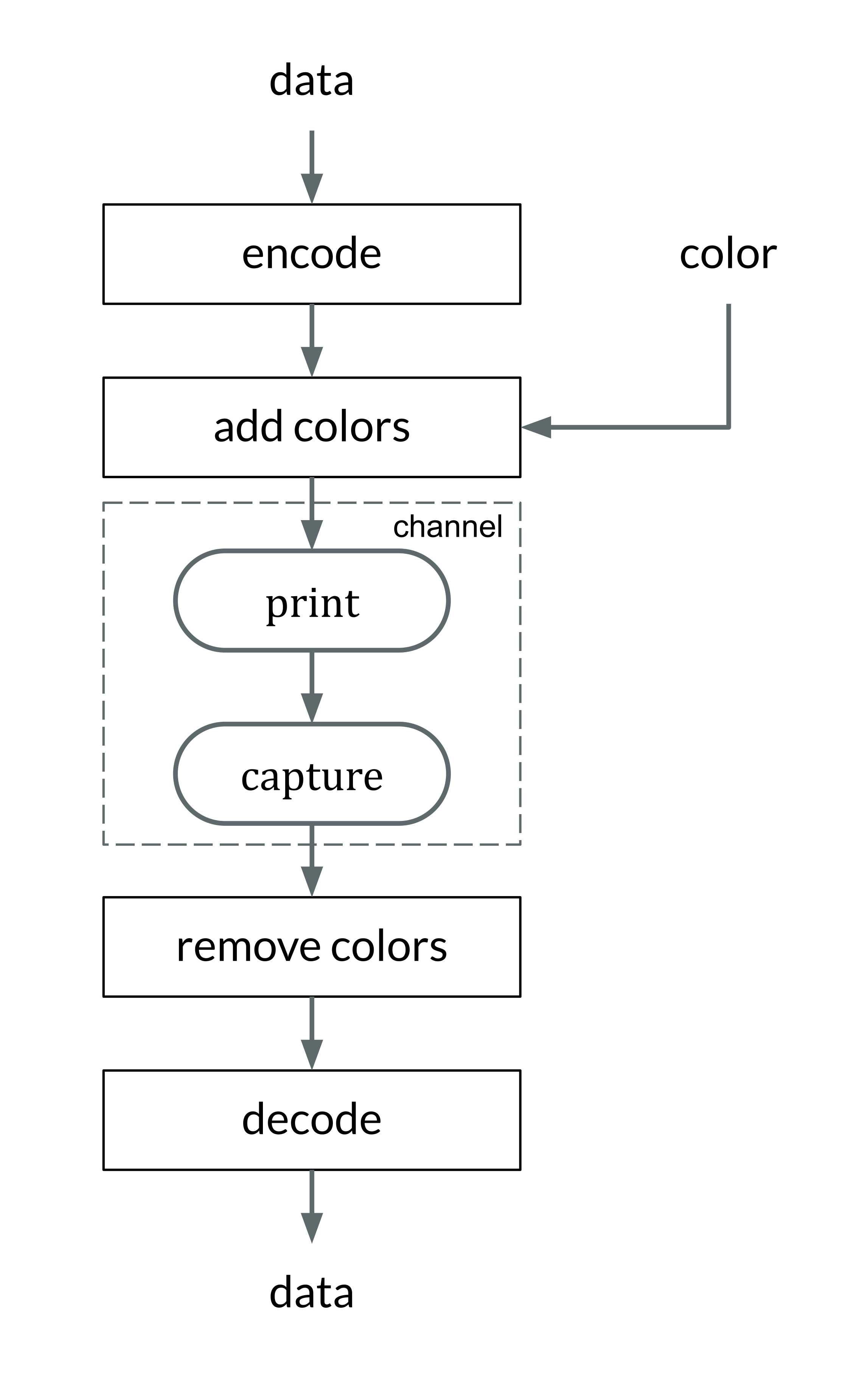

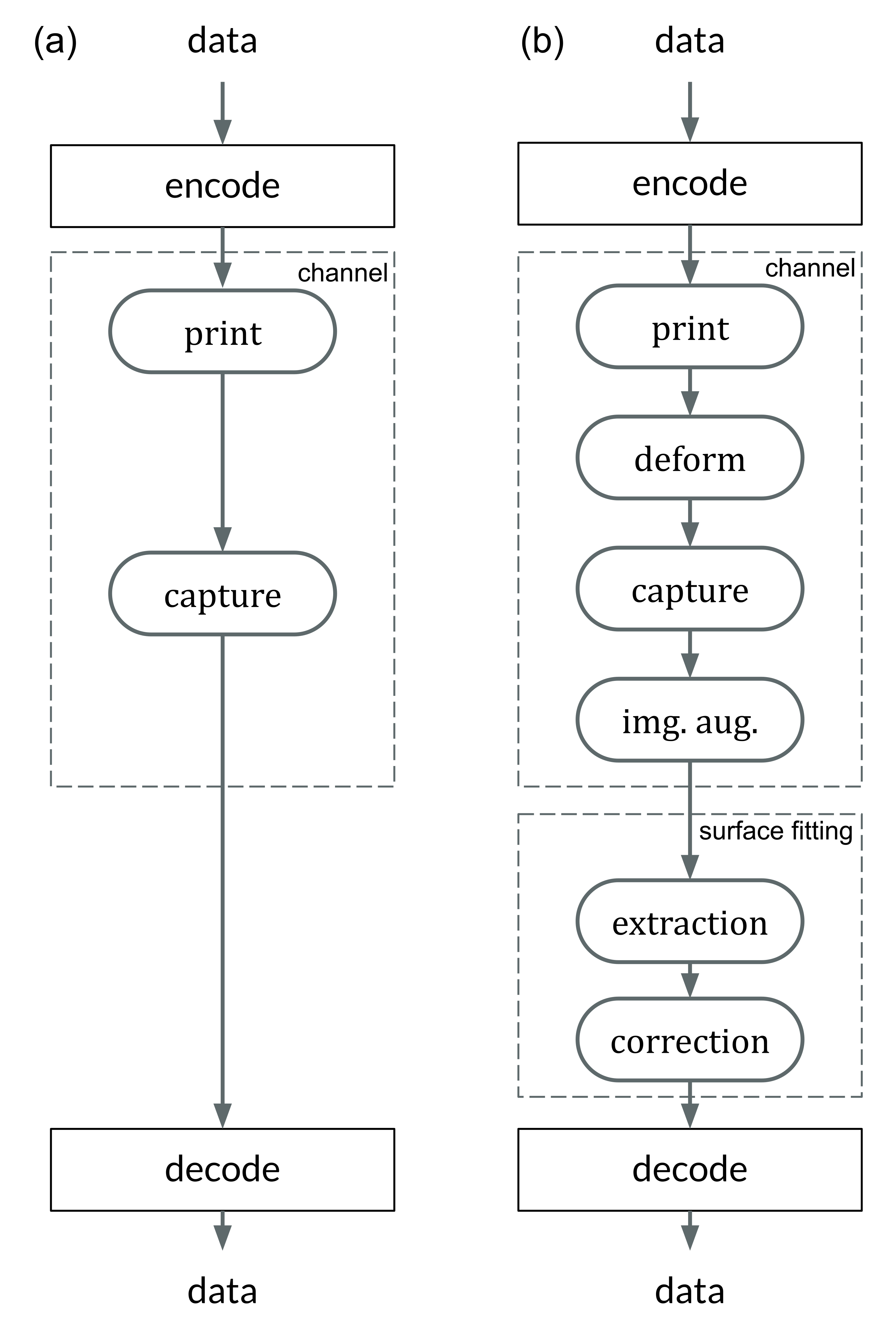

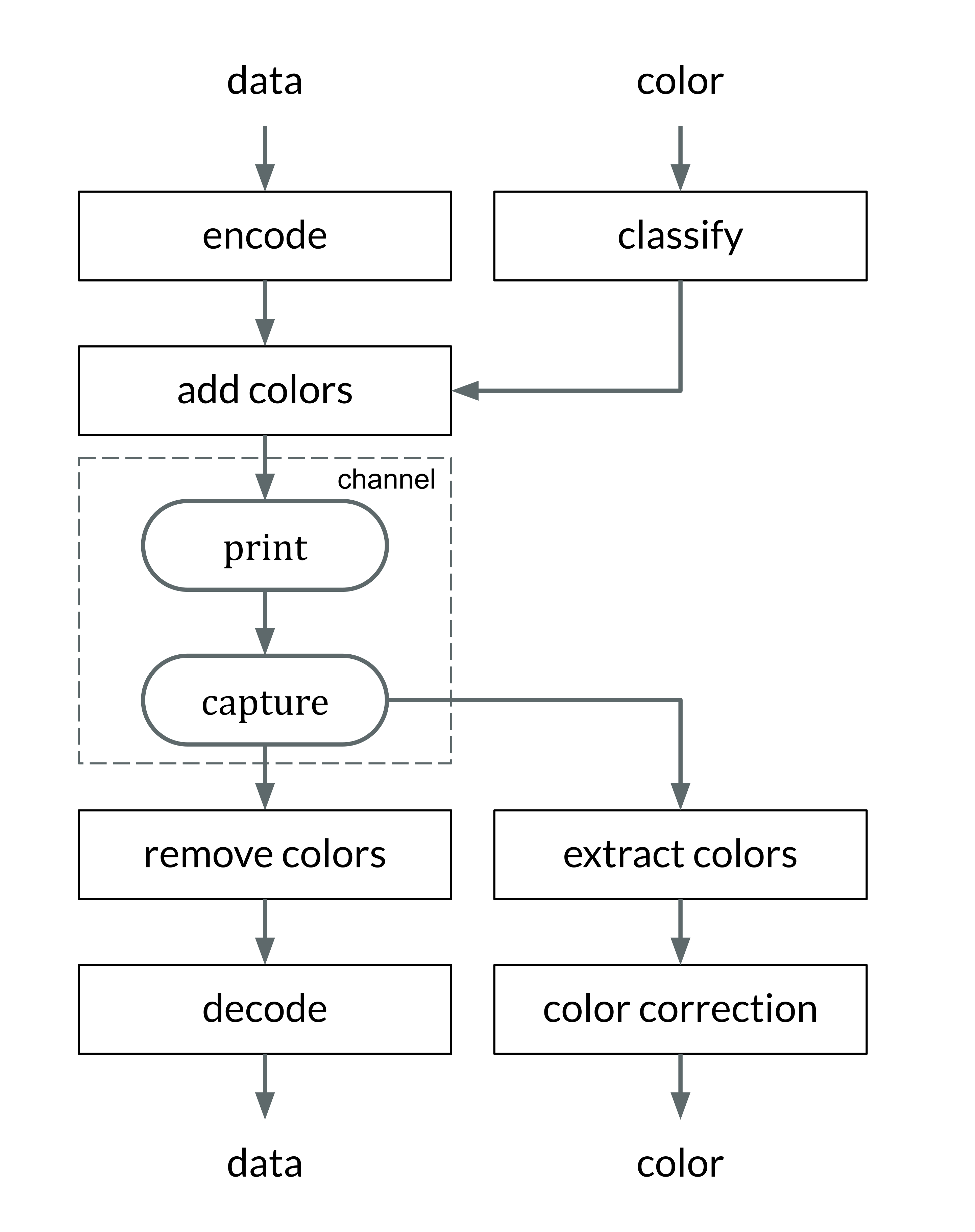

The process of encoding and decoding a QR Code could be considered as a form of communication through a visual channel (see [fig:defaultqrflow]): a certain message is created, then split into message blocks, these blocks are encoded in a binary format, and finally encoded in a 2D array. This 2D binary array is an image that is transmitted through a visual channel (printed, observed under different illuminations and environments, acquired as a digital image, located, resampled, etc.). On the decoder side, the binary data of the 2D binary array is retrieved, the binary stream is decoded, and finally the original message is obtained.

From the standpoint of a visual communication channel, many authors before explored the data transmission capabilities of the QR Codes, especially as steganographic message carriers (data is encoded in a QR Code, then encoded in an image) due to their robust error correction algorithm [51], [52].

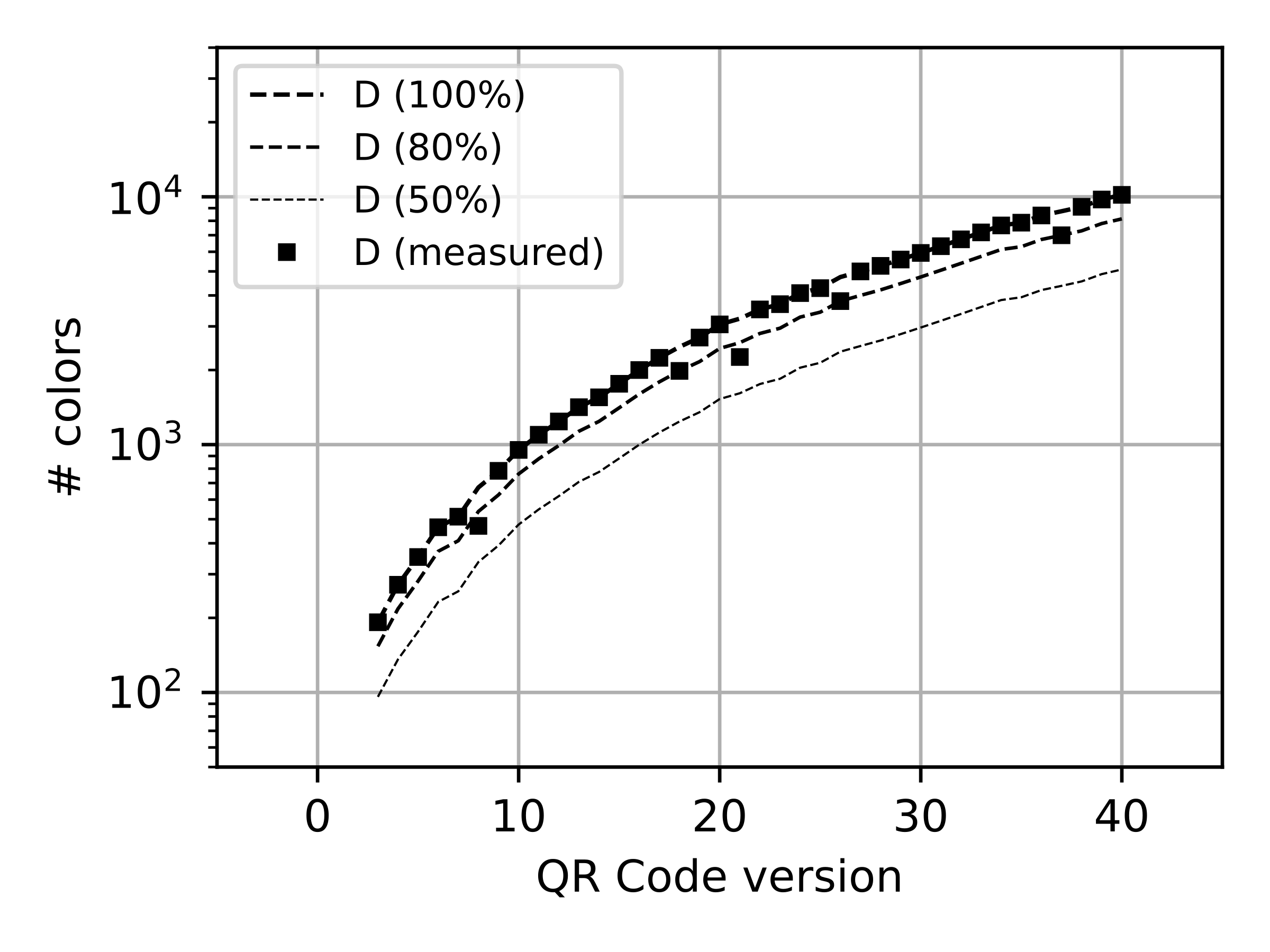

Many 2D barcode standards allow modulating the amount of data encoded in the barcode. For example, the QR Code standard implements different barcode versions from version 1 to version 40. Each version increases the edges of the QR Code by 4 pixels, the so-called modules. From the starting \(\mathrm{21 \times 21}\) (\(\mathrm{v1}\)) modules up to \(\mathrm{144 \times 144}\) modules (\(\mathrm{v40}\)) [20].

For each version, the location of every computer vision feature is fully specified in the standard (see [fig:qrversions]), in [subsec:qrfeatures] we will focus on these features. Some other 2D barcode standards are flexible enough to cope with different shapes, such as rectangles in the DataMatrix codes (see [fig:dmversions]), which can be easier to adapt to different substrates or physical objects [46].

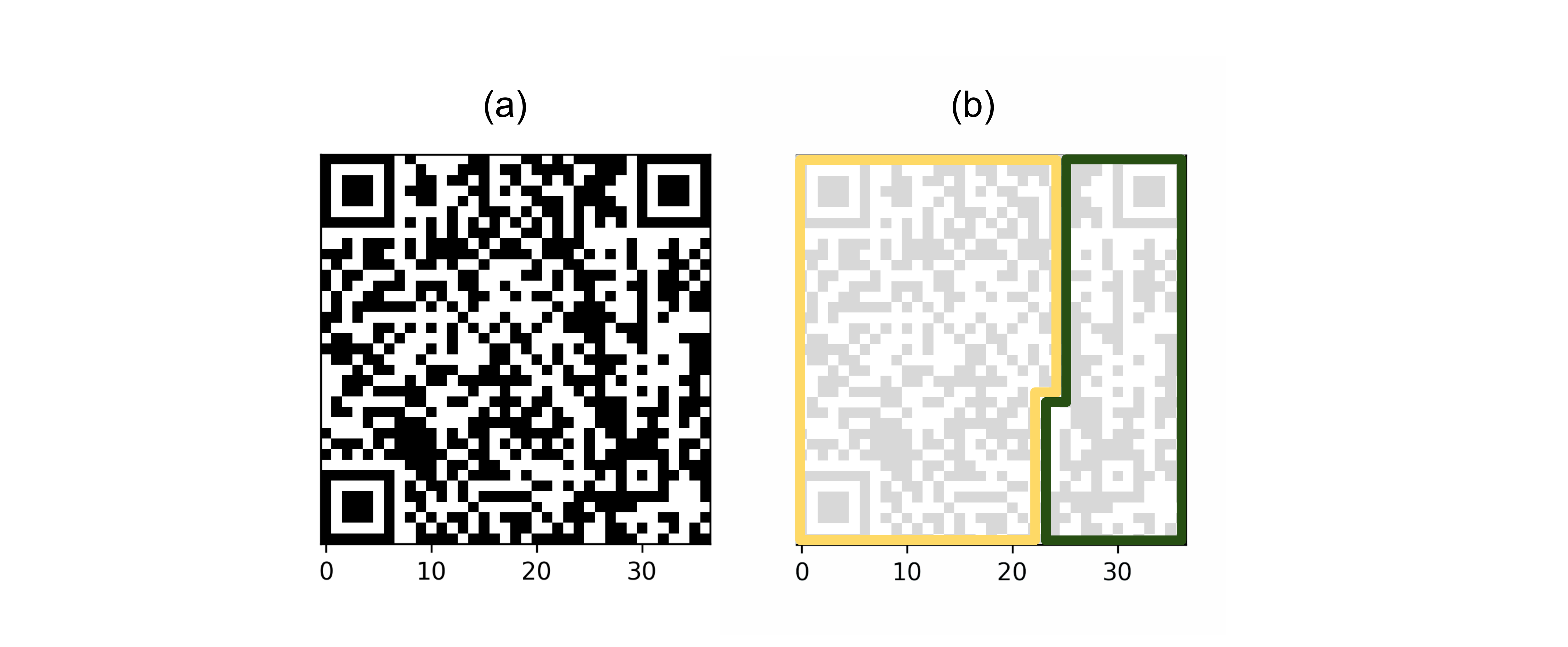

These different possible geometries must be considered when adding colors to a 2D barcode. In the case of the QR Codes and DataMatrix codes, the larger versions are built by replicating a basic squared block. Therefore, the set of color references could be replicated in each one of these blocks, to gain in redundancy and in a more local color correction. Alternatively, different sets of color references could be used in each periodic block to facilitate a more thorough color correction based on a larger set of color references.

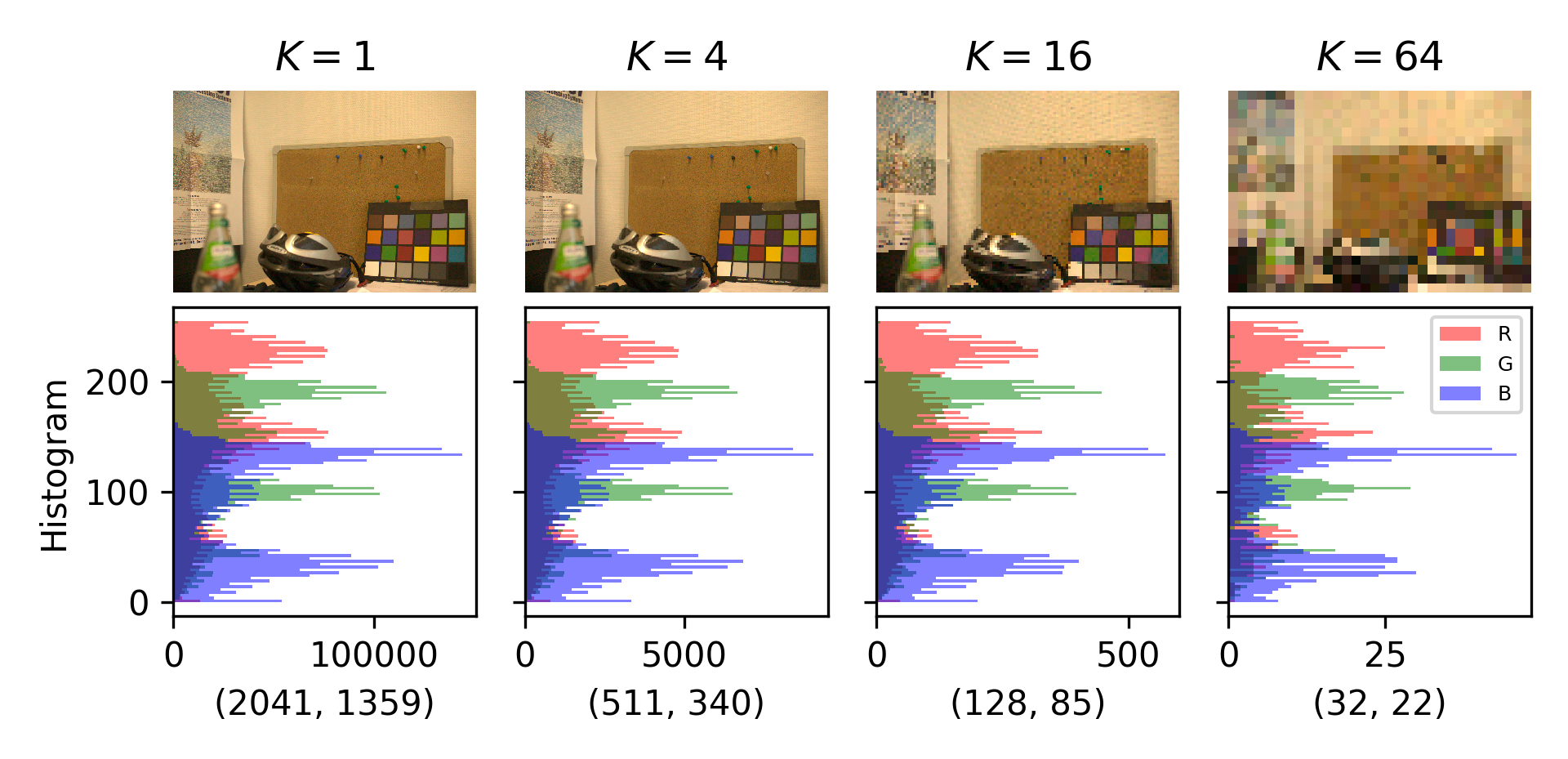

Regarding this size and shape modularity in 2D barcode encoding, there exist a critical relationship between the physical size of the modules and the pixels in a captured image. This is a classic sampling phenomena [53]: for a fixed physical barcode size and a fixed capture (same pixels), as the version of the QR Code increases the amount of modules in a given space increases as well.

Thus, when the apparent size of the module in the captured image decreases, the QR Code module is hardly a bunch of image pixels, and we start to see aliasing problems [54]. In turn, this problem leads to a point where QR Codes cannot be fully recognized by the QR Code decoding algorithm. This is even more important if we substitute these black and white modules with colors, where the error in finding the right reference area may lead to huge errors in the color evaluation. Therefore, this sampling problem will accompany the implementation of our proposal taking into account the size of the final QR Code depending on the application field and the typical resolution of the cameras used in those applications.

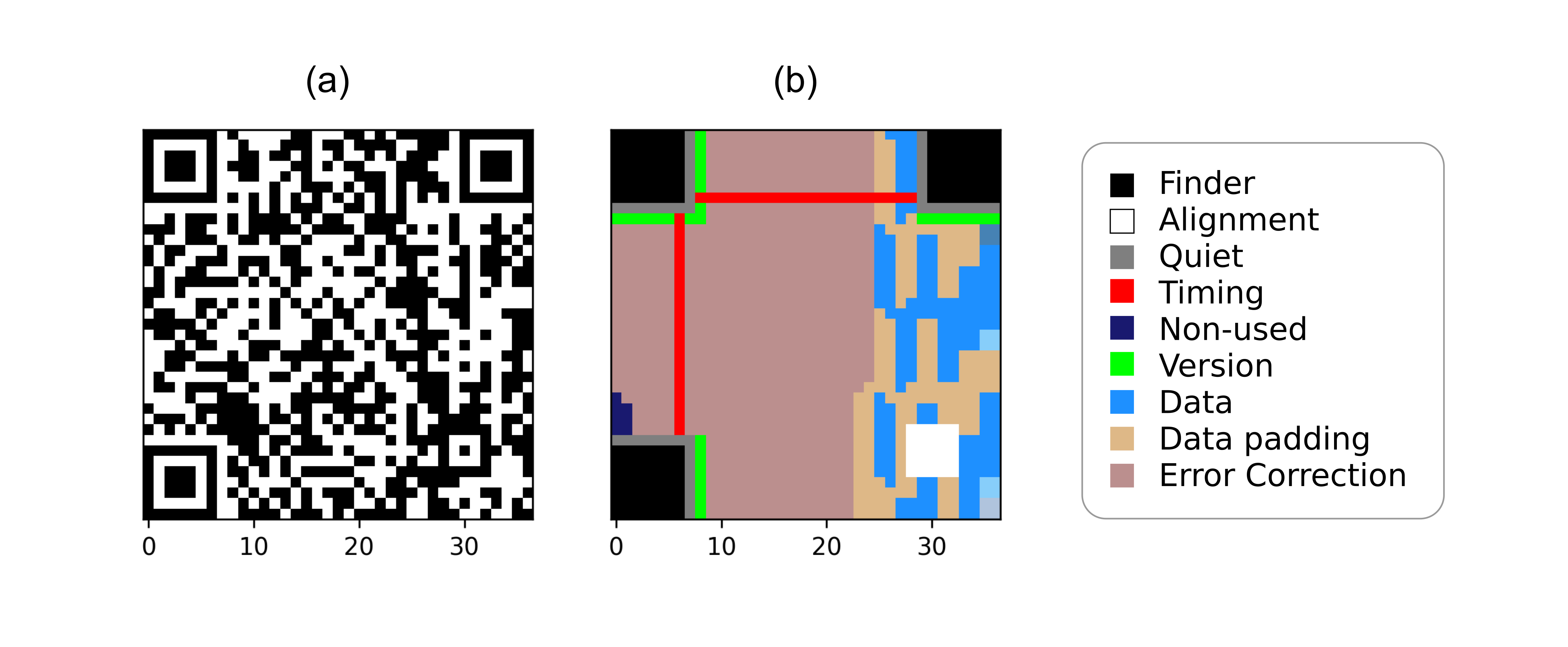

The QR Code standard presents a complex encoding layout (see [fig:qrencoding]). Encoding a message into a QR Code form implies several steps.

First, the message is encoded as binary data and split into various bytes, namely data blocks. Since QR Codes can support different data types, the binary encoding for those data types will be different in order to maximize the amount of data to encode in the barcode (see [tab:qrcapacity]).

Second, additional error correction blocks are computed based on the Reed-Solomon error correction theory [55]. Third, the minimal version of the QR Code is determined, which defines the size of the 2D array to “print” the error correction and data blocks, as a binary image. When this is done, the space reserved for the error correction blocks is larger than the space reserved for the data blocks (see [fig:qrencodingsimple]).

Finally, a binary mask is implemented in order to randomize as much as possible the QR Code encoding [20].

During the generation of a QR Code, the level of error correction (ECC) can be selected, from high to low capabilities: H (30 %), Q (25%), M (15%) and L (7%). This should be understood as the maximum number of error bits that a certain barcode can support (maximum Bit Error Ratio, detailed in [ch:5]). Notice the error correction capability is independent of the version of the QR Code. However, both combined define the maximum data storage capacity of the QR Code. For a fixed version, higher error correction implies a reduction of the data storage capacity of the QR Code.

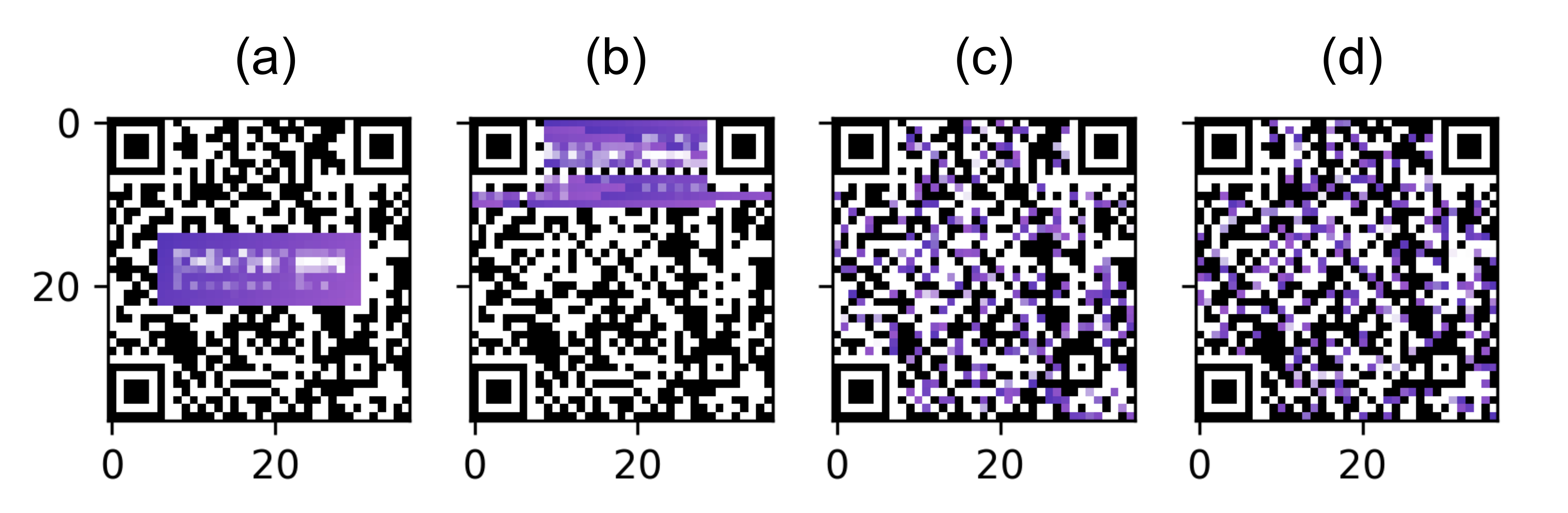

This error correction feature is indirectly responsible for the popularity of QR Codes, since it makes them extremely robust while allowing for a large amount of pixel tampering to accommodate aesthetic features, like allocating brand logos inside the barcode [56], [57] (see [fig:qrswithlogos] and [fig:qrswithlogoscolor]). In this thesis, we will take advantage of such error correction to embed reference colors inside a QR Code.

![Different examples of Halftone QR Codes, introduced by HK. Chu et al. [56]. These QR Codes exploit the error correction features of the QR Code to achieve back-compatible QR Codes with apparent grayscale –halftone– colors.](assets/graphics/chapters/chapter3/figures/jpg/halftone_qrcodes.jpg)

![Original figure from Garateguy et al. (© 2014 IEEE) [57], different QR Codes with color art are shown: (a) a QR Code with a logo overlaid; (b) a QArt Code [58], (c) a Visual QR Code; and (d) the Garateguy et al. proposal.](assets/graphics/chapters/chapter3/figures/jpg/garateguy.jpg)

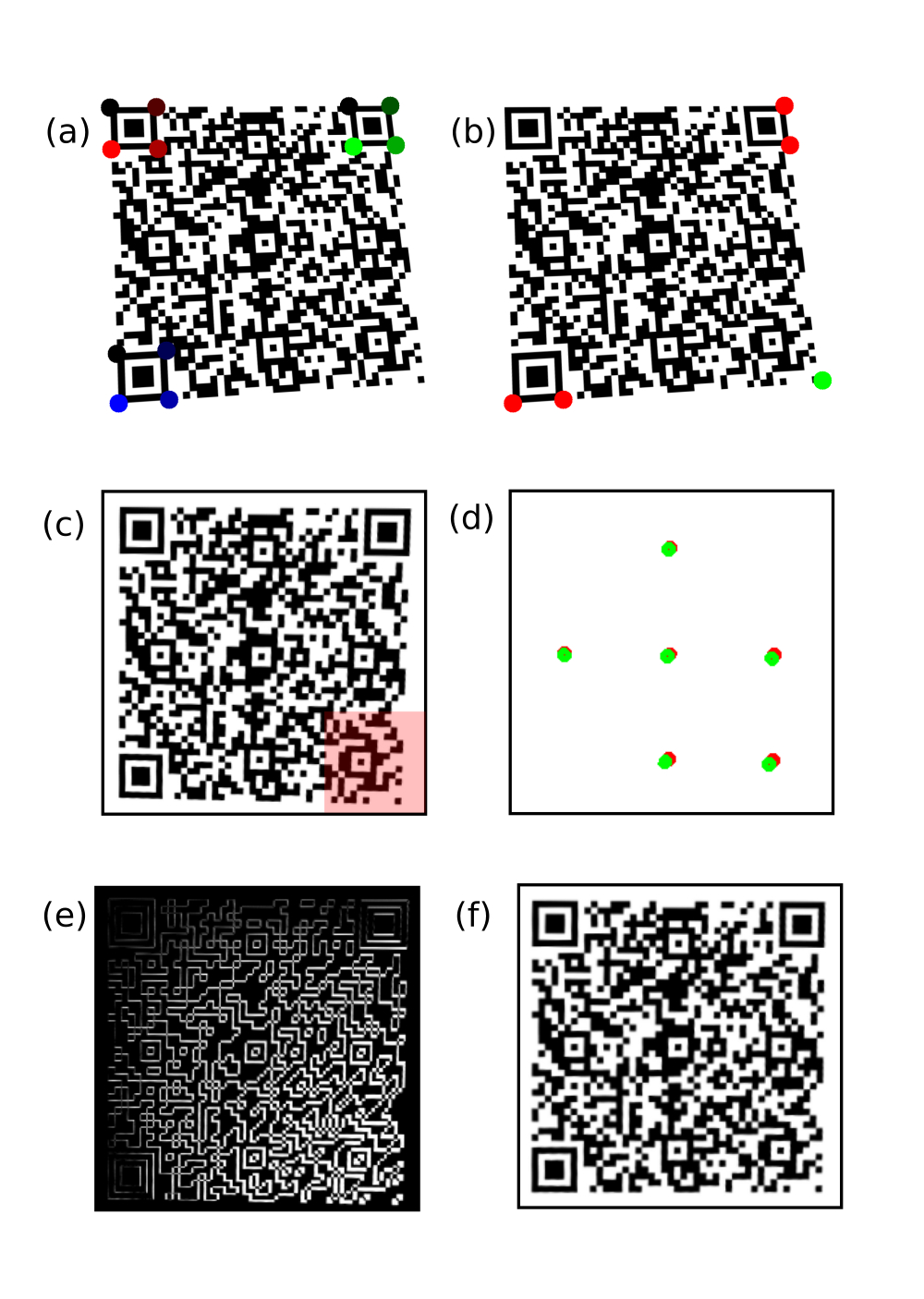

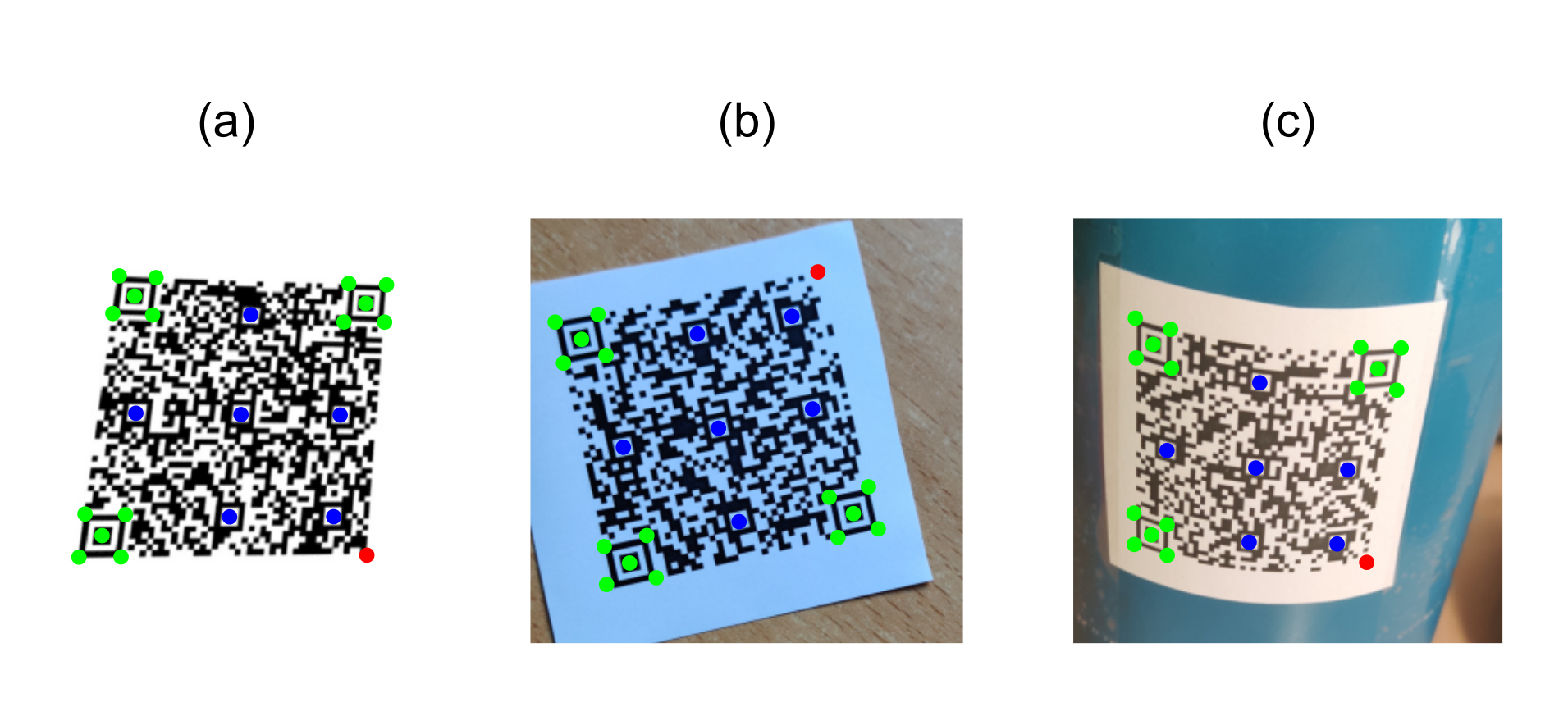

Besides the data encoding introduced before, a QR Code embeds computer vision features alongside with the encoded digital data. These features play a key role when applying computer vision transformations to the acquired images containing QR codes. Usually, they are extracted to establish a correspondence between their apparent positions in the captured image plane and those in the underlying 3D surface topography. The main features we focus on this thesis are:

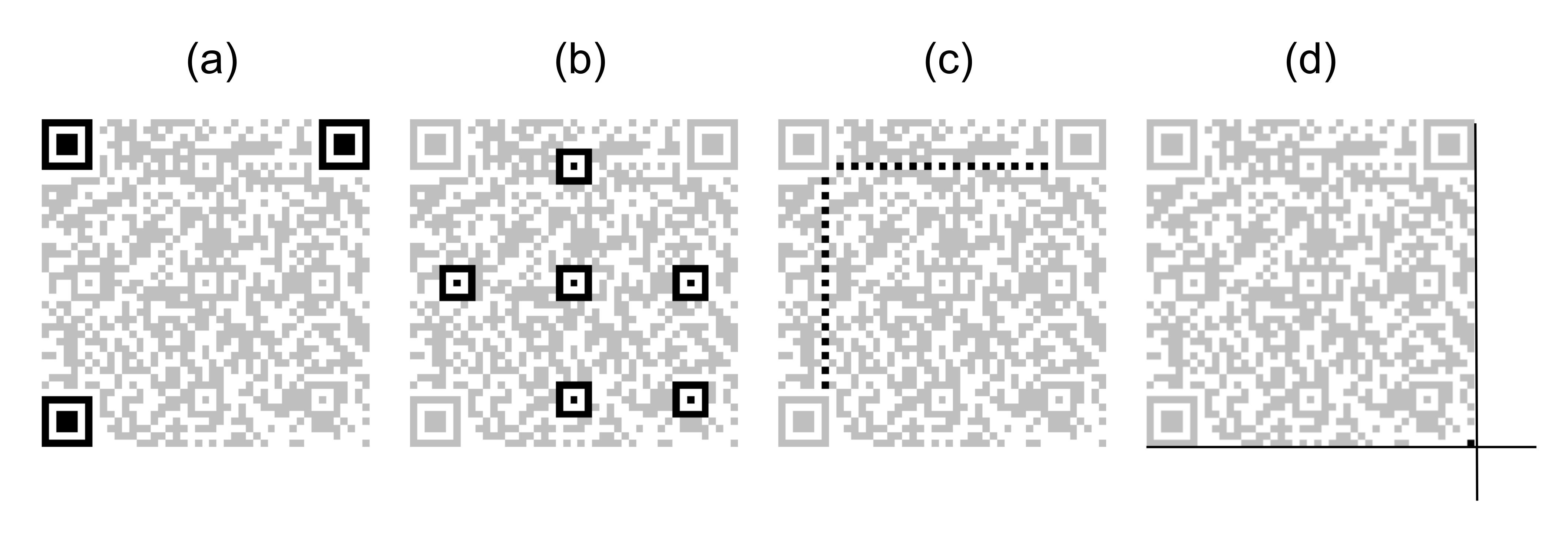

Finder patterns are the corners of the QR Code, it has 3 of them to break symmetry and orient the QR in a scene (see [fig:qrcodeparts].a).

Alignment patterns are placed inside the QR Code to help in the correction of noncoplanar deformations (see [fig:qrcodeparts].b).

Timing patterns are located alongside two borders of the QR Code, between a pair of finder patterns, to help in the correction of coplanar deformations (see [fig:qrcodeparts].c).

The fourth corner is the one corner not marked with a finder pattern. It can be found as the crosspoint of the straight extensions of the outermost edges of two finder patterns (see [fig:qrcodeparts].d). It is useful in linear, coplanar and noncoplanar deformations.

These features are easy to extract due to their spatial properties. They are well-defined as they do not depend on the version of the QR Code, nor the data encoding. The lateral size for a finder pattern is always \(\mathrm{7}\) modules. For an alignment pattern, \(\mathrm{5}\) modules. And timing patterns grow along with each version, but their period is always \(\mathrm{2}\) modules (one black, one white).

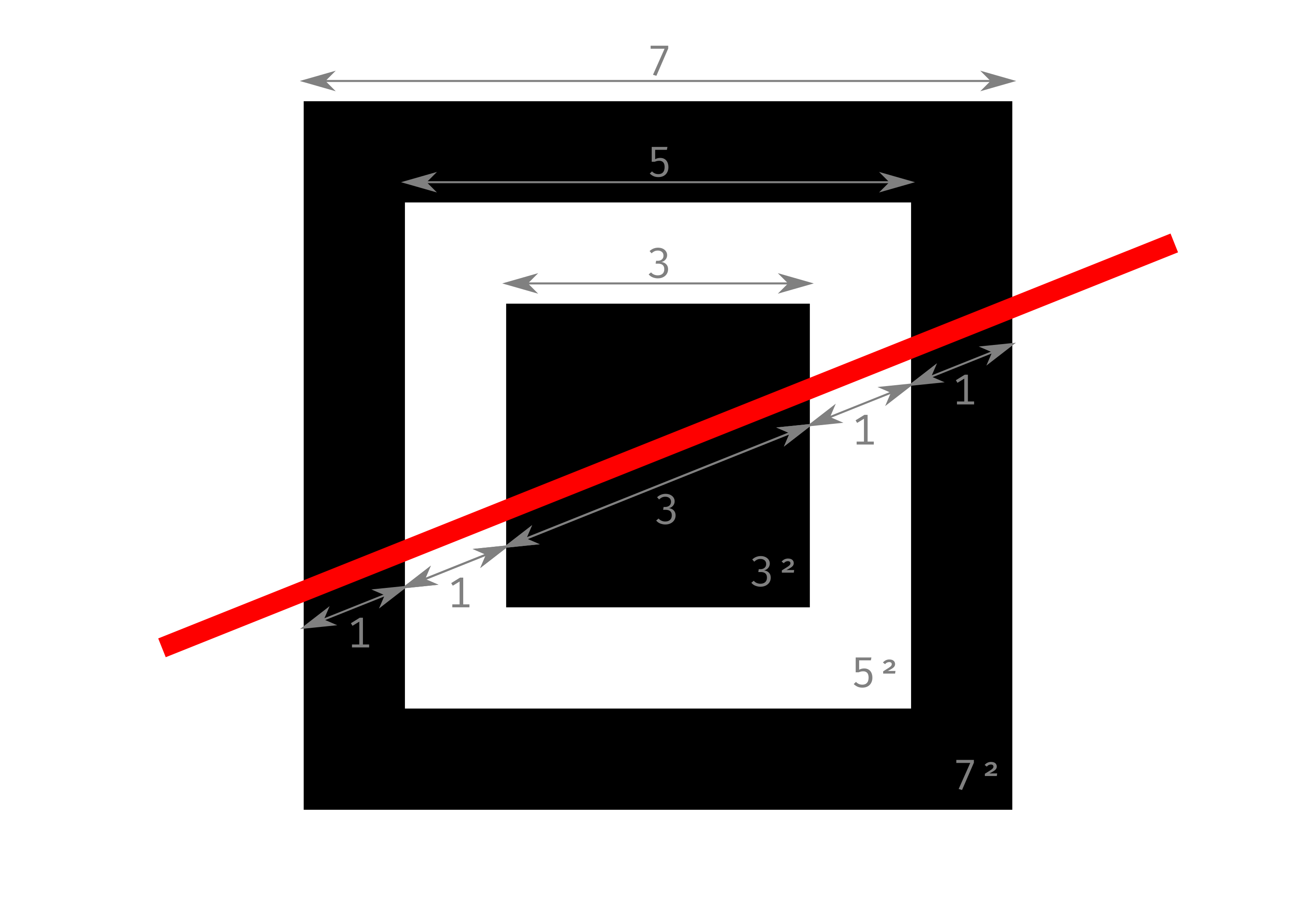

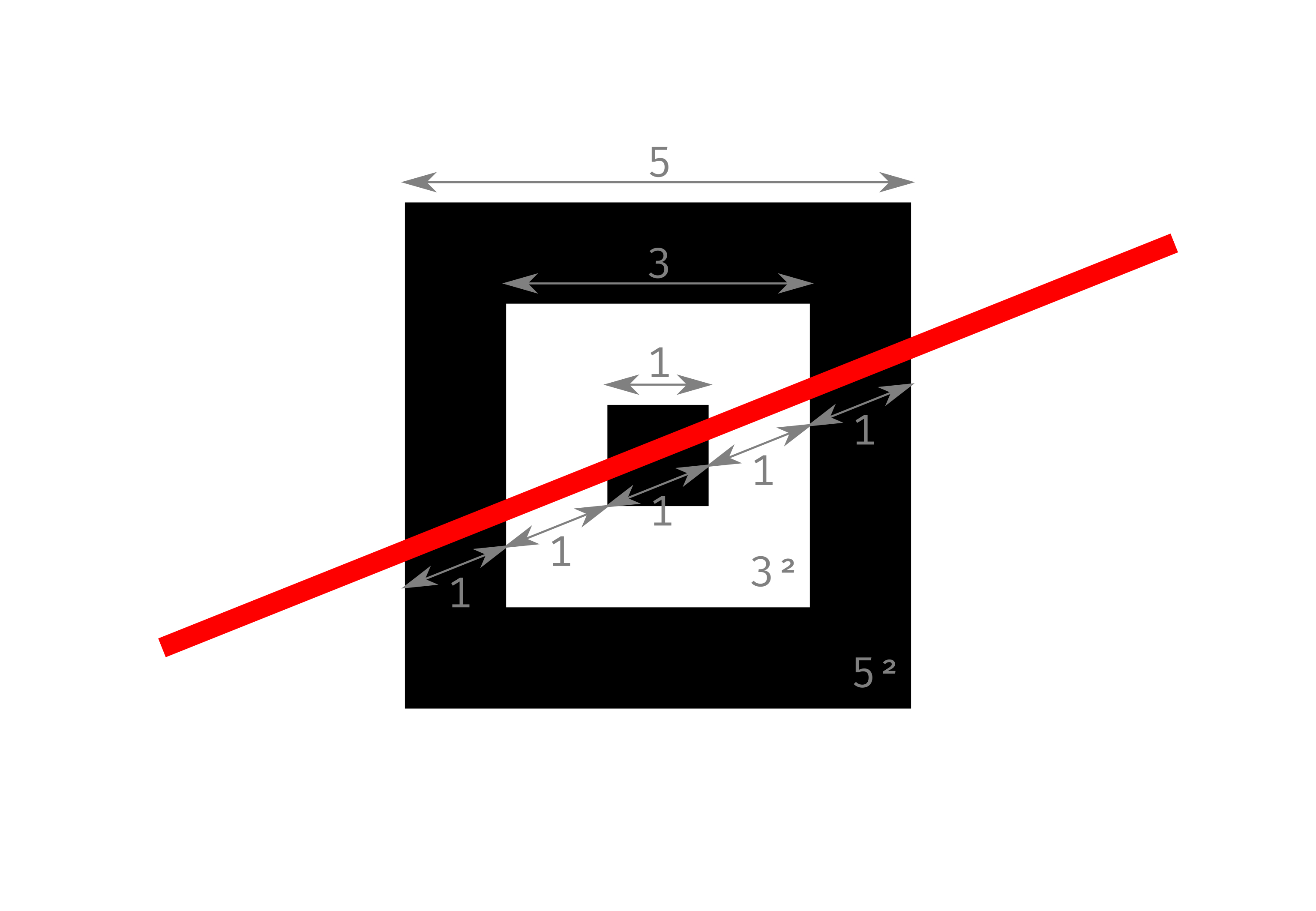

Finder patterns implement a sequence of modules along both axes that follows: \(\mathrm{1}\) black, \(\mathrm{1}\) white, \(\mathrm{3}\) black, \(\mathrm{1}\) white and \(\mathrm{1}\) black, often written as a \(\mathrm{1:1:3:1:1}\) relation (see [fig:finderpattern]). Alignment patterns implement a sequence of modules along both axes that follows: \(\mathrm{1}\) black, \(\mathrm{1}\) white, \(\mathrm{1}\) black, \(\mathrm{1}\) white and \(\mathrm{1}\) black, a \(\mathrm{1:1:1:1:1}\) relation (see [fig:alignmentpattern]).

Thus, the relationship between white and black pixels provides a path to use pattern recognition techniques to extract these features, as these relations are invariant to perspective transformations. Moreover, these linear relations can be expressed as squared area relations, and are still invariant under perspective transformations. This is specially useful when using extraction algorithms based upon contour recognition [18], [59]. For finder patterns the area relation becomes \(\mathrm{7^2:5^2:3^2}\) (see [fig:finderpattern]); and for alignment patterns, \(\mathrm{5^2:3^2:1^2}\) (see [fig:alignmentpattern]).

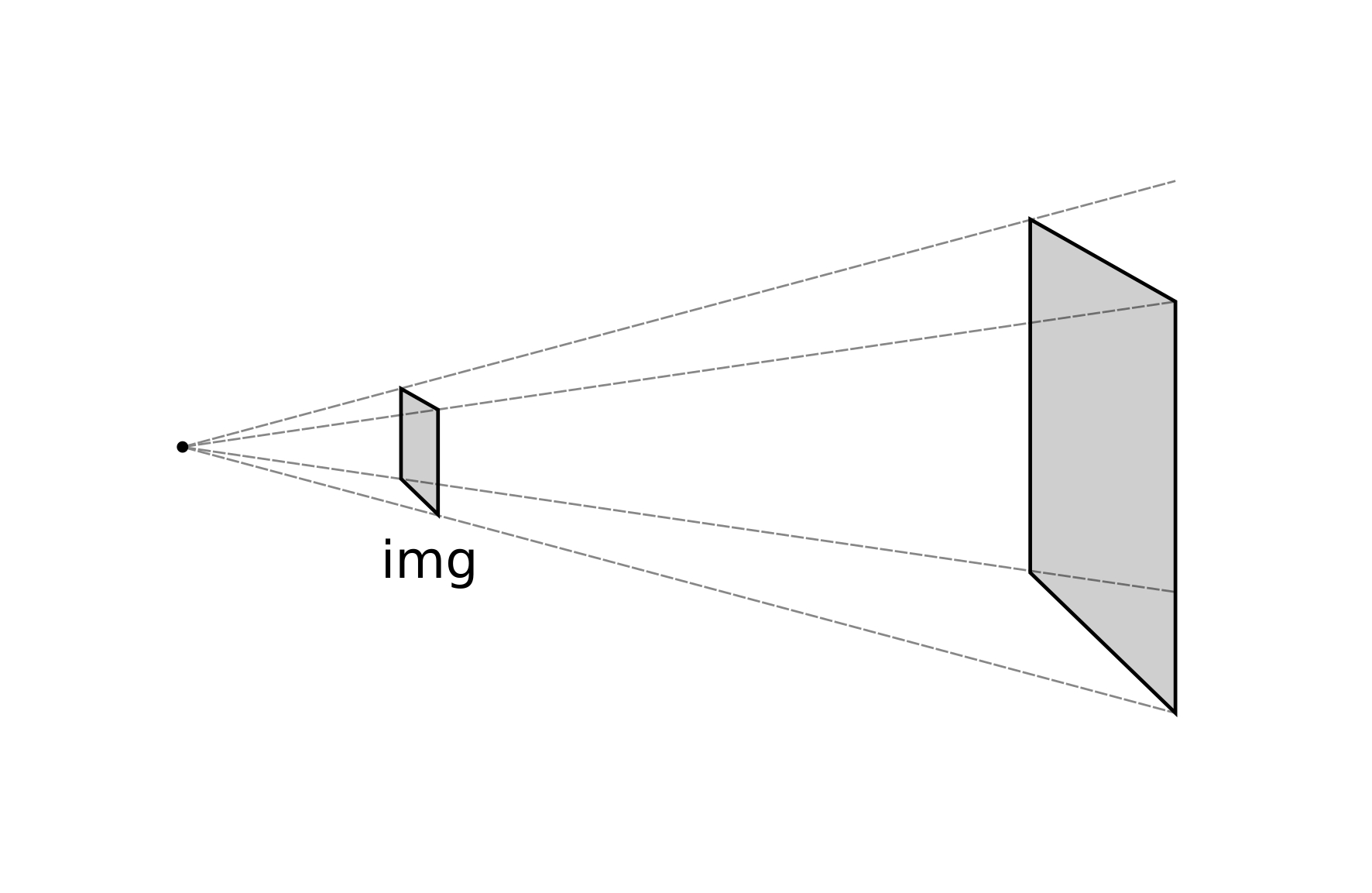

Let us explore a common pipeline towards QR Code readout. First, consider a QR Code captured from a certain point-of-view in a flat surface which is almost coplanar to the capture device (e.g. a box in a production line). Note that more complex applications, such as bottles [60], all sorts of food packaging [61], etc., which are key to this thesis, are tackled in [ch:4].

Due to perspective, the squared shape of the QR Code will be somehow deformed following some sort of projective transformation (see [fig:qrcontours].a). Then, in order to find the QR Code itself within the image field, the three finder patterns are extracted applying contour recognition algorithms based on edge detection [18], [59] (see [fig:qrcontours].b). As explained in [subsec:qrfeatures], each finder pattern candidate must hold a very specific set of area relationships, no matter how they are projected if the projection is linear. The contours that fulfill this area relationship are labeled as candidates finder patterns (see [fig:qrcontours].c).

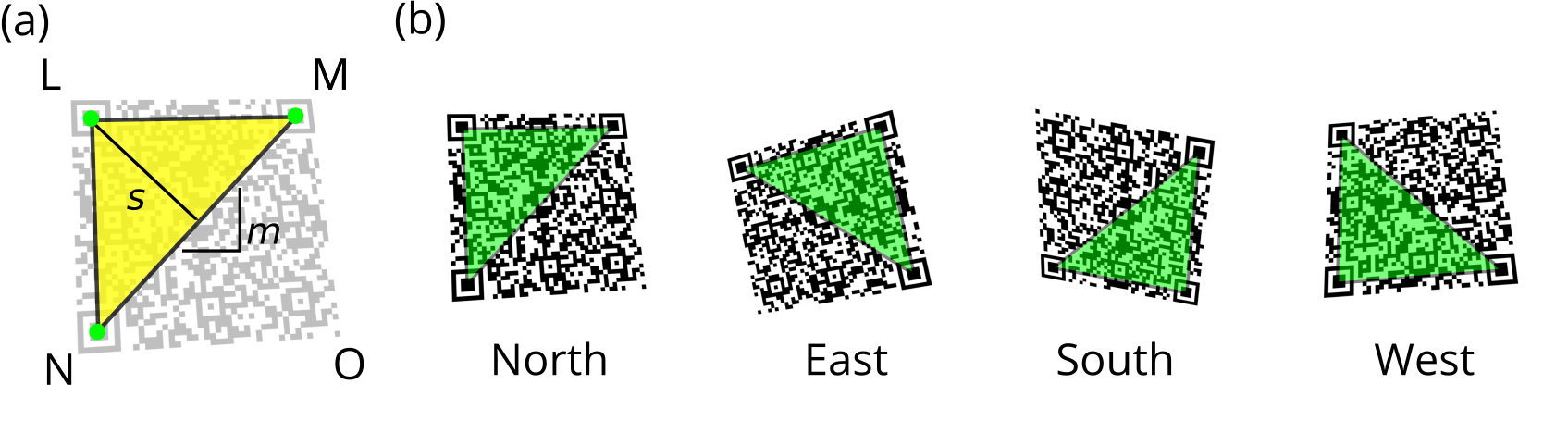

Second, the orientation of the QR Code must be recognized, as in a general situation, the QR Code captured in an image can take any orientation (i.e. rotation). The above-mentioned three candidate finder patterns are used to figure out the orientation of the barcode. To do so, we should bear in mind that one of these corners will correspond to the top-left one and the other two will be the end points of the opposite diagonal (see [fig:qrorientation].a). By computing the distances between the three candidate finder pattern centers and comparing them we can find which distance corresponds to the diagonal and assign the role of each pattern in the QR Code accordingly. The sign of the slope of the diagonal \(m\) and the sign of the distance to the third point \(s\) are computed and analyzed to solve the final assignment of the patterns. The four possible combinations result in 4 possible different orientations: north, east, south, west (see [fig:qrorientation].b). Once the orientation is found, the three corner candidates are labeled following the sequence \({L, M, N}\).

Third, a projection correction is performed to retrieve the QR Code from the scene. The finder patterns can then be used to correct the projection deformation of the image in the QR Code region. If the deformation is purely affine, e.g. a flat surface laying coplanar to the reader device, we can perform the correction with these three points. But, if a more general deformation is presented, e.g. handheld capture in a perspective plane, one needs at least one additional point to carry out such transformation: the remaining fourth corner \(O\) (see [fig:qrorientation].a). As the edges around the 3 main corners were previously determined (see [fig:qrcorrectperspective].a), the fourth corner \(O\) is localized using the crossing points of two straight lines from corners \(M\) and \(N\) (see [fig:qrcorrectperspective].b). With this set of 4 points, a projective transformation that corrects the perspective effect on the QR Code can also be carried out (see [fig:qrcorrectperspective].c).

Notice the calculation of the fourth corner \(O\) can accumulate the numerical error of the previous steps. This might lead to inaccurate results in the bottom-right corner of the recovered code (see [fig:qrcorrectperspective].c) and, in some cases, to a poor perspective correction. This effect is especially strong in low resolution captures, where the modules of the QR Code measure a few image pixels. In order to solve this issue, the alignment patterns are localized (see [fig:qrcorrectperspective].d) in a more restricted and accurate contour search around the bottom-right quarter of the QR Code (see [fig:qrcorrectperspective].e). With this better estimation of a grid of reference points of known (i.e. tabulated) positions a second projective transformation is carried out (see [fig:qrcorrectperspective].f). Normally, having more reference points than strictly needed to compute projective transformations is not a problem thanks to the use of maximum likelihood estimation (MLE) solvers for the projection fitting [62].

Finally, the QR Code readout is performed, this means the QR Code is down-sampled to a resolution where each of the modules occupies exactly one image pixel. After this, the data is extracted following a reverse process of the encoding: the data blocks are interpreted as binary data, also the error correction blocks. The Reed-Solomon technique to resolve errors is applied, and the original data is retrieved.

In [sec:theimageconsistency] we introduced the image consistency problem alongside with a simplified description of the reflectance model (see [fig:colorreproduction_mini]):

\[\mathrm {S_k \propto \int_{-\infty}^{\infty} I(\uplambda) \ R(\uplambda) \ D_k(\uplambda) \ d\uplambda} \label{eq:colorsingalintegral_bis}\]

where a certain light source \(\mathrm{I} (\uplambda)\) illuminates a certain object with a certain reflectance \(\mathrm{R} (\uplambda)\) this scene is captured by a sensor with its response \(\mathrm{D_k} (\uplambda)\); and \(\mathrm {S_k}\) represents the signal captured by this sensor. This model specifically links the definition of color to the sensor response, not only to the wavelength distribution of the reflected light. Thus, our color definition depends on the observer.

Let the sensor \(\mathrm{D_k} (\uplambda)\) be the human eye, then this model becomes the well-known tristimulus model of the human eye. In the tristimulus model, a standard observer is defined from studying the human vision. In 1931 the International Commission of Illumination defined the CIE 1931 RGB and CIE 1931 XYZ color spaces based on the human vision [63], [64]. Since then, the model has been revisited many times defining new color spaces: in 1960 [65], in 1964 [66], in 1976 [67] and so on [68].

Commonly, color spaces referred to a standard observer are called device-independent color spaces. As explained before, we are going to use images which are captured by digital cameras. These images will use device-dependent color spaces, despite the efforts of their manufacturers to solve the color reproduction problem, as they try to match the camera sensor to the tristimulus model of the human eye [69].

Let a color \(\mathbf{s}\) be defined by the components of the camera sensor:

\[\mathbf{s} = (\mathrm{S_r}, \ \mathrm{S_g}, \ \mathrm{S_b}) \label{eq:scolor}\]

where \(\mathrm{S_r}\) , \(\mathrm{S_g}\) and \(\mathrm{S_b}\) are the responses of the three sensors of the camera for the red, green and blue channels, respectively. Cameras do imitate the human tristimulus vision system by placing sensors in the wavelength bands representing those where human eyes have more sensitivity.

Note that \(\mathbf{s}\) is defined as a vector in [eq:scolor]. Although, its definition lacks the specification of its vector space:

\[\mathbf{s} = (r, \ g, \ b) \ \in \mathbb{R}^3 \label{eq:scolor_vector}\]

where \(r\), \(g\), \(b\) is a simplified notation of the channels of the color, and \(\mathbb{R}^3\) is a generic RGB color space. As digital cameras store digital information in a finite discrete representation, \(\mathbb{R}^3\) should become \(\mathbb{N}^3_{[0, 255]}\) for 8-bit images (see [fig:rgb_cube]). This discretization process of the measured signal in the camera sensor is a well-known phenomenon in signal-processing, it is called quantization [70]. All to all, we can write some common color spaces in this notation:

\(\mathbb{N}_{[0, 255]}\) is the grayscale color space of 8-bit images.

\(\mathbb{N}^3_{[0, 255]}\) is the RGB color space of 24-bit images (8-bits/channel).

\(\mathbb{N}^3_{[0, 4096]}\) is the RGB color space of 36-bit images (12-bits/channel).

\(\mathbb{N}^3_{[0, 65536]}\) is the RGB color space of 48-bit images (16-bits/channel).

\(\mathbb{N}^4_{[0, 255]}\) is the CMYK color space of 32-bit images (8-bits /channel).

\(\mathbb{R}^3_{[0, 1]}\) is the RGB color space of a normalized image, specially useful when using computer vision algorithms.

The introduction of color spaces as vector spaces brings all the mathematical framework of geometric transformations. We can now define a color conversion as the application between two color spaces.

For example, let \(f\) be a color conversion between an RGB and a CMYK space:

\[f: \mathbb{N}^3_{[0, 255]} \to \mathbb{N}^4_{[0, 255]}\]

this color conversion can take any form. In [sec:theimageconsistency], we saw that the reflectance spectra of the image of an object would be a linear combination of the inks reflectance spectra used to reproduce that object. If we recover that expression from [eq:reproductionsum] and combine it with the RGB color space from [eq:scolor_vector], we obtain:

\[\mathrm{R'(\uplambda)} = \sum_{j}^{c,m,y,k} f_j (r, g, b) \cdot \mathrm{R_j(\uplambda)} \label{eq:reproductionsum_bis}\]

Now, \(\mathrm{R'(\uplambda)}\) is a linear combination of the reflectance spectra of the cyan, magenta, yellow and black inks. The weights of the combination is the CMYK color derived from the RGB color.

In turn, we can express the CMYK color also as a linear combination of the RGB color channels, \(f_i (r, g, b)\) is our color correction here, then:

\[\mathrm{R'(\uplambda)} = \sum_{j}^{c,m,y,k} \left[ \sum_{k}^{r,g,b} a_{jk} \cdot k \right] \cdot \mathrm{R_j(\uplambda)} \label{eq:reproductionsum_rgb}\]

Note that we have defined \(f_i\) as a linear transformation between the RGB and the CMYK color spaces. Doing so is the most common way to perform color transformations between color spaces.

These are the foundations of the ICC Profile standard [71]. Profiling is a common technique when reproducing colors. For example, take [fig:rgb_cube], if the colors are seen displayed on a screen they will show the RGB space of the LED technology of the screen. However, if they have been printed, the actual colors the reader will be looking at will be the linear combination of CMYK inks representing the RGB space, following [eq:reproductionsum_rgb]. Therefore, ICC profiling is present in each color printing process.

Alongside with the described example, here below, we present some of the most common color transformations we will use during the development of this thesis, that include normalization, desaturation, binarization and colorization transformations.

Normalization is the process of mapping a discrete color space with limited resolution (\(\mathbb{N}_{[0, 255]}\), \(\mathbb{N}^3_{[0, 255]}\), \(\mathbb{N}^3_{[0, 4096]}\), ...) into a color space which is limited to a certain range of values, normally from 0 to 1 \(\mathbb{R}_{[0, 1]}\), but offers theoretically infinite resolution 1. All our computation will take place in such normalized spaces. Formally the normalization process is a mapping that follows:

\[f_{normalize} : \mathbb{N}^K_{[0, \ 2^n]} \to \mathbb{R}^K_{[0, 1]} \label{eq:color_normalize}\]

where \(K\) is the number of channels of the color space (i.e. \(K = 1\) for grayscale, \(K = 3\) for RGB color spaces, etc.) and \(n\) is the bit resolution of the color space (i.e. 8, 12, 16, etc.).

Note that a normalization mapping might not be that simple so only implies a division by a constant. For example, an image can be normalized using an exponential law to compensate camera acquisition sensitivity, etc. [72], [73].

Desaturation is the process of mapping a color space into a grayscale representation of this color space. Thus, formally this mapping will always be a mapping from a vector field to a scalar field. We will assume the color space has been previously normalized following a mapping (see [eq:color_normalize]). Then:

\[f_{desaturate} : \mathbb{R}^K_{[0, 1]} \to \mathbb{R}_{[0, 1]} \label{eq:color_desaturate}\]

where \(K\) is still the number of channel the input color space has. There exist several ways to desaturate color spaces, for example, each CIE standard incorporates different ways to compute their luminance model [64].

Binarization is the process of mapping a grayscale color space into a binary color space, this means the color space gets reduced only to a representation of two values. Formally:

\[f_{binarize} : \mathbb{R}_{[0, 1]} \to \mathbb{N}_{[0, 1]} \label{eq:color_binarize}\]

Normally, these mappings need to define some kind of threshold to split the color space representation into two subsets. Thresholds can be as simple as a constant threshold or more complex [74].

Colorization is the process of mapping a grayscale color space into a full-featured color space. We can define a colorization as:

\[f_{colorize} : \mathbb{R}_{[0, 1]} \to \mathbb{R}^K_{[0, 1]} \label{eq:color_colorize}\]

where \(K\) is now the number of channels the output color space has. This process is more unusual than the previous mappings presented here. It is often implemented in those algorithms that pursue image restoration [75]. In this work, colorization will be of a special interest in [ch:5].

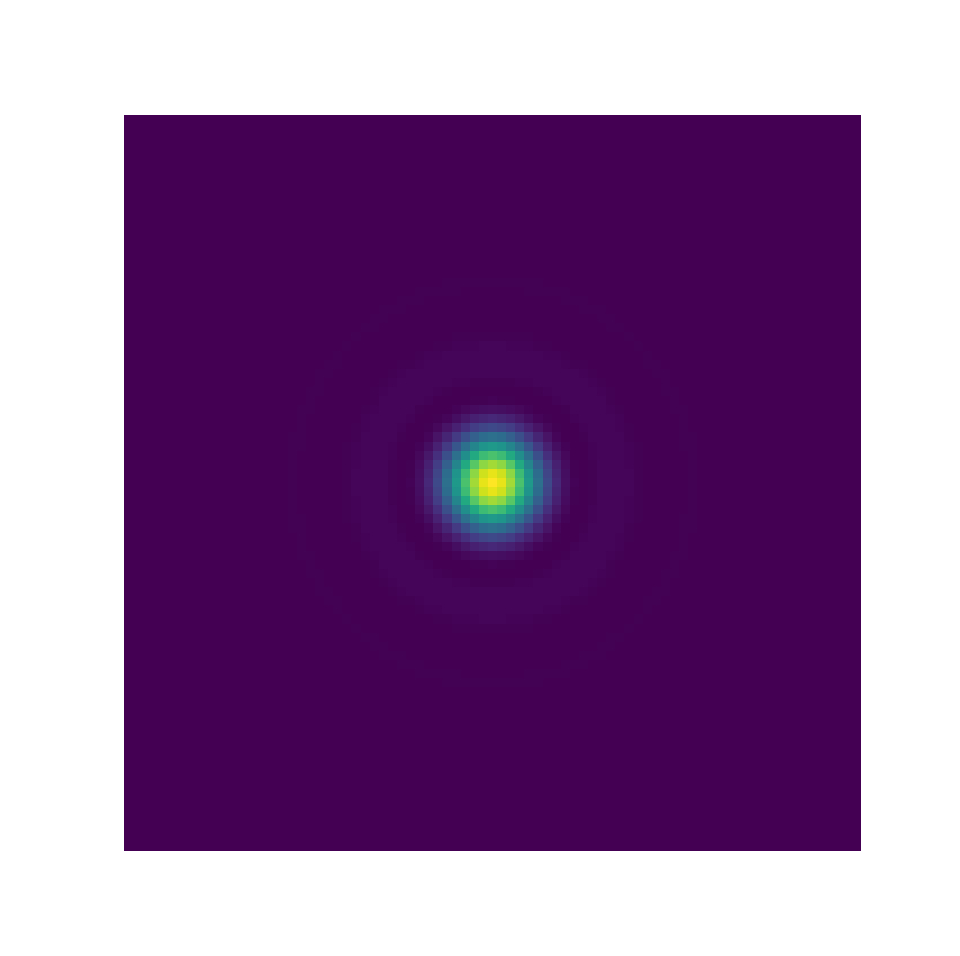

A digital image is the result of capturing a scene with an array of sensors, e.g. the camera [11], following [eq:colorsingalintegral_bis]. A monochromatic image \(I\), means we only have one color channel in our color space. This image can be seen as a mapping between a vector field, the 2D plane of the array of sensors, and a scalar field, the intensity of light captured by each sensor:

\[I: \mathbb{R}^2 \to \mathbb{R} \label{eq:image_mapping}\]

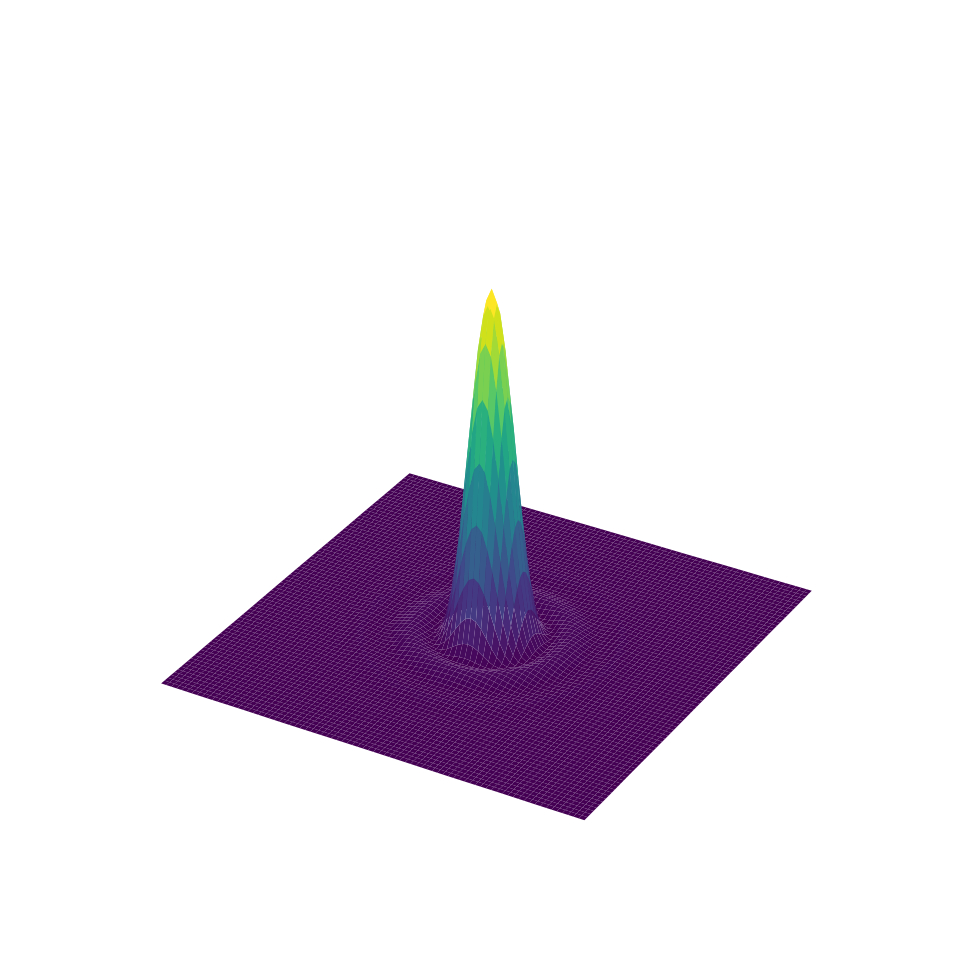

where \(\mathbb{R}^2\) is the capture plane of the camera sensors and \(\mathbb{R}\) is a generic grayscale color space. [fig:img_vs_profile] shows an example of this: an Airy disk [76] is represented first as an image, where the center of the disk is visualized as a spot; also, the Airy disk is shown to be a function of the space distribution.

Altogether, we can extend [eq:image_mapping] definition to images that are not grayscale. This means each image can be defined as a mapping from the 2D plane of the array of sensors to a color space, which is in turn also a vector space:

\[I: \mathbb{R}^2 \to \mathbb{R}^K\]

where \(\mathbb{R}^K\) is now a vector field also, thus the color space of the image can be RGB, CMYK, etc. Note digital cameras can capture more than the above-mentioned color bands, and there exists a huge field of multi-spectral cameras [77], which is not the focus of our research.

As we pointed out when defining color spaces, digital images are captured using discrete variable color spaces. But this process also affects the spatial domain of the image. The process of discretizing the plane \(\mathbb{R}^2\) is called sampling. And, the process of discretizing the illumination data in \(\mathbb{R}\) data is called quantization. Following this, [eq:image_mapping] can be rewritten as:

\[I: \mathbb{N}_{[0, n]} \times \mathbb{N}_{[0,m]} \to \mathbb{N}_{[0, 255]} \label{eq:image_mapping_discrete}\]

which represents an 8-bit grayscale2 image of size \((n, m)\). This definition of an image allows us to differentiate the domain transformations of the image (i.e. geometrical transformations to the perspective of the image); from the image transformations (i.e. color corrections to the color space to the image).

In [ch:4], when dealing with the extraction of QR Codes from challenging surfaces we used the definition in [eq:image_mapping] to refer to the capturing plane of the image and how it relates to the underneath surface where the QR Code is placed by projective laws.

In [ch:5] we used the definition of [eq:image_mapping_discrete] to detail our proposal for the encoding process of colored QR Codes. In this context, it is interesting reducing the notation of image definition taking into account that images can be regarded as matrices. So, [eq:image_mapping_discrete] can be rewritten in a compact form as:

\[I \in [0, 255]^{n \times m}\]

where \(I\) is now a matrix which exist in a matrix space \([0, 255]^{n \times m}\). This vector space contains both the definition of the spatial coordinates of the image and the color space.

As before, we can use this notation to represent different image examples:

\(I \in [0, 255]^{n \times m}\), is an 8-bit grayscale image with size \((n, m)\).

\(I \in [0, 255]^{n \times m \times 3}\), is an 8-bit RGB image with size \((n, m)\).

\(I \in [0, 1]^{n \times m}\), is a float normalized grayscale image with size \((n, m)\).

\(I \in \{0, 1\}^{n \times m}\), is a binary image with size \((n, m)\).

Finally, we can reintroduce the color space transformations presented before, from [eq:color_normalize] to [eq:color_colorize], for images as bitmap matrices:

Normalization: \[% \label{eq:normalizationdef} f_{normalize}: [0,255]^{n \times m \times 3} \to [0,1]^{n \times m \times 3}\]

Desaturation: \[% \label{eq:desaturationdef} f_{desaturate}: [0,1]^{n \times m \times 3} \to [0,1]^{n \times m}\]

Binarization: \[% \label{eq:binarizationdef} f_{binarize}: [0,1]^{n \times m} \rightarrow \{0,1\}^{n \times m}\]

Colorization: \[% \label{eq:binarizationdef} f_{colorize}: [0,1]^{n \times m} \rightarrow [0,1]^{n \times m \times 3}\]

In 1990, Guido van Rosum released the first version of Python, an open-source, interpreted, high-level, general-purpose, multi-paradigm (procedural, functional, imperative, object-oriented) programming language [78]. Since then, Python has released three major versions of the language: Python 1 (1990), Python 2 (2000) and Python 3 (2008) [79].

At the time we started to work in this thesis, Python was one of the most popular programming languages both in the academia and in the industry [80]. As Python is an interpreted language, the actual code of Python is executed by the Python Virtual Machine (PVM), this opens the door to create different PVM written with different compiled languages, the official Python distribution is based on a C++ PVM, that is why the mainstream Python distribution is called ’CPython’ [81].

CPython allows the user to create bindings to C/C++ libraries, this was specially useful for our research. OpenCV is a widely-known tool-kit for computer vision applications, which is written in C++, but presents bindings to other languages like Java, MATLAB or Python [82].

Altogether, we decided to use Python as our main programming language. Both achieving the rapid script capabilities that Python offers, alongside with standard libraries from Python and C++. The research started with Python 3.6 and ended with Python 3.8, due to the Python’s development cycle.

Let us detail the stack of standard libraries used in the development of this thesis:

Python environment: we started using Anaconda,

an open-source Python distribution that contained pre-compiled packages

ready to be used, such as OpenCV [83]. We adopted also pyenv, a

tool to install Python distributions and manage virtual environments

[84]. Later on, we

started to use docker technology, light virtual machines to

enclose the PVM and our programs [85].

Scientific and data: we adopted the well-known

numpy / scipy / matplotlib

stack:

numpy is a C++ implementation of array

representation (MATLAB-like) for Python [86],

scipy is a compendium of common mathematical

operations fully compatible with NumPy arrays. Often SciPy implements

bindings to consolidated calculus frameworks written in C++ and Fortran,

such as OpenBlas [87],

matplotlib is a 2D graphics environment we used to

represent our data [88].

NumPy, SciPy and Matplotlib are the entry point to a huge ecosystem of packages that use them as their core. When processing datasets, two main packages were used,

pandas is an abstraction layer to the previous

stack, where data is organized in spreadsheets (like Excel, Origin Lab,

etc.) [89],

xarray is another abstraction layer to the previous

stack, with labeled N-dimensional arrays, xarray can be regarded as the

N-dimensional generalization of pandas [90].

Images manipulation: there is a huge ecosystem regarding image manipulation in Python, previous to computer vision, we adopted some packages to read and manipulate images,

pillow is the popular fork from the unmaintained

Python Imaging Library, we used Pillow specially to manipulate the image

color spaces, e.g. profile an image from RGB to be printed in CMYK [91],

imageio was used as an abstraction layer from

Pillow, which uses Pillow and other I/O libraries (such as

rawpy) to read images and convert them directly to NumPy

matrices. We standardized our code to read images using this package

instead of using other solutions (SciPy, Matplotlib, Pillow, OpenCV,

...) [92],

imgaug was used to expand image datasets, by tuning

randomly parameters of the image (illumination, contrast, etc.). This

image augmentation technique is well-known to increase datasets

when training computer vision models [93].

Computer vision: we mainly adopted OpenCV as our

main framework to perform feature extraction algorithms, affine and

perspective corrections and other operations [59]. Despite this, other popular

frameworks were used for some applications, such as

scikit-learn [94], scikit-image

[95],

keras [96], etc.

QR Codes: regarding the encoding of QR Codes we

adopted mainly the package python-qrcode and used it as a

base to create our Color QR Codes [97]; regarding the decoding of the QR Codes,

there exists different frameworks. We worked with:

zbar is a light C++ barcode scanner, which decodes

QR Codes and other 1D and 2D barcodes [98]. Among the available Python bindings to

this library we chose the pyzbar library [99],

zxing is a Java bar code scanner, similar to ZBar

(formerly maintained by Google), and it is the core of most of Android

QR Code scanners [100].

As this library was not written in Python we did not use it on a daily

basis, but we kept it as secondary QR Code scanner.

In [ch:3] we have introduced the popular QR codes [20], which have become part of mainstream culture. With the original applications in mind (e.g. a box in a production line), the QR Codes were designed, first, to be placed on top of flat surfaces, second, laying coplanar to the reader device.

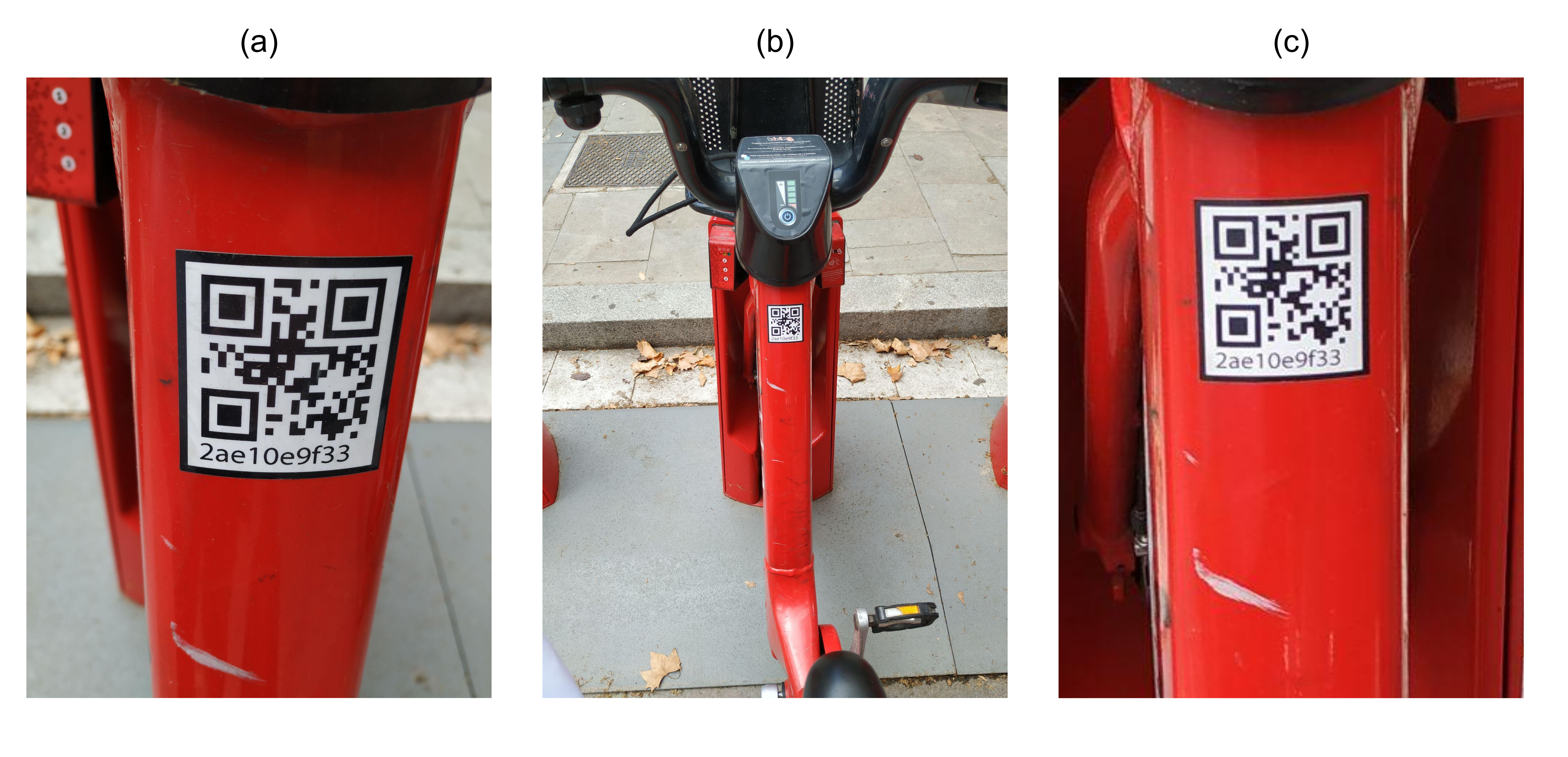

But today, users also apply QR Codes to non-planar surfaces like bottles [60], all sorts of food packaging [61] (like meat [101], fish [102] and vegetables [103]), vehicles, handrails, etc. (see [fig:qrcodebike].a). Also, QR Codes can incorporate biomedical [104], environmental [105] and gas [30] sensors. All these applications involve surfaces that pose challenges to their readout, especially when the QR Codes are big enough to show an evident curvature or deformation.

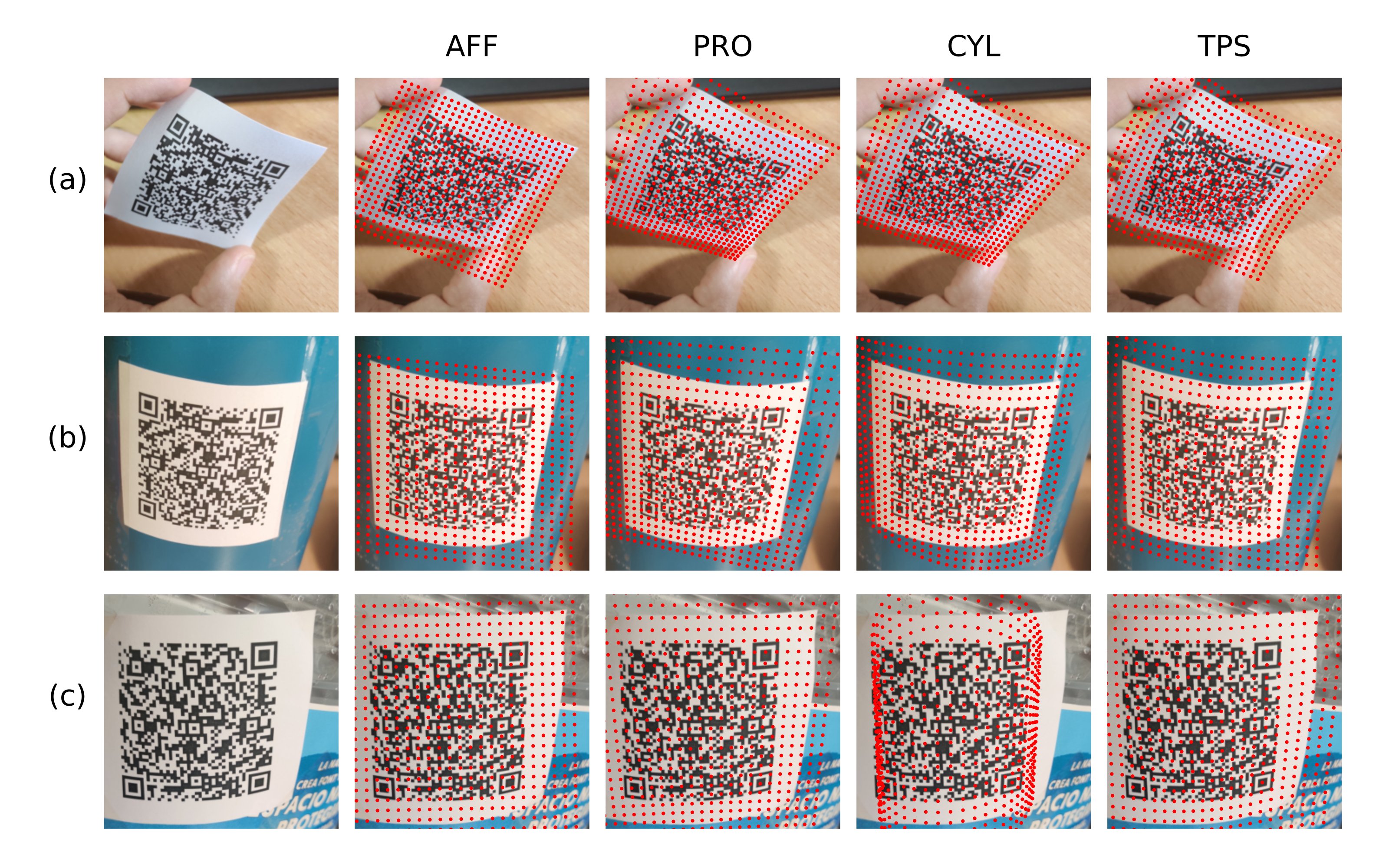

On top of that, in the most common uses, readout is carried out by casual users holding handheld devices (like smartphones) in manifold angles and perspectives. Surprisingly, these perspective effects are not tackled by the original QR Code standard specification, but are so common that are addressed in most of the state-of-the-art QR Code reader implementations [59], [98], [100]. Still, the issues caused by a non-flat topography remain mostly unsolved, and the usual recommendation is just acquiring the QR Code image from a farther distance, where curvature effects turn apparently smaller thanks to the laws of perspective (see [fig:qrcodebike].b and [fig:qrcodebike].c.). This however is a stopgap measure rather than a solution, that fails frequently when the surface deformation is too high or the QR Code is too big.

Other authors have already demonstrated that it is possible to use the QR Code itself to fit the surface underneath to a pre-established topography model. These proposals only work well with surfaces that resemble the shape model assumed (e.g. a cylinder, a sphere, etc.) and mitigate the problem just for a limited set of objects and surfaces, for which analytical topography models can be written.

Regarding perspective transformation models, Sun et al. proposed the idea of using these transformations as a way to enhance readability in handheld images from mobile phones [106]. This idea was explored also by Lin and Fuh, showing that their implementation performed better than ZXing [107], a commercial QR Code decoder formerly developed by Google [100]. Concerning cylindrical transformations, Li, X. et al. [108], Lay et al. [109], [110] and Li, K. [111] reported results on QR Codes placed on top of cylinders. More recently, Tanaka introduced the idea of correcting cylindrical deformation using an Image-to-Image Translation Network [112]. Finally, the problem of arbitrary surface deformations has just been explored very recently. Huo et al. suggested a solution based on Back-Propagation Neural Networks [113]. Kikuchi et al. presented a radically different approach from the standpoint of additive manufacturing by 3D printing the QR codes inside those arbitrary surfaces, and thus solving the inverse problem by rendering apparent planar QR Codes during capture [114].

Here, since a general solution for the decoding of QR Codes placed on top of arbitrary topographies is missing, we present our proposal on this matter based on the thin-plate spline 2D transformation [115]. Thin-plate splines (TPS) are a common solution to fit arbitrary data and have been used before in pattern recognition problems: Bazen et al. [116] and Ross et al. [117] used TPS to match fingerprints; Shi et al. used TPS together with Spatial Transformer Networks to improve handwritten character recognition by correcting arbitrary deformations [118], and Yang et al. reviewed the usage of different TPS derivations in the point set registration problem [119].

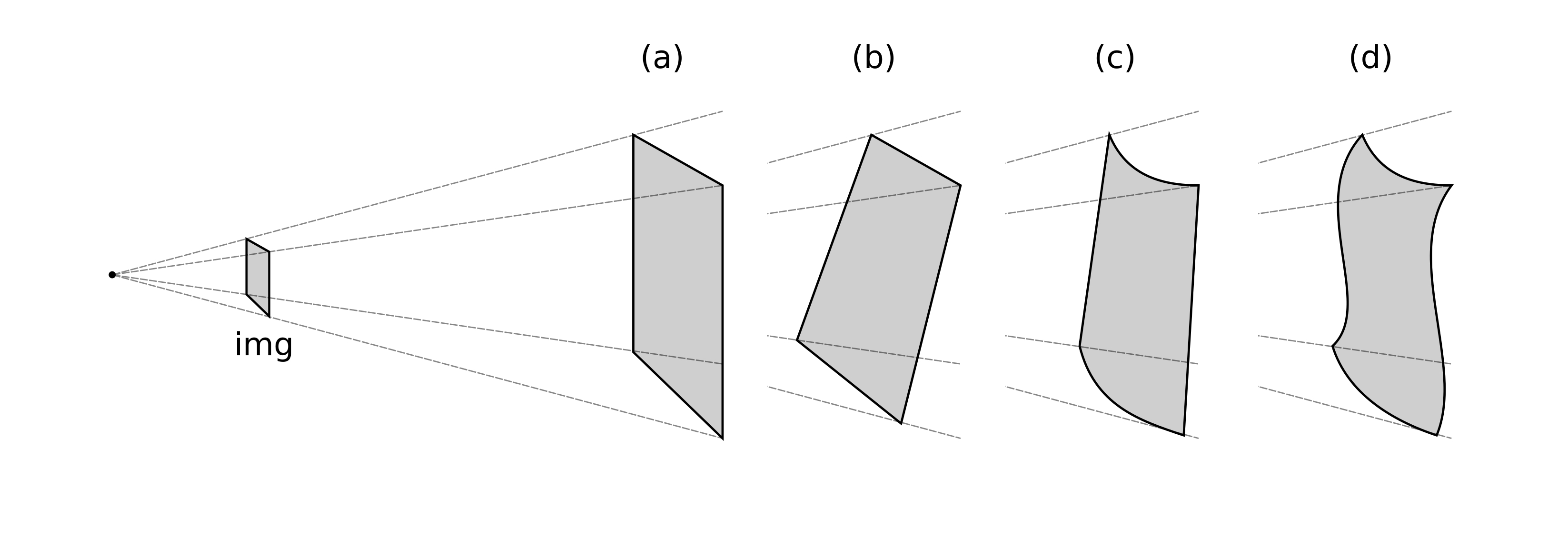

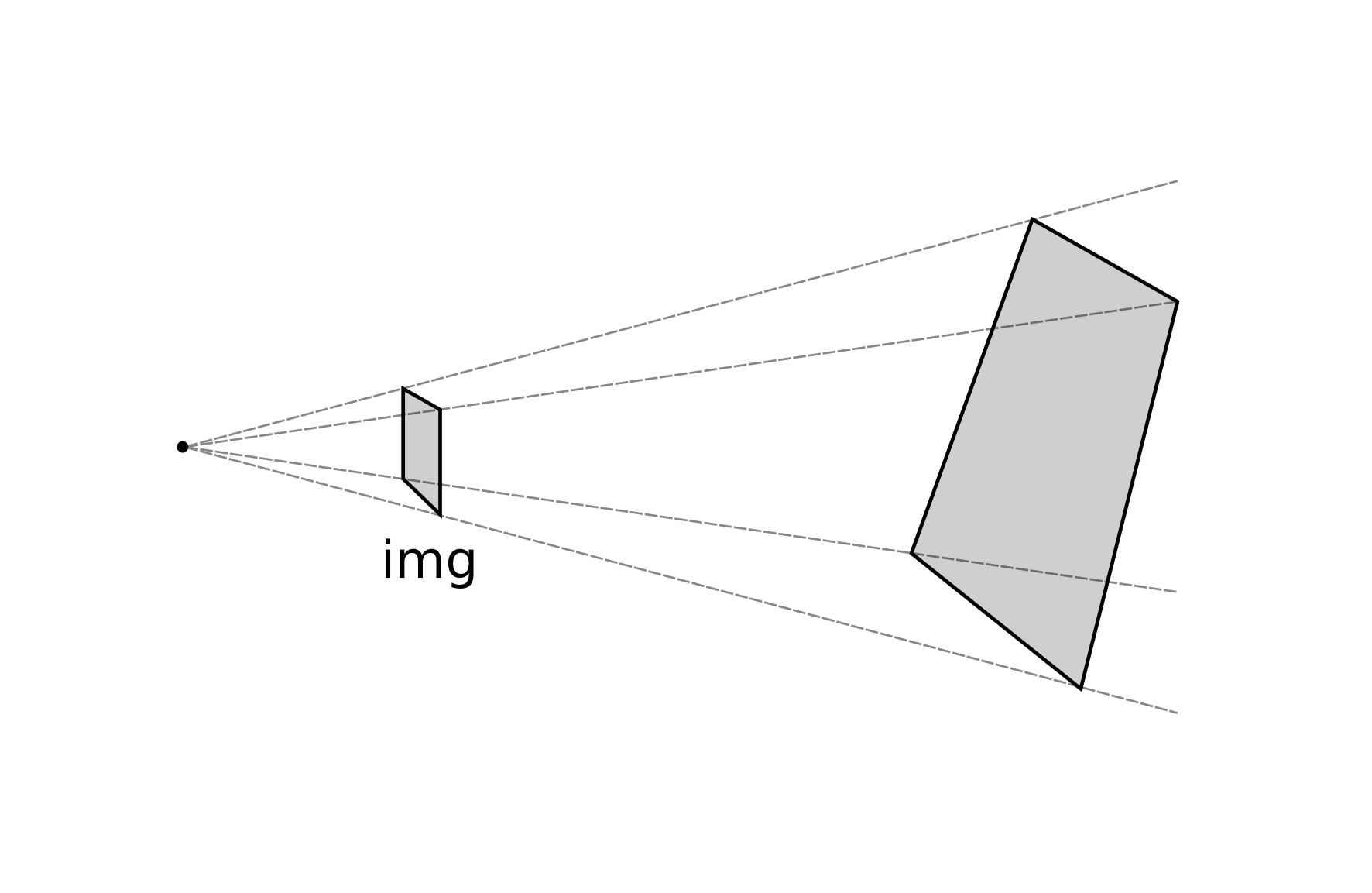

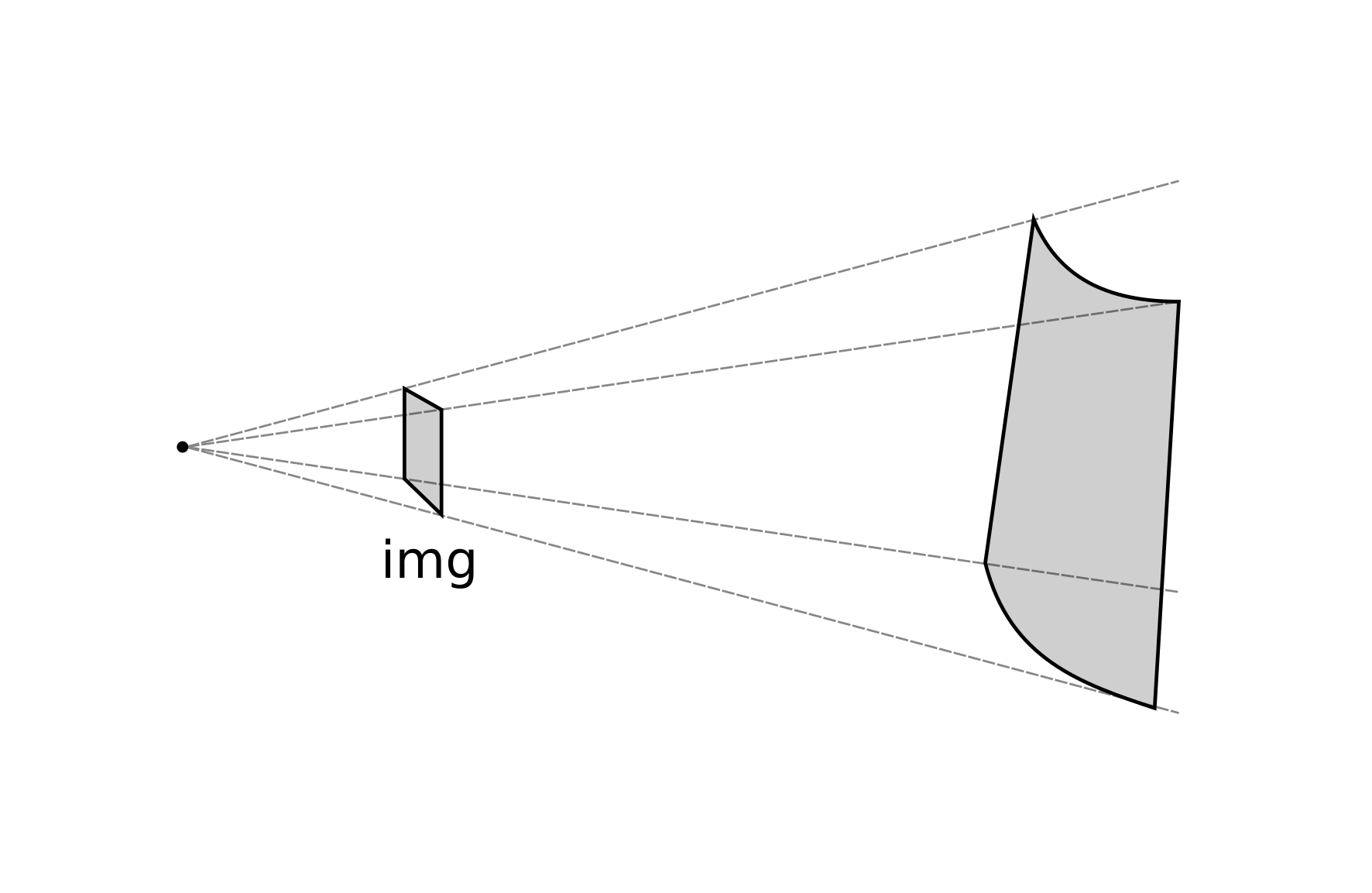

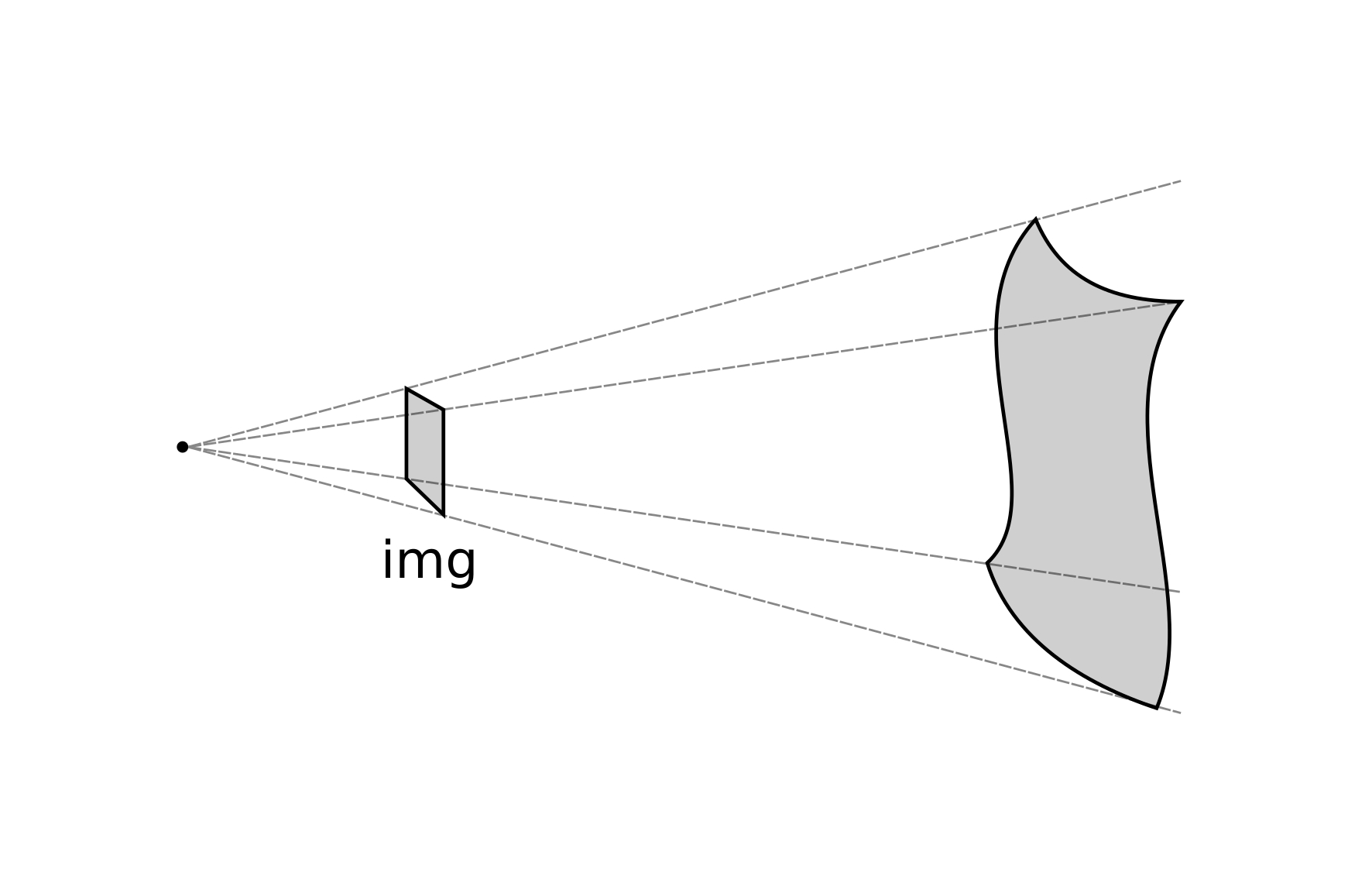

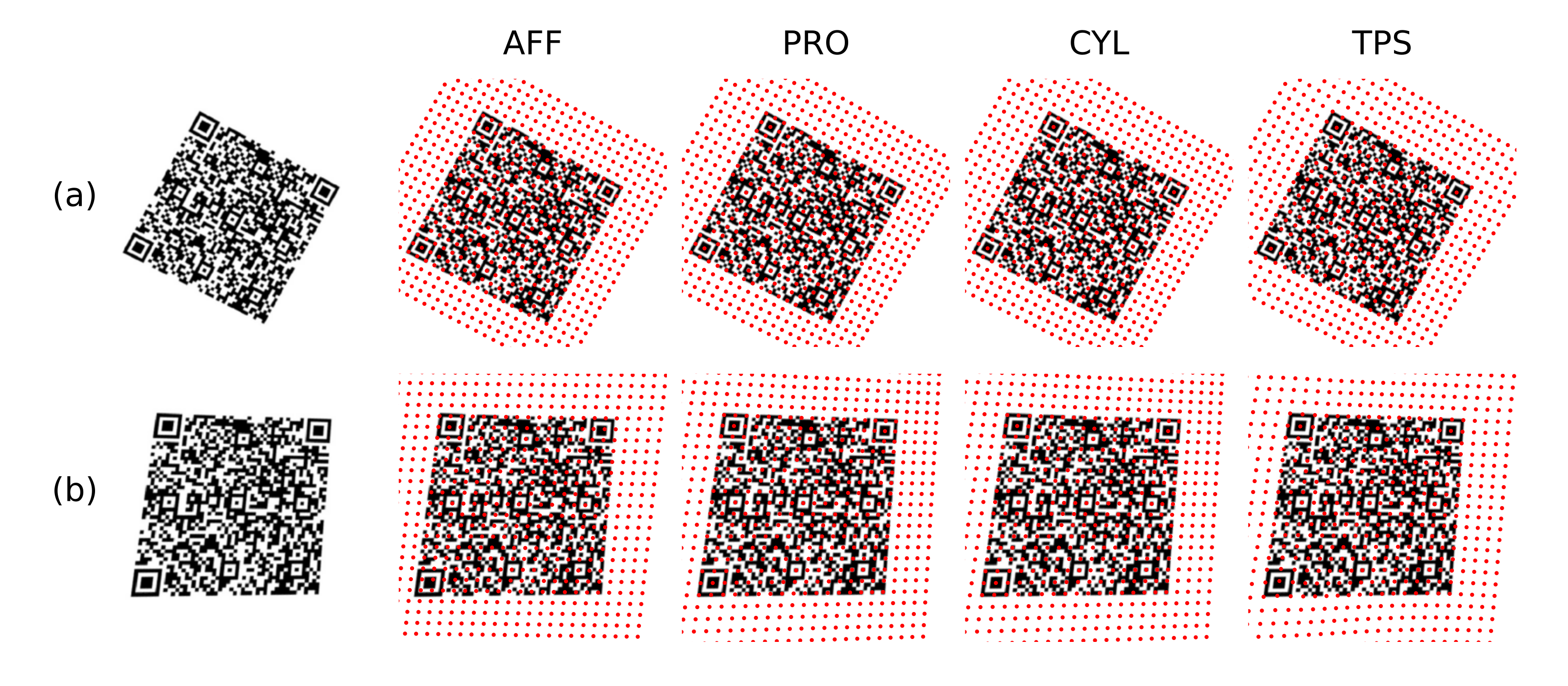

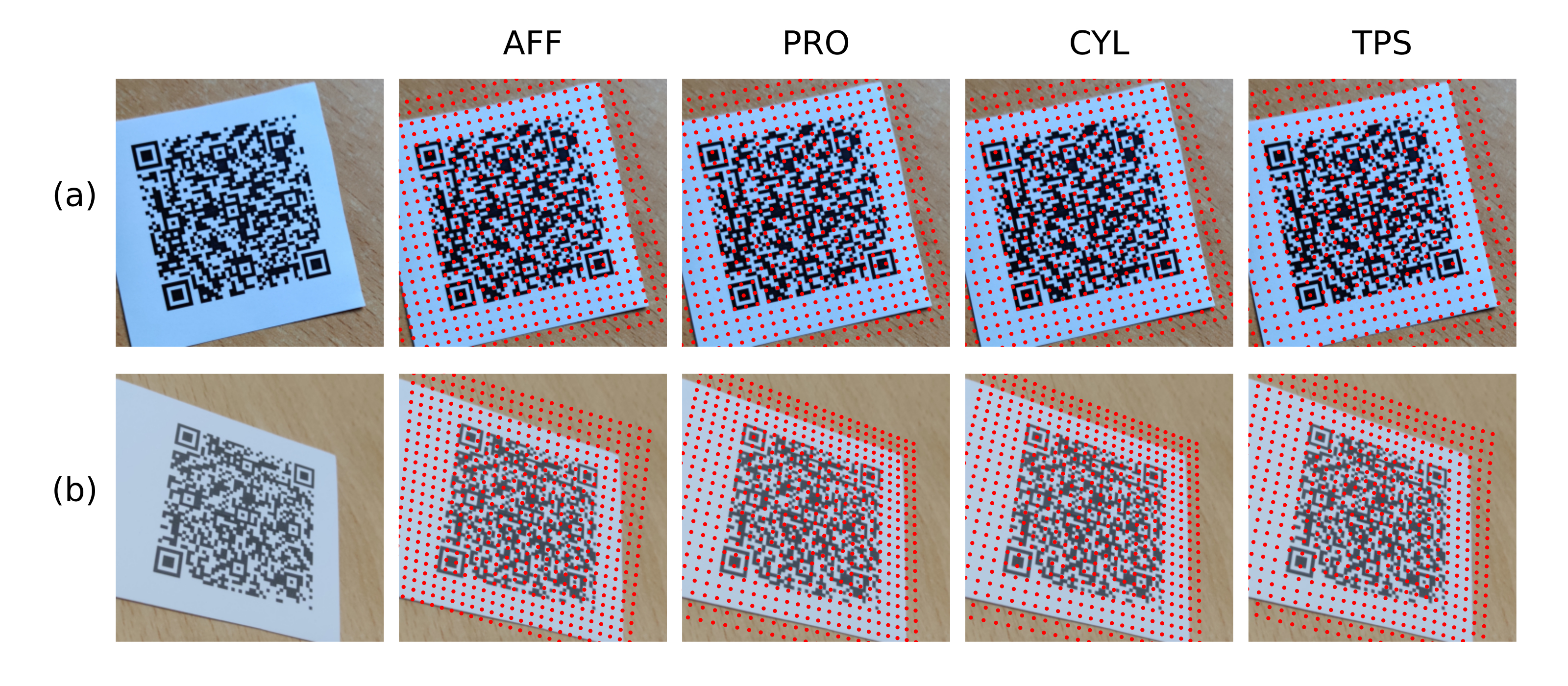

In order to investigate the advantages of the TPS with respect to former approaches, we take here the above-mentioned geometric surface fittings as reference cases, namely: (i) affine coplanar transformations (see [fig:projections].a), (ii) projective transformations (see [fig:projections].b), and (iii) cylindrical transformations (see [fig:projections].c).

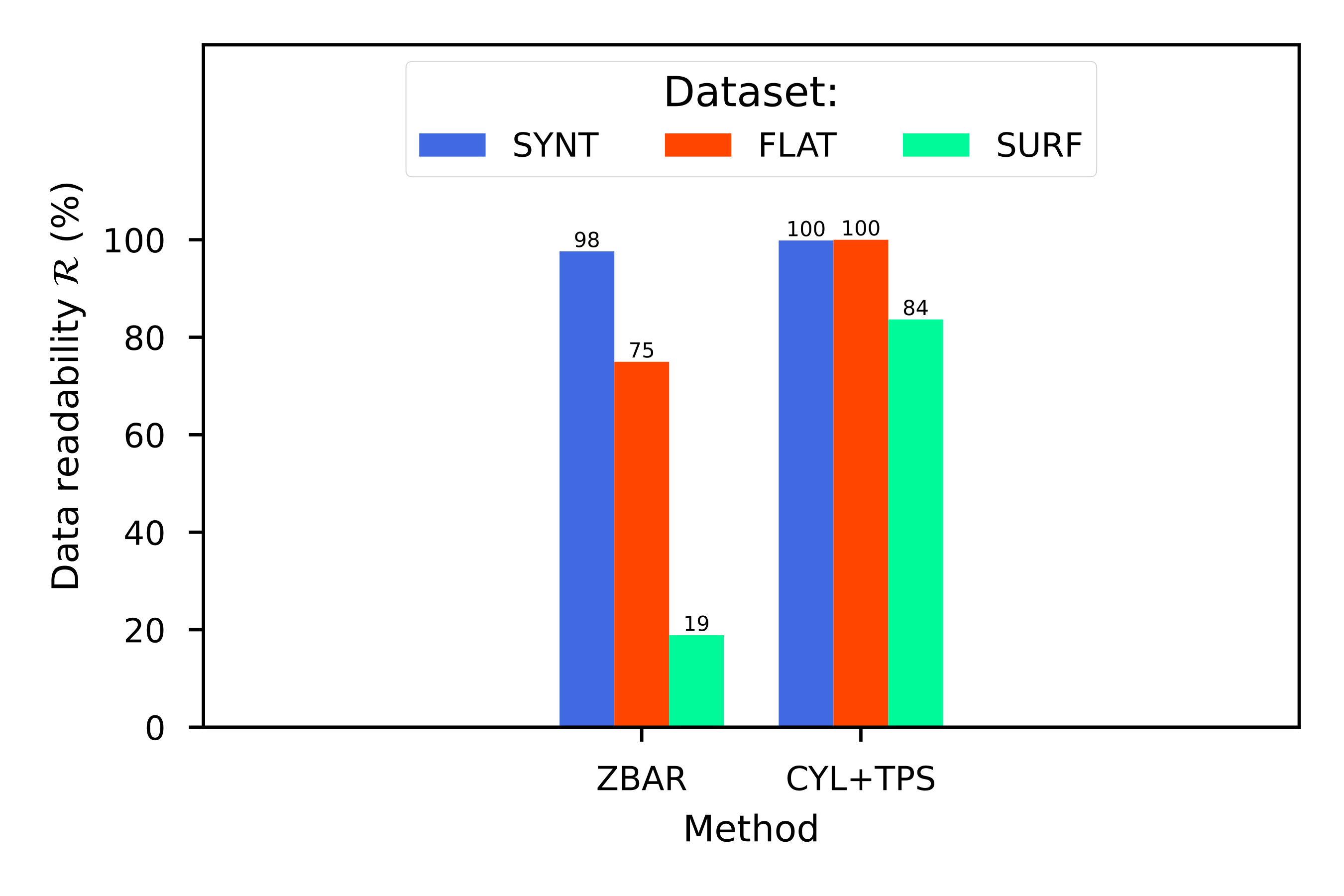

Then we introduce our proposal for arbitrary surfaces based on (iv) the thin-plate spline 2D transformation (see [fig:projections].d) and benchmark against each other. With all four methods we use a commercial barcode scanner, ZBar [98], to decode the corrected image and observe the impact of each methodology, not just on the geometrical correction but also on the actual data extraction.

In [ch:3] we have defined images as mappings from an \(\mathbb{R}^2\) plane to a scalar field \(\mathbb{R}\), assuming they are grayscale. [fig:projections] shows this \(\mathbb{R}^2\) plane and labels it as img. Let us define a projective transformation of this plane as an application between two planes:

\[f: \mathbb{R}^2 \to \mathbb{R}^2\].

Also, let the points \((x,y) \in \mathbb{R}^2\) and \((x', y') \in \mathbb{R}^2\), we can then define an analytical projective mapping between those two points as:

\[\label{eq:projectionsmap} \begin{split} x' = f_x (x, y) = a_{0,0} \cdot x + a_{0,1} \cdot y + a_{0,2} \\ y' = f_y (x, y) = a_{1,0} \cdot x + a_{1,1} \cdot y + a_{1,2} \end{split}\]

where \(a_{i,j} \in \mathbb{R}\) are the weights of the projective transform. For a more compact notation, \((x, y)\) and \((x', y')\) can be replaced by homogeneous coordinates [120] \((p_0, p_1, p_2) \in {\rm P}^2 \mathbb{R}\) and \((q_0, q_1, q_2) \in {\rm P}^2 \mathbb{R}\), respectively, that allow expressing the transformation in a full matrix notation3:

\[\label{eq:projectionmatrix} \begin{pmatrix} a_{0,0} & a_{0,1} & a_{0,2} \\ a_{1,0} & a_{1,1} & a_{1,2} \\ a_{2,0} & a_{2,1} & 1 \end{pmatrix} \cdot \begin{pmatrix} p_{0} \\ p_{1} \\ 1 \end{pmatrix} = \begin{pmatrix} q_{0} \\ q_{1} \\ 1 \end{pmatrix}\]

Finally, we can simplify this expression by naming our matrices as:

\[\label{eq:projectionproduct} \mathbf{A} \cdot \mathbf{P} = \mathbf{Q}\]

Here, we will work with four projective transformations: the affine transformation (AFF), the projective transformation (PRO), the cylindrical transformation (CYL) and the thin-plate spline transformation (TPS). We can define all of them as subsets or extensions of projective transformations, so we will have to specifically formulate \(\mathbf{A}\) for each one of them. To do so, we need to know the landmarks in the captured image (acting as vector \(\mathbf{Q}\)) and their “correct” location in a non-deformed corrected image (acting as vector \(\mathbf{P}\)).

Affine (AFF). This transformation uses the landmarks to fit a coplanar plane to the capturing device sensor (see [fig:projections_affine]). It can accommodate translation, rotation, zoom and shear deformations [120]. An affine transformation can be expressed in terms of [eq:projectionmatrix], only taking \(a_{2,0} = a_{2,1} = 0\):

\[\label{eq:projectionmatrix_affine} \begin{pmatrix} a_{0,0} & a_{0,1} & a_{0,2} \\ a_{1,0} & a_{1,1} & a_{1,2} \\ 0 & 0 & 1 \end{pmatrix} \cdot \begin{pmatrix} p_{0} \\ p_{1} \\ 1 \end{pmatrix} = \begin{pmatrix} q_{0} \\ q_{1} \\ 1 \end{pmatrix}\]

This yields to a system with only 6 unknown \(a_{i, j}\) weights. Thus, if we can map at least 3 points in the QR Code surface to a known location (e.g. finder pattern centers) we can solve the system for all \(a_{i, j}\) using the expression of [eq:projectionproduct] with:

\[\begin{split} \mathbf{A} &= \begin{pmatrix} a_{0,0} & a_{0,1} & a_{0,2} \\ a_{1,0} & a_{1,1} & a_{1,2} \\ 0 & 0 & 1 \end{pmatrix} , \\ \mathbf{P} &= \begin{pmatrix} p_{0,0} & p_{0,1} & p_{0,2} \\ p_{1,0} & p_{1,1} & p_{1,2} \\ 1 & 1 & 1 \end{pmatrix} \ and \\ \mathbf{Q} &= \begin{pmatrix} q_{0,0} & q_{0,1} & q_{0,2} \\ q_{1,0} & q_{1,1} & q_{1,2} \\ 1 & 1 & 1 \end{pmatrix}. \end{split}\]

Projective (PRO). This transformation uses landmarks to fit a noncoplanar plane to the capturing plane (see [fig:projections_proj]). Projective transformations use [eq:projectionmatrix] without any further simplification. Also, [eq:projectionproduct] is still valid, but now we have up to 8 unknown \(a_{i, j}\) weights to be determined. Therefore, we need at least 4 landmarks to solve the system for \(\mathbf{A}\), then:

\[\begin{split} \mathbf{A} &= \begin{pmatrix} a_{0,0} & a_{0,1} & a_{0,2} \\ a_{1,0} & a_{1,1} & a_{1,2} \\ a_{2,0} & a_{2,1} & 1 \end{pmatrix} , \\ \mathbf{P} &= \begin{pmatrix} p_{0,0} & p_{0,1} & p_{0,2} & p_{0,3} \\ p_{1,0} & p_{1,1} & p_{1,2} & p_{1,3} \\ 1 & 1 & 1 & 1 \end{pmatrix} \ and \\ \mathbf{Q} &= \begin{pmatrix} q_{0,0} & q_{0,1} & q_{0,2} & q_{0,3} \\ q_{1,0} & q_{1,1} & q_{1,2} & q_{1,3} \\ 1 & 1 & 1 & 1 \end{pmatrix}. \end{split}\]

Notice that the four points in must not be collinear three-by-three, if we want the mapping to be invertible [120].

Cylindrical (CYL). This transformation uses landmarks to fit a cylindrical surface, which can be decomposed into a projective transformation and a pure cylindrical deformation (see [fig:projections_cyl]). Thus, the cylindrical transformation extends the projective general transformation ([eq:projectionsmap]) and adds a non-linear term to the projection:

\[\label{eq:projectionmap_cyl} \begin{split} x' = f_x (x, y) = a_{0,0} \cdot x + a_{0,1} \cdot y + a_{0,2} + w_0 \cdot g(x,y) \\ y' = f_y (x, y) = a_{1,0} \cdot x + a_{1,1} \cdot y + a_{1,2} + w_1 \cdot g(x,y) \end{split}\]

where \(g(x, y)\) is the cylindrical term, which takes the form of [108], [111]:

\[g(x, y) = \begin{cases} \sqrt{r^2 - (c_0 - x)^2} & if \ \ r^2 - (c_0 - x)^2 \geq 0 \\ 0 & if \ \ r^2 - (c_0 - x)^2 < 0 \end{cases}\]

where \(r \in \mathbb{R}\) is the radius of the cylinder, and \(c_0 \in \mathbb{R}\) is the first coordinate of any point in the centerline of the cylinder. Now, [eq:projectionmatrix] becomes extended with another dimension for cylindrical transformations:

\[\begin{pmatrix} w_0 & a_{0,0} & a_{0,1} & a_{0,2} \\ w_1 & a_{1,0} & a_{1,1} & a_{1,2}\\ w_2 & a_{2,0} & a_{2,1} & 1 \end{pmatrix} \cdot \begin{pmatrix} g(p_0, p_1) \\ p_{0} \\ p_{1} \\ 1 \end{pmatrix} = \begin{pmatrix} q_{0} \\ q_{1} \\ 1 \end{pmatrix}\]

Applying the same reasoning as before, we have now 8 unknown \(a_{i,j}\) plus 3 unknown \(w_{j}\) weights to fit. Equivalent matrices ([eq:projectionproduct]) for cylindrical transformations need now at least 6 landmarks and look like:

\[\begin{split} \mathbf{A} &= \begin{pmatrix} w_0 & a_{0,0} & a_{0,1} & a_{0,2} \\ w_1 & a_{1,0} & a_{1,1} & a_{1,2} \\ w_2 & a_{2,0} & a_{2,1} & 1 \end{pmatrix} , \\ \mathbf{P} &= \begin{pmatrix} g(p_{0,0}, p_{1,0}) & ... & g(p_{0,5}, p_{1,5}) \\ p_{0,0} & ... & p_{0,5} \\ p_{1,0} & ... & p_{1,5} \\ 1 & ... & 1 \end{pmatrix} \ and \\ \mathbf{Q} &= \begin{pmatrix} q_{0,0} & ... & q_{0,5} \\ q_{1,0} & ... & q_{1,5} \\ 1 & ... & 1 \end{pmatrix}. \end{split}\]

Thin-plate splines (TPS). This transform uses the landmarks as centers of radial basis splines to fit the surface in a non-linear way that resembles the elastic deformation of a metal thin-plate bent around fixed points set at these landmarks [115] (see [fig:projections_tps]). The radial basis functions are real-valued functions:

\[h: [0, \inf) \to \mathbb{R}\]

that take into account a metric on a vector space. Their value only depends on the distance to a reference fixed point:

\[h_c(v) = h(|| v - c ||) \label{eq:tpskernel}\]

where \(v \in \mathbb{R}^n\) is the point in which the function is evaluated, \(c \in \mathbb{R}^n\) is the fixed point, \(h\) is a radial basis function. [eq:tpskernel] reads as "\(h_c(v)\) is a kernel of \(h\) in \(c\) with the metric \(||\cdot||\)". Similarly to cylindrical transformations ([eq:projectionmap_cyl]), we extended the affine transformation ([eq:projectionsmap]) with \(N\) nonlinear spline terms:

\[\begin{split} x' &= f_x (x, y) = a_{0,0} \cdot x + a_{0,1} \cdot y + a_{0,2} + \sum_{k=0}^{N-1} w_{0,k} \cdot h_k ((x, y)) \\ y' &= f_y (x, y) = a_{1,0} \cdot x + a_{1,1} \cdot y + a_{1,2} + \sum_{k=0}^{N-1} w_{1,k} \cdot h_k ((x, y)) \end{split}\]

where \(w_{j,k}\) are the weights of the spline contributions, and \(h_k (x, y)\) are kernels of \(h\) in \(N\) landmark points.

These radial basis function remains open to multiple definitions. Bookstein [115] found that the second order polynomial radial basis function is the proper function to compute splines in \(\mathbb{R}^2\) mappings in order to minimize the bending energy, and mimic the elastic behavior of a metal thin-plate. Thus, let \(h\) be:

\[h(r) = r^2 \ln(r)\]

with the corresponding kernels computed using the euclidean metric:

\[|| (x, y) - (c_x, c_y) || = \sqrt{(x - c_x)^2 + (y - c_y)^2}\]

Finally, in matrix representation, terms from [eq:projectionproduct] are expanded as follows:

\[\begin{split} \mathbf{A} &= \begin{pmatrix} w_{0,0} & ... & w_{0, N-1} & a_{0,0} & a_{0,1} & a_{0,2} \\ w_{1,0} & ... & w_{1,N-1} & a_{1,0} & a_{1,1} & a_{1,2} \end{pmatrix} , \\ \mathbf{P} &= \begin{pmatrix} h_0(p_{0,0}, p_{1,0}) & ... & h_0(p_{0,N-1}, p_{1,N-1}) \\ \vdots & ... & \vdots \\ h_{N-1}(p_{0,0}, p_{1,0}) & ... & h_{N-1}(p_{0,N-1}, p_{1,N-1}) \\ p_{0,0} & ... & p_{0,N-1} \\ p_{1,0} & ... & p_{1,N-1} \\ 1 & ... & 1 \end{pmatrix} \ and \\ \mathbf{Q} &= \begin{pmatrix} q_{0,0} & ... & q_{0,N-1} \\ q_{1,0} & ... & q_{1,N} \end{pmatrix}. \end{split} \label{eq:qr_tps_APQ}\]

First, notice that only \(a_{i,j}\) affine weights are present, since this definition does not include a perspective transformation. Second, in contrast with previous transformations, this system is unbalanced: we have a total of \(2N + 6\) weights to compute (\(2N\) \(w_{j,k}\) spline weights plus 6 \(a_{i,j}\) affine weights), however, we only have defined \(N\) landmarks.

In the previous transformations, we used additional landmarks to solve the system, but Bookstein imposed an additional condition of the spline contributions: the sum of \(w_{j,k}\) coefficients to be \(\mathrm{0}\), and also their cross-product with the \(p_{i,k}\) landmark coordinates [115]. With such a condition, spline contributions tend to \(\mathrm{0}\) at infinity, while affine contributions prevail. This makes our system of equations solvable and can be expressed as:

\[\begin{pmatrix} w_{0,0} & ... & w_{0, N-1} \\ w_{1,0} & ... & w_{1,N-1} \end{pmatrix} \cdot \begin{pmatrix} p_{0,0} & ... & p_{0,N-1} \\ p_{1,0} & ... & p_{1,N-1} \\ 1 & ... & 1 \end{pmatrix}^T = 0\]

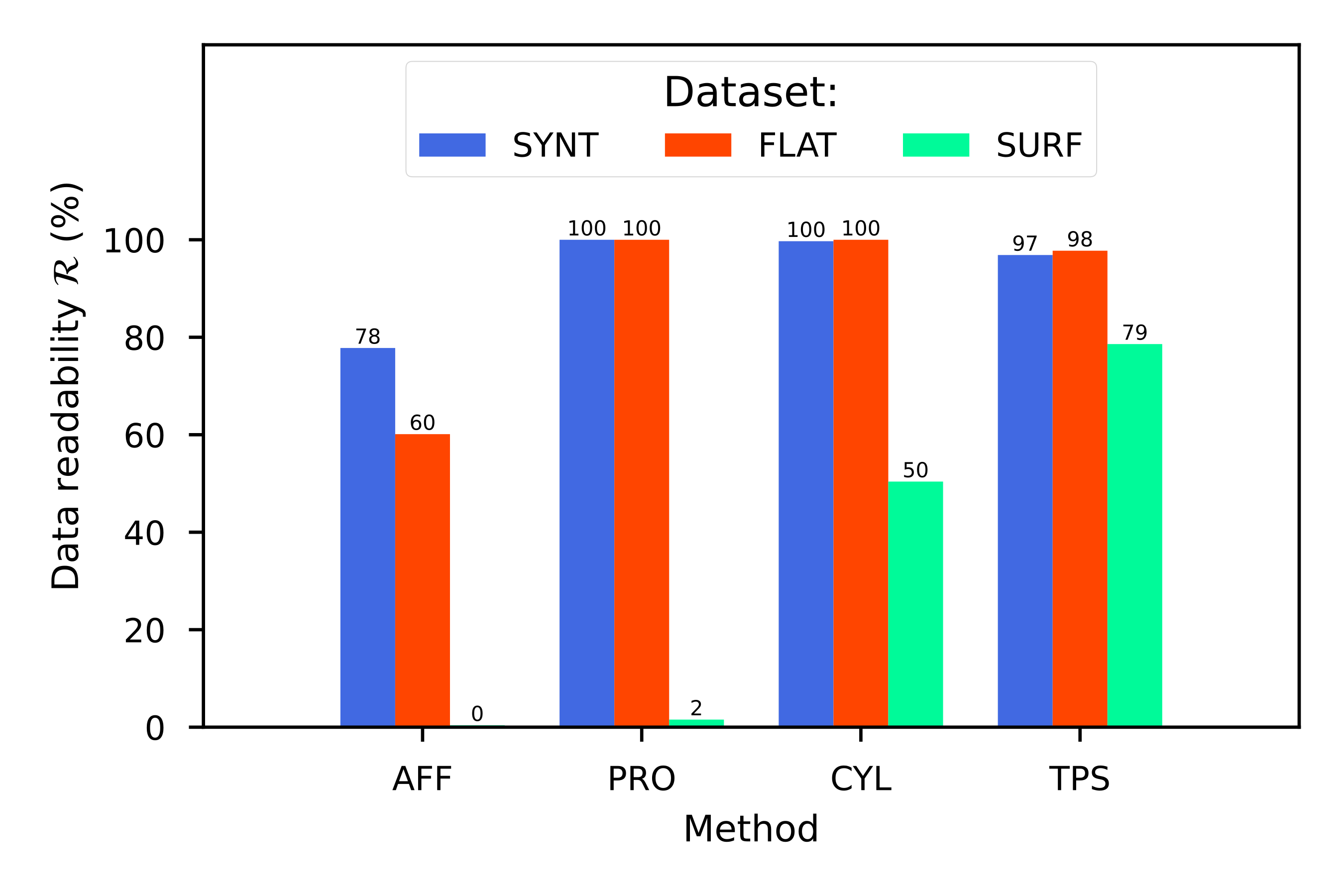

Experiments were designed to reproduce the QR Code life-cycle in different scenarios, which can be regarded as a digital communication channel: a message made of several bytes with their corresponding error correction blocks is encoded in the black and white pixels of the QR Code, that is transmitted through a visual channel (i.e. first displayed or printed and then captured by a camera), and finally decoded, and the original message retrieved (see [fig:pipeline].a).

In this context, the effects of the challenging surface topographies can be seen as an additional step in the channel, where the image is deformed in different ways prior to the capture. To investigate these effects we attached our QR codes to real complex objects to collect pictures with relevant deformations (see details below). Then, in order to expand our dataset, we incorporated an image augmentation step that programmatically added additional random projective deformations to the captured images [121]. Finally, we considered the surface fitting and correction as an additional step in the QR Code processing workflow, prior to attempting decoding. This proved more effective than directly attempting the QR Code decoding based on the distorted image with deformed position and feature patterns due to the surface topography (see [fig:pipeline].b).

We created 3 datasets to evaluate the performance of different transformations in different scenarios with arbitrary surface shapes.

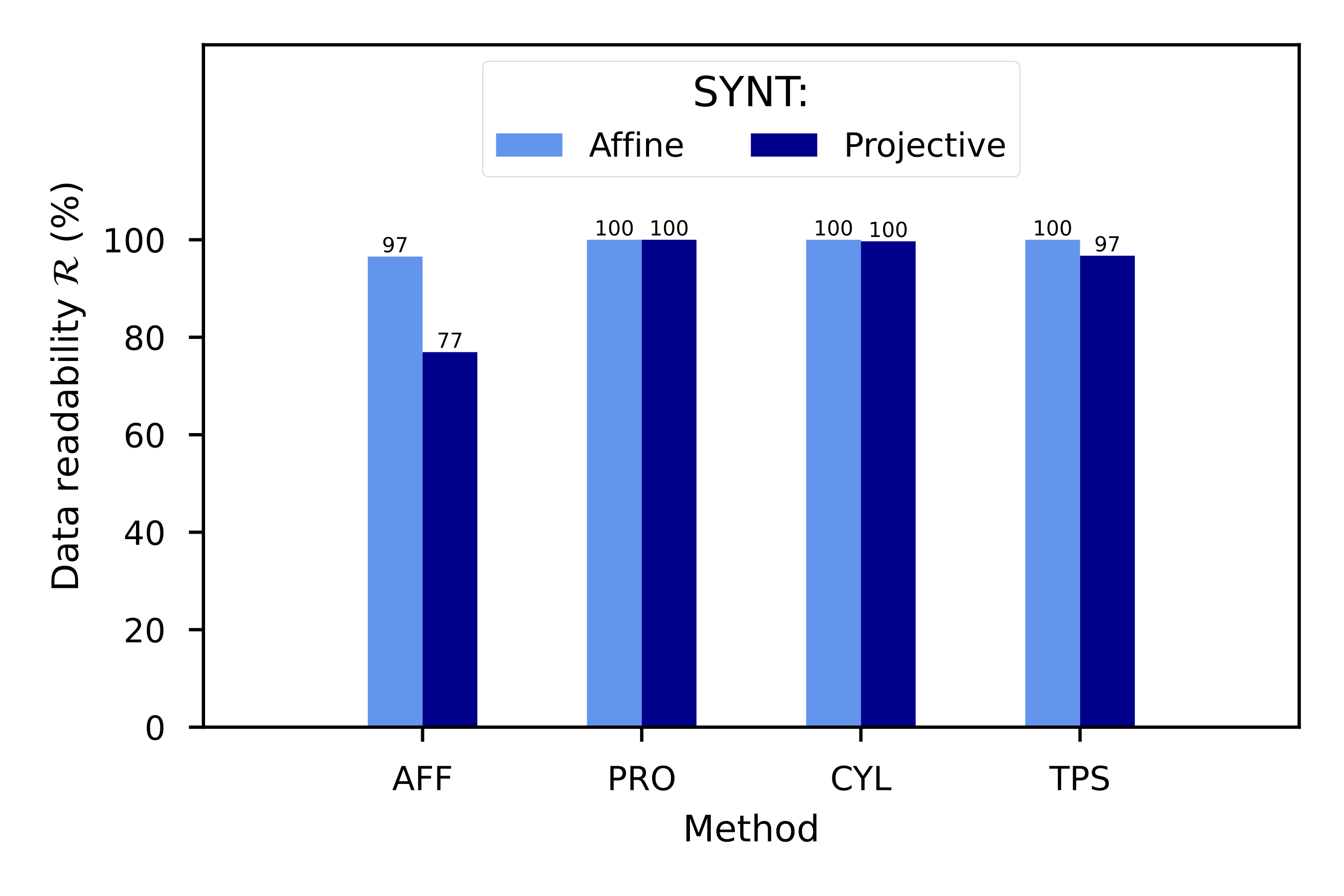

Synthetic QR Codes (SYNT). This dataset was intended to evaluate the impact of data and geometry of the QR Code on the proposed deformation correction methods. To that end, we generated the QR Codes as digital images, without printing them, and applied to them affine and projective transformations directly with image augmentation techniques (see [fig:datasetexamples].a). This dataset contained 12 QR Code versions (from version 1 to 12), each of them repeated 3 times with different random data (IDs), and 19 augmented images plus the original one. The mutual combination of all these variations yielded a total of 720 images to be processed by the proposed transformations (see [tab:datasets]).

QR Codes on flat surfaces (FLAT). In this dataset, we only encoded 1 QR Code version 7 and printed it. We placed this QR Code on different flat surfaces and captured images (see [fig:datasetexamples].b). Thus, we only expected projective deformations in this dataset, to be used as a reference. We also augmented the captured images to match the same quantity of images from the previous dataset (see [tab:datasets]).

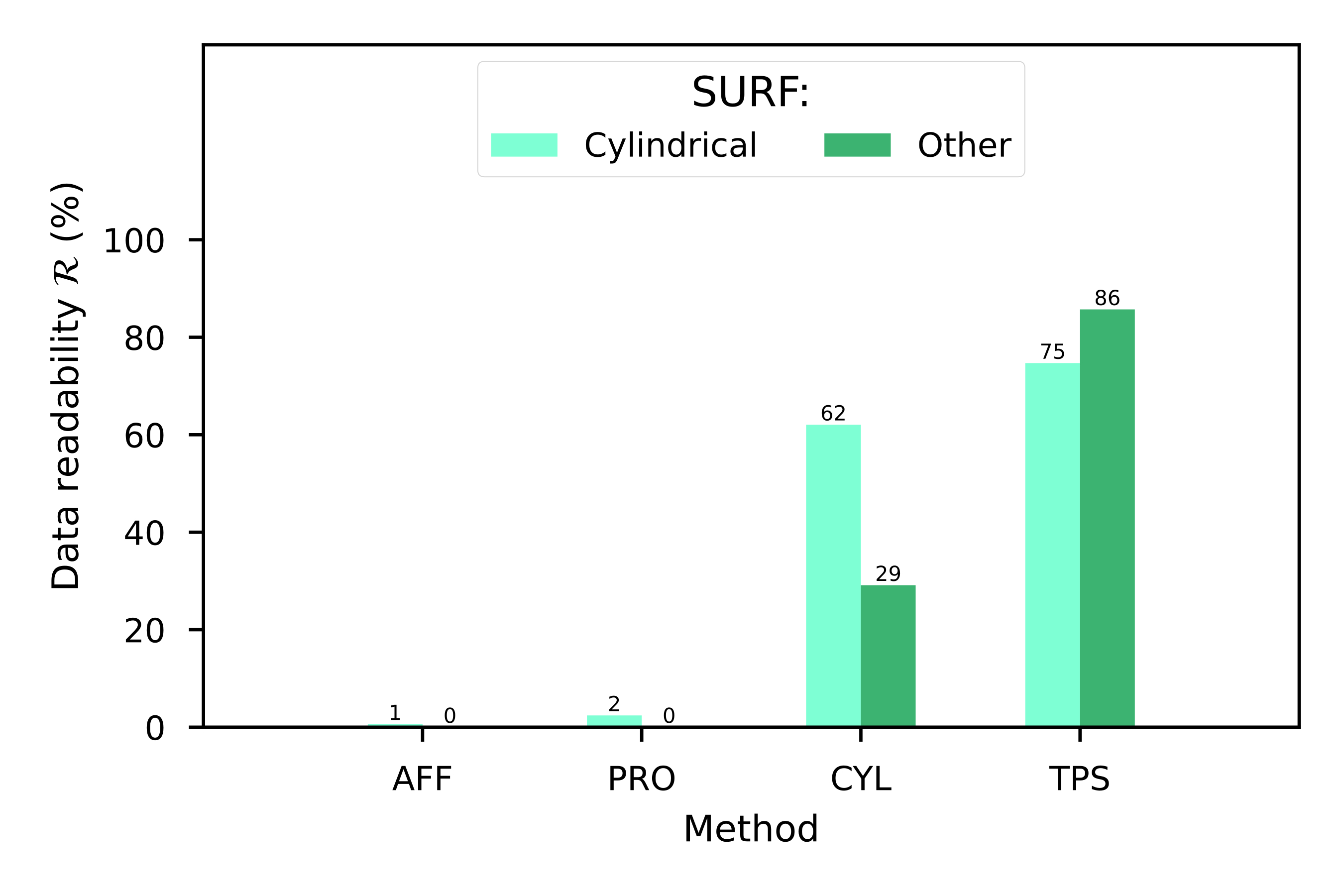

QR Codes on challenging surfaces (SURF). In this dataset, we used the same QR Code we used in the FLAT dataset, but placed on top of challenging surfaces, such as bottles, or manually deforming the QR Codes (see [fig:datasetexamples].c). We expected here to have cylindrical and arbitrary deformations in the dataset. Also, we augmented the captured images to match the size of the other datasets (see [tab:datasets]).

For all the datasets, the same features were extracted: finder patterns, alignment patterns and the fourth corner, see for more details about the extraction of those features in [ch:3].

| SYNT | Values | Dataset size |

|---|---|---|